A skilled data engineer can make a massive difference to your organization. They can even help increase the revenue of the business.

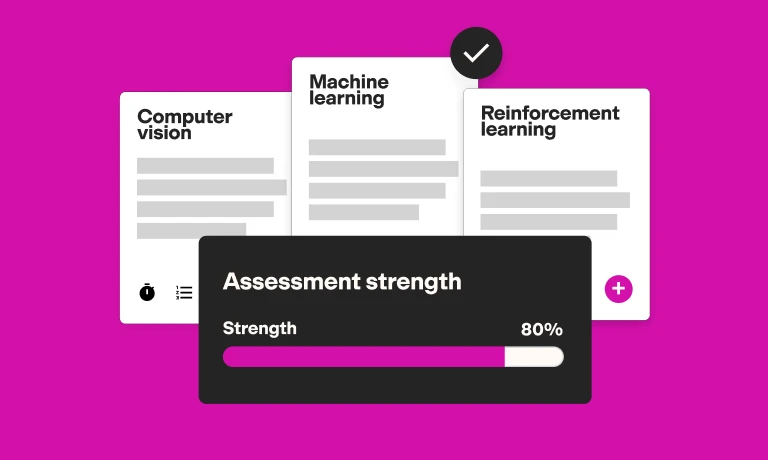

A specific range of data engineering skills is required for candidates to succeed and help your organization handle its data. Therefore, to hire the right engineer candidate, you’ll need to accurately assess candidates’ skills.

One of the best ways to do that is with skills tests, which allow you to get an in-depth understanding of candidates’ qualifications and strengths. After that, you need to invite the best applicants to an interview and ask the right data engineering questions to see who would be the best fit for the role.

Knowing which questions to ask is not an easy feat, but to make this challenge more manageable, we’ve done some of the hard work for you.

Below you’ll find data engineer interview questions you can use in the hiring process, along with sample answers you can expect from your candidates. For more ideas, check out our 55 additional data engineering interview questions.

For the best results, you need to adapt the questions to the role for which you’re hiring.

12 beginner data engineer interview questions

Use the 12 beginner data engineer interview questions in this section to interview junior candidates for your open role.

1. What made you choose a data engineering career?

Sample answer:

My passion for data engineering and computers was apparent from my childhood. I was always fascinated by computers, which led me to choose a degree in computer science.

Since I completed my degree, I’ve been passionate about data and data analytics. I have worked in a few junior data engineering positions, in which I performed well thanks to my education and background. But I’m keen to continue honing my data engineering skills.

2. What made you apply for this role in particular?

Sample answer:

This role would allow me to progress in two fields I want to learn more about—data engineering and the healthcare industry.

I’ve always been fascinated by data engineering and how it can be used in the medical field. I’m particularly interested in its relationship to healthcare technology and software. I also noticed that your organization offers intensive training opportunities, which would allow me to grow in the role.

3. How would you define what data engineering is?

Sample answer:

Data engineering is the process of making transformations to and cleansing data. It also involves profiling and aggregating data. In other words, data engineering is all about data collection and transforming raw data gathered from several sources into information that is ready to be used in the decision-making process.

4. What are data engineers responsible for?

Sample answer:

Data engineers are responsible for data query building, which might be done on an ad-hoc basis.

They are also responsible for maintaining and handling an organization’s data infrastructure, including their databases, warehouses, and pipelines. Data engineers should be capable of converting raw data into a format that allows its analysis and interpretation.

5. Which crucial technical skills are needed to be successful in a data engineer role?

Sample answer:

Some of the critical skills required to be successful in a data engineer role include an in-depth understanding of database systems, a solid knowledge of machine learning and data science, programming skills in different languages, an understanding of data structures and algorithms, and the ability to use APIs.

6. Which soft skills are required to be successful in a data engineer role?

Sample answer:

For me, some of the essential soft skills useful for data engineers include critical thinking skills, business knowledge and insight, cognitive flexibility, and the ability to communicate successfully with stakeholders (either verbally or in writing).

7. Which critical frameworks and apps are used by data engineers?

Sample answer:

Three of the essential tools used by data engineers are Hadoop, Python, and SQL.

I’ve used each of these in my previous role, in addition to a range of frameworks such as Spark, Kafka, PostgreSQL, and Elasticsearch. I’m comfortable using PostgreSQL. It’s easy to use, and its PostGIS extension makes it possible to run geospatial queries.

To assess candidates’ experience with Apache Kafka, you can use our selection of the best Kafka interview questions.

8. Can you describe the difference between the role of a data architect and a data engineer?

Sample answer:

Whereas data architects handle the data they receive from several different sources, data engineers focus on creating the data warehouse pipeline. Data engineers also have to set up the architecture that lies behind the data hubs.

9. What is your process when working on a data analysis project?

Sample answer:

I follow a specific process when working on a new data analysis project.

First, I try to get an understanding of the scope of the whole project to learn what it requires. I then analyze the critical details behind the metrics and then implement my knowledge of the project to create and build data tables that have the right granularity level.

10. How would you define data modeling?

Sample answer:

Data modeling involves producing a representation of the intricate software designs and presenting it in layman’s terms. The representation would show the data objects and the specific rules that pertain to them. The visual representations are basic, meaning that anyone can interpret them.

11. How would you define big data?

Sample answer:

Big data refers to a vast quantity of data that might be structured or unstructured. With data like this, it’s usually tricky to process it with traditional approaches, so many data engineers use Hadoop for this, as it facilitates the data handling process.

12. What is the difference between unstructured and structured data?

Sample answer:

Some key differences between structured and unstructured data are:

Structured data requires an ELT integration tool and is stored in a DBMS (database management system) or tabular format

Unstructured data uses a data lake storage approach that takes more space than structured data

Structured data is harder to scale because of its fixed schema, while unstructured data scales more easily

27 intermediate data engineer interview questions

Choose from the following 27 intermediate data engineering interview questions to evaluate a mid-level data engineer for your organization.

1. Can you explain what a snowflake schema is?

Sample answer:

Snowflake schemas are so called because their layers of normalized tables resemble a snowflake. A snowflake schema has many dimensions and is used to structure data. Once the data has been normalized, it is divided into additional tables.

2. Can you explain what a star schema is?

Sample answer:

A star schema, also referred to as the star join schema, is a basic schema that is used in data warehousing.

Star schemas are so called because the structure resembles a star, with a central fact table surrounded by its associated dimension tables. These schemas are ideal for vast quantities of data.

3. What is the difference between a star schema and a snowflake schema?

Sample answer:

Whereas star schemas have a simple design and use fast cube processing, snowflake schemas use an intricate data handling storage approach and slow cube processing.

With star schemas, the hierarchies are stored in the dimension tables themselves, whereas with snowflake schemas, the hierarchies are split out into separate tables.
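
To make the contrast concrete, here is a minimal sketch using Python's built-in sqlite3 module. The table and column names (dim_product_star, dim_product_snow, dim_category, fact_sales) are hypothetical and chosen only for illustration. The star variant keeps the category level inside the product dimension, while the snowflake variant moves it to its own table and needs one more join.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Star schema: the product dimension keeps its category hierarchy inline.
CREATE TABLE dim_product_star (
    product_id INTEGER PRIMARY KEY,
    product_name TEXT,
    category_name TEXT
);

-- Snowflake schema: the category level is normalized into its own table.
CREATE TABLE dim_category (
    category_id INTEGER PRIMARY KEY,
    category_name TEXT
);
CREATE TABLE dim_product_snow (
    product_id INTEGER PRIMARY KEY,
    product_name TEXT,
    category_id INTEGER REFERENCES dim_category(category_id)
);

-- The fact table is the same in both designs.
CREATE TABLE fact_sales (
    sale_id INTEGER PRIMARY KEY,
    product_id INTEGER,
    amount REAL
);

INSERT INTO dim_product_star VALUES (1, 'Laptop', 'Electronics'), (2, 'Desk', 'Furniture');
INSERT INTO dim_category VALUES (10, 'Electronics'), (20, 'Furniture');
INSERT INTO dim_product_snow VALUES (1, 'Laptop', 10), (2, 'Desk', 20);
INSERT INTO fact_sales VALUES (1, 1, 1200.0), (2, 1, 800.0), (3, 2, 300.0);
""")

# Star: one join from the fact table to the dimension.
star_query = """
SELECT p.category_name, SUM(f.amount)
FROM fact_sales f
JOIN dim_product_star p ON p.product_id = f.product_id
GROUP BY p.category_name
ORDER BY p.category_name
"""

# Snowflake: an extra join to reach the normalized category table.
snowflake_query = """
SELECT c.category_name, SUM(f.amount)
FROM fact_sales f
JOIN dim_product_snow p ON p.product_id = f.product_id
JOIN dim_category c ON c.category_id = p.category_id
GROUP BY c.category_name
ORDER BY c.category_name
"""

star_totals = conn.execute(star_query).fetchall()
snowflake_totals = conn.execute(snowflake_query).fetchall()
print(star_totals)
print(snowflake_totals)
```

Both queries return the same totals. The difference is the number of joins, which is one reason star schemas tend to be simpler to query.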

4. What is the difference between a data warehouse and an operational database?

Sample answer:

If you use operational databases, your main focus is manipulating data and deletion operations. In contrast, if you use data warehousing, your primary aim is to use aggregation functions and carry out calculations.

5. Which approach would you use to validate data migration between two databases?

Sample answer:

Since different circumstances require different validation approaches, it’s essential to choose the right one. In some cases, a basic comparison might be the best approach to validate data migration between two databases. In contrast, other situations might require a validation step after the migration has taken place.
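
As an illustration of the "basic comparison" approach, the sketch below compares a row count and a checksum for the same table in a source and a target database. It uses two in-memory SQLite databases as stand-ins. The helper name table_fingerprint and the customers table are assumptions made for this example, not part of any particular migration tool.

```python
import hashlib
import sqlite3

def table_fingerprint(conn, table):
    """Return (row_count, checksum) for a table, reading rows in a stable order."""
    # The table name is interpolated directly, so only pass trusted names here.
    rows = conn.execute(f"SELECT * FROM {table} ORDER BY 1").fetchall()
    digest = hashlib.sha256()
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
    return len(rows), digest.hexdigest()

# Stand-ins for the source and target databases of a migration.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for db in (source, target):
    db.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
source.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ana"), (2, "Ben")])
target.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ana"), (2, "Ben")])

migration_ok = table_fingerprint(source, "customers") == table_fingerprint(target, "customers")
print("migration matches:", migration_ok)
```

For large tables, a candidate might instead compute counts and aggregates inside each database rather than pulling every row into Python.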

6. What is your experience with ETL? Which is your preferred ETL tool?

Sample answer:

I’ve used several ETL tools in my career. In addition to SAS Data Management and Services, I have also used PowerCenter.

Of these, my number one choice would be PowerCenter because of its ease of access to data and the simplicity with which you can carry out business data operations. PowerCenter is also very flexible and can be integrated with Hadoop.

7. Can you explain how you can increase the revenue of a business using data analytics and big data?

Sample answer:

There are a few ways that data analytics and big data help to increase the revenue of a business. The efficient use of data can:

Improve the decision making process

Help keep costs low

Help organizations set achievable goals

Enhance customer satisfaction by anticipating needs and personalizing products and services

Mitigate risk and enhance fraud detection

8. Have you used skewed tables in Hive? What do they do?

Sample answer:

I’ve often used skewed tables in Hive. With a skewed table specified as such, the values that frequently appear (known as heavy skewed values) are divided into many individual files. All the other values go to a separate file. The result is enhanced performance and efficient processing.

9. What are some examples of available components in the Hive data model?

Sample answer:

Some of the critical components of the Hive data model include:

Tables

Partitions

Buckets

It’s possible to categorize data into these three categories.

10. What does the .hiverc file do in Hive?

Sample answer:

The .hiverc file is loaded and executed upon launching the shell. It’s useful for adding Hive configuration, such as making column headers appear in query results, or for adding a jar or file. You can also use the .hiverc file to set parameter values.

11. Can you explain what SerDe means in Hive?

Sample answer:

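SerDe is short for Serializer/Deserializer. It tells Hive how to read records from a table and how to write them back. There are several SerDe implementations in Hive, some of which include: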

DelimitedJSONSerDe

OpenCSVSerDe

ByteStreamTypedSerDe

It’s possible to write a custom SerDe implementation as well.

12. Which collection data types does Hive support?

Sample answer:

Some of the critical collection functions or data types that Hive can support include:

Map

Struct

Array

While arrays include a selection of different elements that are ordered, and map includes key-value pairs that are not ordered, struct features different types of elements.

13. Can you explain how Hive is used in Hadoop?

Sample answer:

The Hive interface facilitates data management for data that is stored in Hadoop. Data engineers also use Hive to map and use HBase tables. Essentially, you can use Hive with Hadoop to read data through SQL and handle petabytes of data with it.

14. Are you aware of the functions used for table creation in Hive?

Sample answer:

To my knowledge, there are a few functions used for table creation in Hive (known as table-generating functions, because they turn one input row into several output rows), including:

JSON_tuple()

Explode(array)

Stack()

Explode(map)

15. Can you explain what COSHH means?

Sample answer:

This five-letter acronym refers to cluster and application-level scheduling that helps enhance a job’s completion time. COSHH stands for classification optimization scheduling for heterogeneous Hadoop systems.

16. Can you explain what FSCK means?

Sample answer:

FSCK, which is also referred to as a file system check, is a critical command. Data engineers use it to assess if there are any inconsistencies or issues in files.

17. What is Hadoop?

Sample answer:

The Hadoop open source framework is ideal for manipulating data and storing data. It also helps data engineers run apps on clusters, and it facilitates the big data handling process.

18. What are the advantages of Hadoop?

Sample answer:

Hadoop allows you to handle a vast amount of data from new sources. There’s no need to spend extra on data warehouse maintenance with Hadoop, and it also helps you access structured and unstructured data. Hadoop 2 also can be scaled, reaching 10,000 nodes for each cluster.

19. Why is the distributed cache important in Apache Hadoop?

Sample answer:

The distributed cache feature of Apache Hadoop is convenient. It’s crucial for enhancing a job’s performance and is responsible for file caching. To put it another way, it caches the applications’ files and can handle read-only, zip, and jar files.

20. What are the core features of Hadoop?

Sample answer:

For me, some of the essential features of Hadoop include:

Cluster-based data storage

Replica creation

Hardware compatibility and versatility

Rapid data processing

Scalable clusters

21. How would you define Hadoop streaming?

Sample answer:

The Hadoop streaming utility permits data engineers to create Map/Reduce jobs. With Hadoop streaming, the jobs can then be submitted to a specific cluster. Map/Reduce jobs can be run with a script thanks to Hadoop streaming.
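
Hadoop streaming scripts exchange records as lines of text, conventionally with the key and value separated by a tab. The sketch below shows a word-count mapper and reducer written in that style and simulates the map, sort, and reduce stages locally on an in-memory list. The function names and sample input are hypothetical. In a real streaming job, each function would live in its own script, read from standard input, and print its records.

```python
from itertools import groupby

def mapper(lines):
    """Emit one 'word<TAB>1' record per word, as a streaming mapper would."""
    for line in lines:
        for word in line.strip().lower().split():
            yield f"{word}\t1"

def reducer(sorted_records):
    """Sum the counts for each word; the input must already be sorted by key."""
    parsed = (record.split("\t") for record in sorted_records)
    for word, group in groupby(parsed, key=lambda pair: pair[0]):
        yield f"{word}\t{sum(int(count) for _, count in group)}"

# Local simulation of map -> sort (standing in for the shuffle) -> reduce.
sample_lines = ["big data big pipelines", "data pipelines"]
word_counts = list(reducer(sorted(mapper(sample_lines))))
print(word_counts)
```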

22. How familiar are you with blocks and block scanner concepts? What do they do?

Sample answer:

A block is the tiniest unit that data files are composed of, which Hadoop will render by dividing larger files into small units. A block scanner is used to verify which blocks or tiny units are found in the DataNode.

23. Which steps would you use to deploy big data solutions?

Sample answer:

The three steps I would use to deploy big data solutions are:

Ingest and extract the data from each source, such as Oracle or MySQL

Store the data in HDFS or HBase

Process the data by using a framework such as Hive or Spark

24. Which modes are you aware of in Hadoop?

Sample answer:

I have working knowledge of the three main Hadoop modes:

Fully distributed mode

Standalone mode

Pseudo-distributed mode

While I’d use standalone mode for debugging, the pseudo-distributed mode is used for testing purposes, specifically when resources are not a problem, and the fully-distributed mode is used in production.

25. Which approaches would you use to increase security in Hadoop?

Sample answer:

There are a few things I’d do to enhance the security level of Hadoop:

Enable Kerberos, an authentication protocol designed for security purposes

Configure transparent encryption (a step that ensures data in specific HDFS directories is encrypted when it is written and decrypted when it is read)

Use tools such as the REST API secure gateway Knox to enhance authentication

26. Can you explain what data locality means in Hadoop?

Sample answer:

Since data contained in an extensive data system is so large, shifting it across the network can cause network congestion.

This is where data locality can help. It involves moving the computation towards the location of the actual data, which reduces the congestion. In short, it means the data is local.

27. What does the combiner function help you achieve in Hadoop?

Sample answer:

The combiner function is essential for keeping network congestion low. It’s referred to as a mini-reducer: it aggregates each mapper’s output locally before the data is sent to the reducers, which helps optimize Map/Reduce jobs.
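
The effect of a combiner can be sketched in plain Python without a cluster. In this simulation, each string in splits plays the role of one mapper's input, and the function names are hypothetical. The point is only to show that pre-aggregating per mapper reduces the number of records that would cross the network while leaving the final result unchanged.

```python
from collections import Counter

def map_words(line):
    """Map step: emit a (word, 1) pair for every word in the line."""
    return [(word, 1) for word in line.split()]

def combine(pairs):
    """Combiner: pre-aggregate one mapper's output before it is shuffled."""
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    return list(totals.items())

def reduce_counts(all_pairs):
    """Reduce step: sum the (possibly pre-aggregated) counts per word."""
    totals = Counter()
    for word, count in all_pairs:
        totals[word] += count
    return dict(totals)

splits = ["a a a b", "a b b b"]  # one input split per simulated mapper
mapped = [map_words(line) for line in splits]
combined = [combine(pairs) for pairs in mapped]

# Count how many records would be sent to the reducers in each case.
records_without_combiner = sum(len(pairs) for pairs in mapped)
records_with_combiner = sum(len(pairs) for pairs in combined)
result = reduce_counts(pair for pairs in combined for pair in pairs)
print(records_without_combiner, records_with_combiner, result)
```

This only works because addition is associative and commutative. A combiner is not a safe drop-in for operations such as computing a mean directly.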

23 advanced data engineer interview questions

Below, you’ll find 23 advanced data engineering interview questions to gauge the proficiency of your senior-level data engineering candidates. Select the ones that suit your organization and the role you’re hiring for.

1. What does ContextObject do in Hadoop, and why is it important?

Sample answer:

I use ContextObject to enable the Mapper/Reducer to interact with systems in Hadoop. It’s also helpful in ensuring that critical information is accessible while map operations are taking place.

2. Can you name the different Reducer phases in Hadoop? What does each of these do?

Sample answer:

The three Reducer phases in Hadoop are:

Setup()

Cleanup()

Reduce()

I use setup() to configure or adjust specific parameters, including how big the input data is, cleanup() for temporary file cleaning, and reduce() for defining which task needs to be done for values of the same key.

3. What does secondary NameNode do? Can you explain its functions?

Sample answer:

If I wanted to avoid specific issues with edit logs, which can be challenging to manage, secondary NameNode would allow me to achieve this. It’s tasked with merging the edit logs by first acquiring them from NameNode, retrieving a new FSImage, and finally using the FSImage to lower the startup time.

4. Can you explain what would occur if NameNode were to crash?

Sample answer:

In case NameNode crashes, the company would lose a vast quantity of metadata. In most cases, the FSImage of the secondary NameNode can help to re-establish the NameNode.

5. How are NAS and DAS different in Hadoop?

Sample answer:

Whereas NAS has a storage capacity of 10⁹ to 10¹² bytes, a reasonable management cost per GB, and uses Ethernet to transmit data, DAS has a storage capacity of 10⁹ bytes, a higher management cost per GB, and uses IDE to transmit data.

6. What is a distributed file system in Hadoop?

Sample answer:

A [distributed file system](https://www.techopedia.com/definition/1825/distributed-file-system-dfs) in Hadoop is a scalable system designed to run smoothly on big clusters. It stores the data contained in Hadoop and offers high bandwidth to make this easier. The system also helps maintain the data’s quality.

7. Can you explain what *args means?

Sample answer:

In Python, the *args syntax in a function definition lets the function accept any number of positional (ordered) arguments, which arrive inside the function as a tuple; args stands for arguments.
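
A short Python illustration follows. The function name total is hypothetical, and only the leading asterisk is required by the language; the name args is a convention.

```python
def total(*args):
    """Accept any number of positional arguments and sum them."""
    # Inside the function, args is a tuple holding the values in call order.
    return sum(args)

print(total(1, 2, 3))

values = [10, 20]
# The * operator also unpacks a sequence into positional arguments at the call site.
print(total(*values))
```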

8. Can you explain what **kwargs means?

Sample answer:

In Python, the **kwargs syntax in a function definition lets the function accept any number of named (keyword) arguments, which arrive inside the function as a dictionary; kwargs stands for keyword arguments.
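
Here is a small Python sketch of the idea. The function build_connection_settings and its default values are made up for this example.

```python
def build_connection_settings(**kwargs):
    """Collect arbitrary keyword arguments into a settings dictionary."""
    settings = {"host": "localhost", "port": 5432}  # hypothetical defaults
    settings.update(kwargs)  # kwargs is a dict mapping argument names to values
    return settings

settings = build_connection_settings(port=6543, user="etl")
print(settings)
```

Callers can override a default or add a new option without the function signature having to list every possible name.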

9. What are the differences between tuples and lists?

Sample answer:

Both tuples and lists are data structure classes, but there are a couple of differences between them.

Whereas tuples are immutable and can’t be edited or altered, lists are mutable and can be edited. This means that certain operations may work when used with lists, but may not work with tuples.
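
The difference is easy to demonstrate in Python. The variable names below are arbitrary.

```python
columns = ["id", "name"]      # list: mutable
columns.append("created_at")  # an in-place change works

point = (3, 4)                # tuple: immutable
try:
    point[0] = 10             # item assignment is not supported on tuples
    tuple_changed = True
except TypeError:
    tuple_changed = False

# Because it is immutable (and hashable when its items are), a tuple can be a dict key.
distances = {point: 5.0}
print(columns, tuple_changed, distances[(3, 4)])
```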

10. In SQL queries, what approach would you use to handle duplicate data points?

Sample answer:

The main way to handle duplicate data points is to use specific keywords in SQL. I’d use DISTINCT and UNIQUE to reduce the number of duplicate data points. However, other methods are available to handle duplicates as well, such as using the GROUP BY keywords.
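
The sketch below runs both approaches against an in-memory SQLite database through Python's sqlite3 module. The events table and its rows are invented for illustration. Note that SQLite treats UNIQUE as a constraint rather than a SELECT keyword, so only DISTINCT and GROUP BY are shown.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (user_id INTEGER, action TEXT)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?)",
    [(1, "login"), (1, "login"), (2, "login"), (2, "logout"), (2, "logout")],
)

# DISTINCT returns each (user_id, action) combination once.
unique_rows = conn.execute(
    "SELECT DISTINCT user_id, action FROM events ORDER BY user_id, action"
).fetchall()

# GROUP BY with HAVING reports which combinations are duplicated, and how often.
duplicates = conn.execute(
    """
    SELECT user_id, action, COUNT(*) AS copies
    FROM events
    GROUP BY user_id, action
    HAVING COUNT(*) > 1
    ORDER BY user_id, action
    """
).fetchall()

print(unique_rows)
print(duplicates)
```

DISTINCT hides duplicates in the output, while the GROUP BY query helps locate them, which is often the first step before cleaning the underlying table.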

11. What are the advantages of working with big data in the cloud?

Sample answer:

Many organizations are transitioning to the cloud—and for a good reason.

For me, there are plenty of reasons why working with big data in the cloud is beneficial. Not only can you access your data from any location, but you also have the advantage of accessing backup versions in urgent scenarios. There’s the added benefit that scaling is easy.

12. What are some drawbacks of working with big data in the cloud?

Sample answer:

A few drawbacks of working with big data in the cloud include that security can be an issue, and that data engineers can face technical issues. There are rolling costs to consider and you might not have much control over the infrastructure.

13. Which area is your primary focus—databases or pipelines?

Sample answer:

Since I have mainly worked in startup teams, I have experience with both databases and pipelines.

I’m capable of using each of these components and I’m also able to use data warehouse databases and data pipelines for larger quantities of data.

14. If you have an individual data file, is it possible to create several tables for it?

Sample answer:

Yes, it’s possible to create several tables for one individual data file. The schemas are stored in the Hive metastore, meaning you can retrieve the related data’s results without any difficulty.

15. Can you describe what happens if a data block is corrupted and the block scanner detects it?

Sample answer:

There are a few things that happen when corrupted data blocks are detected by a block scanner.

Initially, the DataNode will report the corrupted block to the NameNode. Then, the NameNode starts to make a new replica by using a healthy copy of the block that is already on another DataNode.

Once the number of healthy replicas matches the replication factor, the corrupted block will be deleted.

16. How would you explain what file permissions are in Hadoop?

Sample answer:

In Hadoop, a permissions model is used, which enables files’ permissions to be managed. Different user classes can be used, such as “owner,” “group,” or “others”.

Some of the specific permissions of user classes include “execute,” “write,” and “read,” where “write” is a permission to write a file and “read” is to have the file read.

In a directory, “write” pertains to the creation or deletion of a directory, while “read” is a permission to list the directory’s contents. “Execute” gives access to the directory’s child. Permissions are important as they either give access or deny requests.
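
HDFS permissions follow a POSIX-like model, so the owner, group, and others triplets can be illustrated with Python's standard stat module. This sketch decodes ordinary POSIX mode bits locally and does not talk to HDFS. The helper name describe_mode and the two example modes are assumptions made for this illustration.

```python
import stat

def describe_mode(mode):
    """Split POSIX-style permission bits into owner, group, and others."""
    text = stat.filemode(mode)  # for example '-rw-r--r--'
    return {
        "type": text[0],
        "owner": text[1:4],
        "group": text[4:7],
        "others": text[7:10],
    }

file_mode = stat.S_IFREG | 0o644  # regular file: owner can read and write
dir_mode = stat.S_IFDIR | 0o750   # directory: no access for others

print(describe_mode(file_mode))
print(describe_mode(dir_mode))
```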

17. How would you change the files in arbitrary locations in Hadoop?

Sample answer:

Although Hadoop doesn’t permit modifications to files at arbitrary offsets, a single writer can write to a file in append-only mode. Any writes made to a file in Hadoop are carried out at the end of the file.

18. Which process would you follow to add a node to a cluster?

Sample answer:

I’d start by adding the IP address or host name in dfs.hosts.slave file. I would then carry out a cluster refresh using $hadoop dfsadmin -refreshNodes.

19. How does Python assist data engineers?

Sample answer:

Python is useful for creating data pipelines. It also enables data engineers to write ETL scripts, carry out analyses, and establish statistical models. So, it is critical for analyzing data and ETL.
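
As a rough illustration of an ETL script, the sketch below uses only the Python standard library. The inline CSV data, the orders table, and the function names extract, transform, and load are all hypothetical. A production pipeline would read from real sources and typically add logging, validation, and error handling.

```python
import csv
import io
import sqlite3

RAW_CSV = """order_id,amount,country
1, 19.99 ,us
2,,de
3,5.00,US
"""

def extract(text):
    """Extract: parse CSV text into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows without an amount and normalize types and casing."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip incomplete records
        cleaned.append((int(row["order_id"]), float(amount), row["country"].strip().upper()))
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into a target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, amount REAL, country TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
revenue_by_country = conn.execute(
    "SELECT country, ROUND(SUM(amount), 2) FROM orders GROUP BY country"
).fetchall()
print(revenue_by_country)
```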

20. Can you explain the difference between a relational and non-relational database?

Sample answer:

Relational databases, or RDBMSs, include Oracle, MySQL, and IBM DB2. Non-relational databases, referred to as NoSQL, include Cassandra, Couchbase, and MongoDB.

An RDBMS is normally used in larger enterprises to store structured data, while non-relational databases are used for the storage of data that doesn’t have a specific structure.

21. Could you list some Python libraries that can facilitate efficient data processing?

Sample answer:

Some of the Python libraries that can facilitate the efficient processing of data include:

TensorFlow

SciKit-Learn

NumPy

Pandas

22. Could you explain what rack awareness means?

Sample answer:

Rack awareness in Hadoop can be used to boost the bandwidth of the network. Rack awareness describes how a NameNode can keep the rack id of a DataNode in order to gain rack information.

Rack awareness helps data engineers improve network bandwidth by selecting DataNodes that are closer to the client who has made the read or write request.

23. Can you explain what Heartbeat messages are?

Sample answer:

In Hadoop, the signals passed between a DataNode and the NameNode are called heartbeats. Each DataNode sends them to the NameNode at regular intervals to show that it is still alive.
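
The heartbeat idea can be sketched as a simple liveness check. This is a conceptual simulation, not Hadoop code. It assumes DataNodes report to the NameNode, and the node names, timestamps, and 30-second timeout are illustrative values rather than Hadoop defaults.

```python
def find_dead_nodes(last_heartbeat, now, timeout_seconds):
    """Return the nodes whose last heartbeat is older than the timeout."""
    return sorted(
        node
        for node, seen_at in last_heartbeat.items()
        if now - seen_at > timeout_seconds
    )

# Hypothetical timestamps (in seconds) of the most recent heartbeat per DataNode.
last_heartbeat = {"datanode-1": 100.0, "datanode-2": 97.0, "datanode-3": 40.0}

dead = find_dead_nodes(last_heartbeat, now=101.0, timeout_seconds=30.0)
print(dead)
```

A node that misses heartbeats for longer than the timeout is treated as unavailable, which is what would then trigger re-replication of its blocks.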

During which stage of the hiring process should you use data engineering interview questions?

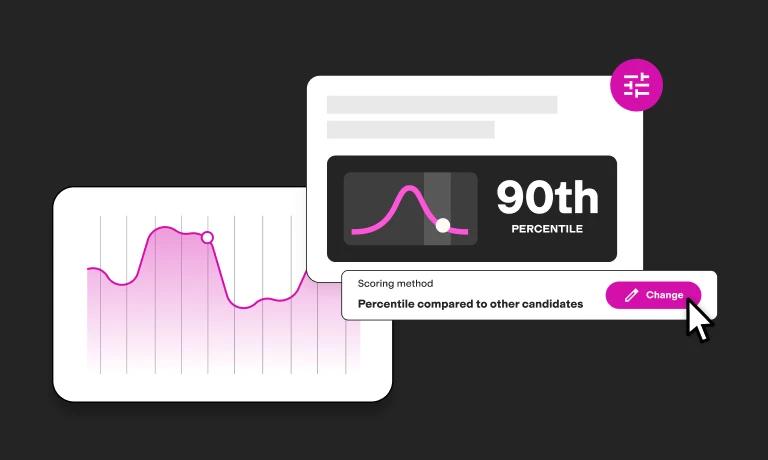

If you’re using skills tests (which can significantly lower the time to hire), use the above data engineering interview questions after you’ve received the results of the assessments.

Taking this approach is beneficial as you can filter out unsuitable candidates, avoid interviewing candidates who do not have the required skills, and concentrate on the most promising applicants.

What’s more, the insights you gain from skills assessments can help you improve the interview process and help you gain a deeper understanding of your candidates’ skills when interviewing them.

Combine data engineer interview questions and skills assessments to hire the perfect fit

You’re now ready to hire the right data engineer for your organization!

We recommend that you use the right interview questions that reflect your organization’s needs and the requirements of the role. And, if you need to assess applicants’ proficiency in Apache Spark, check out our selection of the best Spark interview questions.

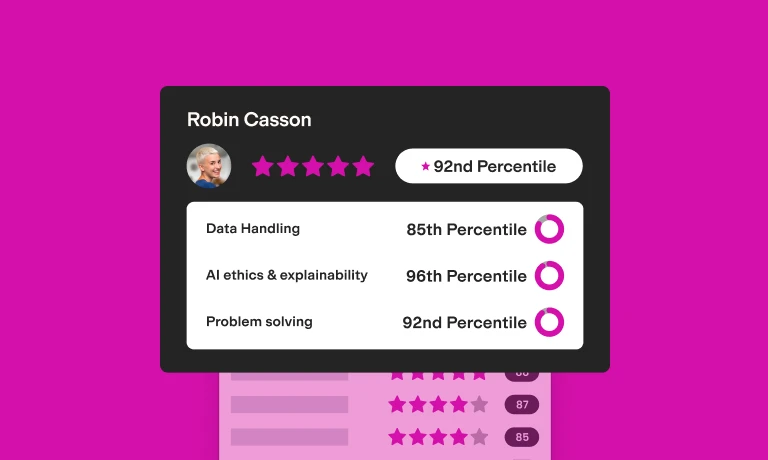

The right interview questions, in combination with skills assessments for a data engineer role, can help you find the best fit for your business by enabling you to:

Make sound hiring decisions

Validate your candidates’ skills

Reduce unconscious bias

Speed up hiring

Optimize recruitment costs

After attracting candidates with a strong data engineer job description, combine the data engineering interview questions in this article with a thorough skills assessment to hire top talent. Using these approaches can help guarantee that you’ll find exceptional data engineers for your organization.

With TestGorilla, you’ll find the recruitment process to be simpler, faster, and much more effective. Get started for free today and start making better hiring decisions, faster and bias-free.
