15 tricky Docker interview questions and answers

Docker is a software platform that simplifies the process of building, running, managing, and distributing applications.

Hiring the wrong candidate for a Docker specialist role could lead to inefficient container management, flawed application deployment, and a negative impact on your system’s overall productivity. Evaluating every candidate thoroughly is of utmost importance for these roles.

This guide discusses how to test Docker specialists on their expertise in an interview. We’ve listed 15 tricky Docker interview questions to help you assess your candidates’ practical knowledge, theory, and ability to adapt in various situations.

Try pairing these interview questions with TestGorilla’s Docker test for a more comprehensive evaluation.

What are tricky Docker interview questions?

The purpose of tricky Docker interview questions is to assess the depth of your candidates’ understanding of Docker, which is essential for roles that require advanced Docker knowledge.

For context, Docker is an open-source platform used to automate the deployment, scaling, and management of applications by encapsulating them into containers.

While ordinary Docker interview questions cover fundamental principles, tricky questions get into the nitty-gritty, often including theory-based practical knowledge and scenario-based problems.

These questions help assess a candidate’s ability to handle complex tasks, troubleshoot under pressure, and apply their Docker knowledge for real-world problem-solving.

Why include tricky Docker questions in your interviews?

While a Docker test measures a candidate’s technical proficiency, including tricky questions in an interview lets you observe how candidates tackle complex problems and articulate their thought processes.

Challenging Docker interview questions can sort out the candidates who really understand Docker beyond textbook knowledge. Open-ended questions provide a broader perspective of a candidate’s skills, such as critical thinking, problem-solving, and how they communicate complex ideas.

Docker roles often mean working to tight deadlines in high-pressure situations. Specialists ensure applications function smoothly, receive timely updates, and maintain software stability. A complex Docker question with a time constraint can provide insight into how a candidate handles such circumstances.

Pairing these Docker interview questions with TestGorilla’s personality tests, such as the Motivation or Enneagram tests, gives you a holistic candidate profile and a well-rounded idea of how they will fit within your team.


15 tricky Docker interview questions and answers

For entry-level Docker roles, we recommend starting with our 50 Docker interview questions to ask developers. Below are 15 questions that go deeper, asking candidates to demonstrate their expertise.

Bear in mind that with open-ended questions, there’s often more than one “correct” answer, depending on the specifics of a situation or the project’s complexity. These questions give your candidates the opportunity to show how much they know.

1. How does Docker differ from traditional virtualization technologies like VMware or VirtualBox?

Answer:

Docker and traditional virtualization technologies like VMware or VirtualBox differ in their approach to isolation.

VirtualBox and VMware use a hypervisor to run multiple full-fledged virtual machines, each with its own operating system. This can be resource intensive, as every virtual machine needs its own CPU, disk, and memory resources.

In contrast, Docker uses containerization, an operating system-level virtualization method. Rather than creating a full operating system, Docker allows multiple applications to share the same OS kernel, effectively segregating them into separate containers.

Each container houses an application and its dependencies but shares the host OS. This makes Docker containers lightweight and fast, as they don’t need to boot an entire OS.

Docker’s approach leads to efficient resource use and quick startup times compared with traditional virtualization technologies.

2. Explain the main components of Docker’s architecture and the purpose of each. For example, images, containers, the Docker daemon, and the Docker CLI.

Answer:

Docker’s architecture has several core components.

Images are read-only templates used to create Docker containers. They include the application and all its dependencies but exclude the host-specific settings, such as mounted directories from the host OS and networking information.

Containers are the runnable instances of Docker images. You can start, stop, move, or delete a container using the Docker API or CLI. Each container is isolated from other containers and from the host system.

Docker daemon (dockerd) is a background service that manages Docker containers. It listens for Docker API requests and handles Docker functionalities like building, distributing, and running Docker containers.

Docker CLI (Command Line Interface) is the primary user interface for Docker. Through Docker CLI, users can interact with Docker daemons to manage Docker objects like images, containers, networks, and volumes.

3. What is the difference between Docker images and Docker containers?

Answer:

A Docker image is a lightweight, stand-alone, executable software package that includes everything needed to run a piece of software, including the code, a runtime, libraries, environment variables, and config files.

A Docker container is a runtime instance of a Docker image. When you run an image, Docker creates a container from that image. Containers are isolated from each other and bundle their own software, libraries, and system tools, enabling you to run your applications securely in any environment.

In short, a Docker image is the blueprint, and a Docker container is a running instance created from that blueprint.

4. Describe the Docker build process, including the role of Dockerfiles and caching mechanisms.

Answer:

The Docker build process involves the following steps:

Write a Dockerfile. This text document contains all the commands needed to assemble an image. It’s the blueprint for creating Docker images.

Run the docker build command, which takes the Dockerfile and builds an image based on the instructions specified.

Each instruction in the Dockerfile adds a step to the image. Instructions that change the filesystem, such as RUN, COPY, and ADD, create a new layer, while the others only record metadata. These layers are stacked on top of each other to create the final image.

Dockerfile instructions include specifying a base image (FROM), adding metadata such as the image’s maintainer (LABEL), running commands (RUN), adding files or directories into the image (ADD or COPY), documenting the ports the container listens on (EXPOSE), and choosing what command to run when the container is launched from the image (CMD or ENTRYPOINT).

Docker uses a caching mechanism to speed up the build process. When Docker builds an image, it caches the result of each instruction. If you build again and an instruction and the files it depends on haven’t changed, Docker reuses the cached layer rather than rebuilding it. Once one instruction’s cache is invalidated, every instruction after it is rebuilt, so placing rarely changing steps early in the Dockerfile makes rebuilds faster.
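As a rough illustration of how instruction order interacts with the build cache, the shell snippet below writes a small Dockerfile that copies the dependency manifests before the rest of the source code. The Node.js base image, the file names, and the cache-demo tag are assumptions picked for the example, not part of any particular project.

```shell
# Write an illustrative Dockerfile into a scratch directory.
# The base image, file names, and image tag are hypothetical.
demo_dir=$(mktemp -d)

cat > "$demo_dir/Dockerfile" <<'EOF'
FROM node:20-slim
WORKDIR /app

# Copy only the dependency manifests first, so the install step below
# stays cached until one of these two files changes.
COPY package.json package-lock.json ./
RUN npm ci

# Source code changes often. Copying it last means an edit here
# invalidates only this layer and the instructions after it.
COPY . .
CMD ["node", "server.js"]
EOF

echo "Dockerfile written to $demo_dir/Dockerfile"

# With Docker installed and a real project in that directory, running the
# build twice should show the second run reusing the cached layers:
#   docker build -t cache-demo "$demo_dir"
```

A strong candidate will usually explain why the two COPY instructions are split rather than merged into one.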

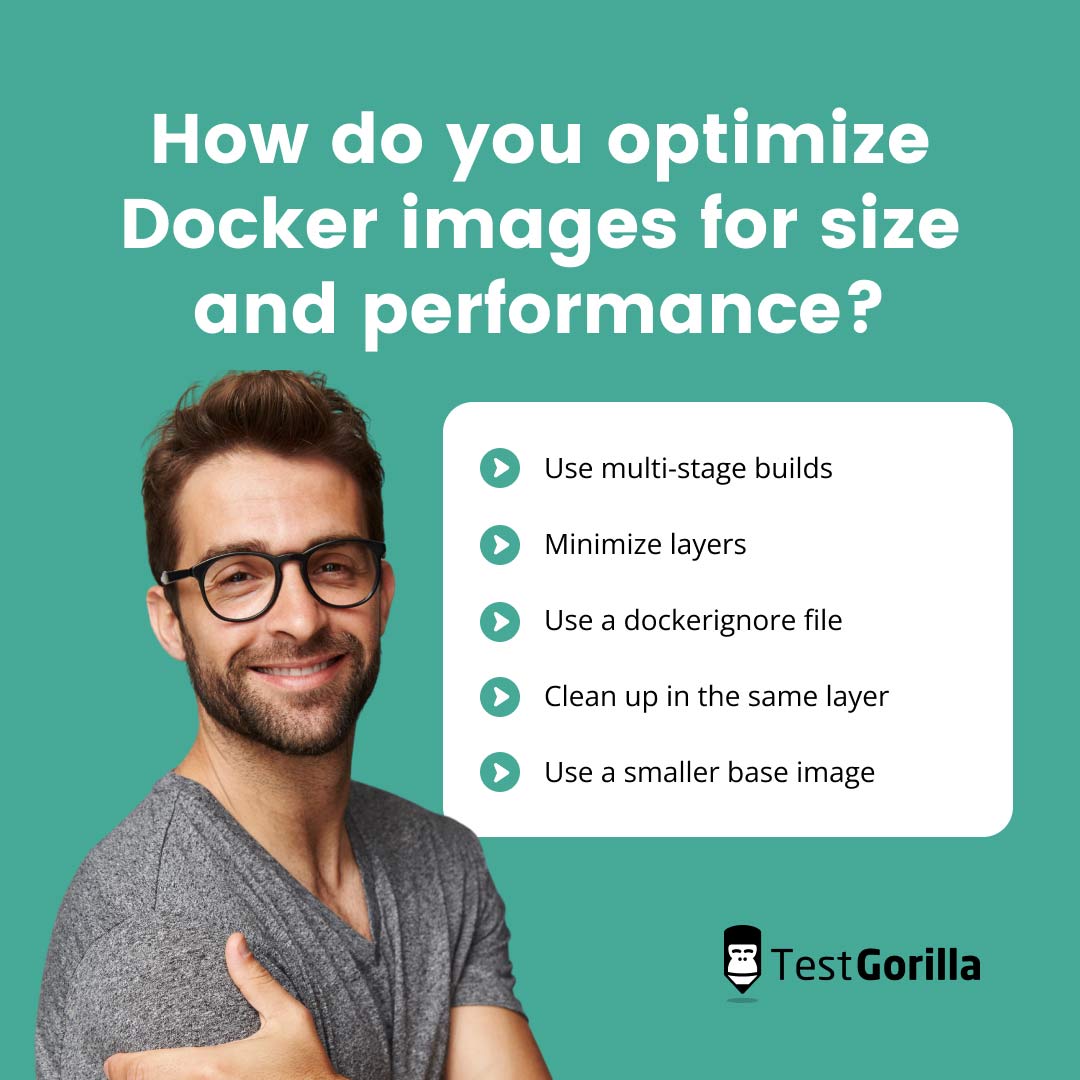

5. How do you optimize Docker images for size and performance?

Answer:

Optimizing Docker images for size and performance is essential for your applications to run and scale efficiently. There are several techniques you can use to optimize Docker images.

Use multi-stage builds: You can use one Docker image to build your application and a different, lighter image to run it. The final image doesn’t carry the baggage of the build tools and intermediate artifacts, reducing its size.

Minimize layers: Each RUN, COPY, or ADD instruction creates a new layer in your image. Combine related commands where possible, for example by chaining them with && in a single RUN instruction.

Use a .dockerignore file: This file lists the files and directories to exclude from the build context, preventing unnecessary or sensitive data from being sent to the builder or copied into the image.

Clean up in the same layer: Remove temporary files, such as package manager caches, in the same RUN instruction that created them. Files deleted in a later layer still take up space in the earlier one.

Use a smaller base image: Instead of using a full-fledged OS as your base image, consider smaller, more focused base images, such as Alpine or the slim variants of official images, to reduce your image size significantly.
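The snippet below is a minimal sketch of three of these techniques together: a .dockerignore file, a slim base image, and a single RUN instruction that installs packages and cleans up after itself. The ignored paths and the installed packages are placeholders chosen for illustration.

```shell
# Scratch directory for the example files (paths are hypothetical).
demo_dir=$(mktemp -d)

# Keep version-control data, local dependencies, logs, and secrets
# out of the build context.
cat > "$demo_dir/.dockerignore" <<'EOF'
.git
node_modules
*.log
.env
EOF

cat > "$demo_dir/Dockerfile" <<'EOF'
# A slim variant instead of the full distribution image.
FROM debian:12-slim

# Install and clean up in one RUN instruction, so the package index
# is never stored in a layer of the final image.
RUN apt-get update \
 && apt-get install -y --no-install-recommends curl ca-certificates \
 && rm -rf /var/lib/apt/lists/*
EOF

echo "Example files written to $demo_dir"
```

Comparing the output of docker image ls before and after changes like these is a simple way to confirm that they actually reduce the image size.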

6. Explain the concept of Docker networking and discuss the various network drivers available in Docker.

Answer:

Docker networking allows containers to communicate with each other and with other systems. Docker ships with several network drivers.

Bridge: This is the default network driver. If you don’t specify a network, Docker attaches the container to the default bridge network. This provides a private internal network on the host where containers are attached, which lets them communicate with each other.

Host: With the host network driver, the container’s network stack isn’t isolated from the Docker host. The container uses the host’s networking directly. This avoids the overhead of network address translation, but any port the container listens on is opened directly on the host.

Overlay: This driver is used in Docker Swarm to enable swarm services to communicate with each other. It’s ideal for distributed networks across multiple hosts.

Macvlan: This driver assigns a MAC address to each container, making it appear as a physical device on the network. The Docker daemon routes traffic to containers by their MAC addresses.

None: This driver disables all networking for the container.
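To make the drivers more concrete, here is a small sketch of the bridge and none drivers using the Docker CLI. The function name network_demo, the network name demo-net, and the container name web are all hypothetical, and the commands assume a running Docker daemon.

```shell
# Hypothetical walkthrough; nothing runs until you call network_demo.
network_demo() {
  # Create a user-defined bridge network.
  docker network create --driver bridge demo-net

  # Start a web server attached to that network.
  docker run -d --name web --network demo-net nginx:stable

  # A second container on the same network can reach it by container name.
  docker run --rm --network demo-net busybox wget -qO- http://web

  # With the none driver, the container should list only a loopback interface.
  docker run --rm --network none busybox ip addr

  # Clean up the example resources.
  docker rm -f web
  docker network rm demo-net
}

# Usage (requires Docker): network_demo
```

Name-based lookup between containers works on user-defined bridge networks, which is one reason they are generally preferred over the default bridge.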

7. What are Docker volumes, and how do they enable data persistence across containers?

Answer:

Docker volumes are the preferred mechanism for persisting data generated and used by Docker containers.

By default, data written inside a container lives in that container’s writable layer. When the container is deleted, all the changes made to its file system are lost. Docker volumes solve this problem.

With Docker volumes, the data lives outside the containers and is stored on the host system. This makes the data persistent and independent of the life cycle of containers. The data in Docker volumes can be safely shared among multiple containers. Even if a container is deleted, the volume and its data will still exist.
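The following sketch shows the idea with a named volume shared by two short-lived containers. The function name volume_demo, the volume name app-data, and the file path are assumptions made for the example, and the commands need a running Docker daemon.

```shell
# Hypothetical walkthrough; nothing runs until you call volume_demo.
volume_demo() {
  # Create a named volume managed by Docker.
  docker volume create app-data

  # Write a file into the volume from a container that is removed on exit.
  docker run --rm -v app-data:/data busybox sh -c 'echo hello > /data/greeting'

  # A brand-new container mounting the same volume can still read the file.
  docker run --rm -v app-data:/data busybox cat /data/greeting

  # Remove the volume once it is no longer needed.
  docker volume rm app-data
}

# Usage (requires Docker): volume_demo
```

Because the first container is removed before the second one starts, the file can only have survived in the volume, not in either container’s writable layer.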

8. How can you secure Docker containers and protect them from potential vulnerabilities?

Answer:

Multiple best practices are required to ensure the security of Docker containers.

Use trusted and minimal base images to reduce vulnerabilities.

Always pull Docker images from trusted sources, like the Docker Official Images on Docker Hub.

Apply the principle of least privilege. Run containers with minimal permissions.

Avoid running containers or services as root when possible.

Keep the Docker environment up to date.

Secure communication between containers and outside networks. Use TLS for encryption if necessary.

Implement security scanning and monitoring tools for early vulnerability detection and prompt response.

Conduct regular security audits and reviews.
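Several of these practices map directly onto docker run options. The sketch below wraps them in a hypothetical helper named run_hardened. The user ID 1000:1000 and the /tmp tmpfs mount are assumptions, and whether a given image works under these restrictions depends on the application.

```shell
# Hypothetical helper; the image name is passed in by the caller.
run_hardened() {
  image=$1
  # --user:         run the process as an unprivileged user instead of root
  # --read-only:    mount the container's root filesystem read-only
  # --tmpfs:        provide a writable scratch area in memory
  # --security-opt: stop processes from gaining new privileges
  docker run -d \
    --user 1000:1000 \
    --read-only \
    --tmpfs /tmp \
    --security-opt no-new-privileges \
    "$image"
}

# Usage (requires Docker): run_hardened example/app:1.0
```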

9. What is Docker Compose, and how does it simplify multi-container application deployments?

Answer:

Docker Compose is a tool that simplifies managing and deploying multi-container Docker applications.

With Compose, you can define your multi-container application using a YAML file where you specify services, networks, and volumes. Each service corresponds to a container. You can specify the Docker image, environment variables, network, and volume it should use.

The power of Docker Compose comes when deploying a multi-container application. Instead of running each container separately and manually connecting them, you just need to run docker compose up (or docker-compose up with the older standalone tool).

This command will create the networks, volumes, and services as defined in your Compose file, dramatically simplifying the process and reducing the potential for human error.
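As an illustration, the snippet below writes a small Compose file with two services, one network, and one volume. The service names, images, port mapping, and password are placeholders for the example, not recommendations.

```shell
# Scratch directory for the example Compose file (hypothetical project).
demo_dir=$(mktemp -d)

cat > "$demo_dir/compose.yaml" <<'EOF'
services:
  web:
    image: nginx:stable
    ports:
      - "8080:80"
    depends_on:
      - db
    networks:
      - backend
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: example
    volumes:
      - db-data:/var/lib/postgresql/data
    networks:
      - backend

networks:
  backend:

volumes:
  db-data:
EOF

echo "Compose file written to $demo_dir/compose.yaml"

# With Docker installed, one command creates the network, the volume,
# and both containers:
#   docker compose -f "$demo_dir/compose.yaml" up -d
```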

10. Describe the role of Docker Swarm and Kubernetes in managing container orchestration.

Answer:

Docker Swarm and Kubernetes are two popular container orchestration tools. They manage the lifecycle of containers and provide features for scaling, fail-over, deployment patterns, and more.

Docker Swarm is a native clustering and scheduling tool for Docker. It turns a pool of Docker hosts into a single, virtual Docker host, enabling you to create a swarm of Docker nodes and deploy services to them. It provides features like service discovery, load balancing, secure communication between nodes, and desired state management.

Kubernetes, often abbreviated to K8s, is a container orchestration platform maintained by the Cloud Native Computing Foundation (CNCF). It’s more feature-rich than Docker Swarm, providing advanced features like auto-scaling, rollouts and rollbacks, batch execution, secrets and configuration management, and storage orchestration.

While Docker Swarm is simpler to set up and integrates smoothly with Docker, Kubernetes has a larger community and is more versatile. It runs containers through any runtime that implements its Container Runtime Interface, such as containerd or CRI-O.

11. Explain the differences between a Docker registry and a Docker repository.

Answer:

A Docker registry is a storage and distribution system for named Docker images. Docker Hub and Google Container Registry are examples of hosted registries, but you can also run private ones.

A Docker repository is a collection of different Docker images with the same name, distinguished by different tags. For example, in a repository called “debian”, you might have the tags 10, 11, and 12, each corresponding to a different image of the Debian operating system.
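One way to see the distinction is to take apart an image reference. The helper below, split_ref, is a simplified sketch written for this article. It assumes a fully qualified reference of the form REGISTRY/REPOSITORY:TAG and ignores the defaults and digest syntax that Docker itself handles.

```shell
# Split a fully qualified image reference into its three parts.
# Assumes the registry, repository, and tag are all present.
split_ref() {
  ref=$1
  registry=${ref%%/*}     # everything before the first slash
  rest=${ref#*/}          # everything after the first slash
  repository=${rest%:*}   # everything before the last colon
  tag=${rest##*:}         # everything after the last colon
  echo "registry=$registry repository=$repository tag=$tag"
}

split_ref "docker.io/library/debian:12"
# prints: registry=docker.io repository=library/debian tag=12
```

Here docker.io is the registry, library/debian is the repository, and 12 is the tag that selects one image within that repository.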

12. Describe the steps involved in upgrading or rolling back a Docker container to a different version.

Answer:

Upgrading or rolling back a Docker container involves replacing the existing container with a new one running a different image version. Here are the steps to do it.

Pull the new version of the image. Use docker pull to download the new version of the image from the Docker registry.

Stop and remove the existing container. Use docker stop to stop the running container and docker rm to remove it. You should store your persistent data in volumes or bind mounts so you don’t lose it when the container is removed.

Run the new container. Use docker run to start a new container from the new image version. Connect it to the network and volumes. Set the environment variables.

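Orchestrators like Docker Swarm and Kubernetes provide rolling updates that replace containers gradually, which lets you upgrade with little or no downtime. Docker Compose can automate the manual steps above by recreating the services whose configuration or image has changed when you run docker compose up -d.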
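The manual steps can be sketched as a small shell function. The name upgrade_container, the app-data volume, and the port mapping are hypothetical, and a real deployment would reuse whatever options the original container was started with.

```shell
# Hypothetical helper: replace a container with one from a different image tag.
upgrade_container() {
  name=$1
  new_image=$2

  # 1. Pull the target version; stop here if the pull fails.
  docker pull "$new_image" || return 1

  # 2. Stop and remove the existing container. Data in the named
  #    volume survives because it lives outside the container.
  docker stop "$name"
  docker rm "$name"

  # 3. Start a new container from the target image with the same
  #    volume and port settings.
  docker run -d --name "$name" -v app-data:/data -p 8080:80 "$new_image"
}

# Upgrade (requires Docker):  upgrade_container web example/web:2.0
# Roll back the same way:     upgrade_container web example/web:1.9
```

Rolling back is the same procedure with an older tag, which is why pinning explicit version tags rather than latest makes rollbacks predictable.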

13. What are the benefits and use cases of using multi-stage builds in Docker?

Answer:

A multi-stage build in Docker involves multiple Dockerfile FROM instructions, each starting a new stage. Intermediate stages can produce artifacts like binaries or compiled code, which can be copied into later stages. Intermediate layers are left behind, reducing the final image size.

Multi-stage builds can help you create lean, secure, and efficient Docker images.

Use cases for multi-stage builds include:

Minimizing image size: You can use a large image with all the necessary build tools in the first stage to compile your application and then copy the compiled application into a smaller base image in a later stage.

Improving security: The final image can be kept minimal, reducing the attack surface. It doesn’t need to include the build tools and source code used in earlier stages.

Separating build-time and runtime dependencies: Different stages can have different dependencies, preventing unnecessary libraries from ending up in your final image.

Speeding up builds: If a stage doesn’t change often, Docker can use cached layers for that stage, speeding up the build process.
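Here is a minimal sketch of a two-stage build, written out from the shell. It assumes a small Go program in the build context. The image tags, paths, and the stage name build are illustrative choices.

```shell
# Scratch directory for the example Dockerfile (hypothetical project).
demo_dir=$(mktemp -d)

cat > "$demo_dir/Dockerfile" <<'EOF'
# Stage 1: full toolchain image, used only to compile the program.
FROM golang:1.22 AS build
WORKDIR /src
COPY . .
RUN CGO_ENABLED=0 go build -o /out/app .

# Stage 2: small runtime image that receives only the compiled binary.
FROM alpine:3.20
COPY --from=build /out/app /usr/local/bin/app
USER nobody
ENTRYPOINT ["/usr/local/bin/app"]
EOF

echo "Dockerfile written to $demo_dir/Dockerfile"

# With Docker installed and Go source in that directory:
#   docker build -t multistage-demo "$demo_dir"
```

Only the second stage ends up in the tagged image, so the Go toolchain and the source code are left out of what you ship.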

14. Discuss the impact of using the --privileged flag in Docker and why it should be used with caution.

Answer:

The --privileged flag in Docker is a powerful option that gives a container almost the same privileges as the host machine. This means the container can access and manipulate the host’s system resources, device drivers, and kernel modules, which could expose the host to security risks.

Avoid the --privileged flag wherever possible, because it bypasses the security measures provided by Docker’s containerization model. It essentially defeats the purpose of isolating the application’s process and environment from the host system.

Instead of using the --privileged flag, a better practice is to use Linux capabilities, seccomp profiles, or AppArmor profiles to grant necessary privileges to a container on a granular level.
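As a sketch of the granular approach, the hypothetical helper below drops every capability and adds back only the one this example assumes the application needs, which is binding to ports below 1024. The right capability set depends entirely on the workload.

```shell
# Hypothetical helper; the image name is passed in by the caller.
run_with_min_caps() {
  image=$1
  # Start from no capabilities at all, then grant a single one.
  docker run -d \
    --cap-drop ALL \
    --cap-add NET_BIND_SERVICE \
    "$image"
}

# Usage (requires Docker): run_with_min_caps example/web:1.0
```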

15. How does Docker handle resource limitations and isolation, such as CPU, memory, and I/O?

Answer:

Docker relies on Linux control groups (cgroups) to limit and isolate the resources a container can use, such as CPU, memory, and I/O.

CPU: Docker enables you to limit CPU usage by assigning a relative number of CPU shares to a container, capping how much CPU time it can use, or specifying which CPUs or CPU cores it can run on. You can do this with the --cpu-shares, --cpus (or --cpu-period together with --cpu-quota), and --cpuset-cpus options.

Memory: You can limit a container’s memory usage with the --memory option, which takes a memory size in bytes, kilobytes, megabytes, or gigabytes. Docker also enables setting a memory reservation limit with the --memory-reservation option.

I/O: Docker uses the I/O scheduler of the host operating system to manage I/O for containers. It also supports block I/O weight and limits with the --blkio-weight, --device-read-bps, and --device-write-bps options.

These resource isolation and limitation mechanisms help ensure that each container uses only its fair share of system resources and prevent one container from hogging resources and affecting the performance of others.
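The options above can be combined in a single docker run command. The helper name run_limited and the specific values below are arbitrary examples, and some I/O options only take effect with particular host configurations.

```shell
# Hypothetical helper; the image name is passed in by the caller.
run_limited() {
  image=$1
  # --cpus:               cap total CPU time at 1.5 cores
  # --cpuset-cpus:        allow scheduling only on cores 0 and 1
  # --memory:             hard memory limit
  # --memory-reservation: soft limit applied under memory pressure
  # --blkio-weight:       relative block I/O weight (10 to 1000)
  docker run -d \
    --cpus 1.5 \
    --cpuset-cpus 0,1 \
    --memory 512m \
    --memory-reservation 256m \
    --blkio-weight 500 \
    "$image"
}

# Usage (requires Docker): run_limited example/app:1.0
# Observe live usage against these limits with: docker stats
```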

How to assess Docker specialists with tricky interview questions

It’s vital to assess Docker specialists from multiple angles during the hiring process. Deep technical understanding is important, but you must also consider each candidate’s problem-solving abilities, communication skills, and cultural fit for team collaboration.

Pairing tricky Docker questions with behavioral interview questions provides an excellent overview of your candidates. TestGorilla offers one-way video interviews as a convenient prescreening tool to incorporate into your hiring campaign.

Interviews should be part of a multi-measure candidate testing process. TestGorilla’s extensive library offers a Docker test to assess candidates’ Docker knowledge, as well as personality and motivation tests like the 16-Types and DISC tests, which provide insights into a candidate’s behavioral traits.

Combining these tests into a customized assessment tailored to your company’s unique needs gives you a complete view of a candidate’s skills, helping you make more informed hiring decisions.

How TestGorilla can help you find the best Docker specialists

When hiring Docker specialists, it’s crucial to assess their technical skills and behavioral traits. The tricky Docker interview questions listed above are excellent for evaluating technical knowledge, but you should use them as part of a comprehensive assessment process.

By leveraging TestGorilla’s vast array of tests, including the Docker test, you can thoroughly understand each candidate’s technical skills, behavioral traits, and cultural fit.

In addition, TestGorilla’s customizable assessments, detailed reports, and one-way interviews provide a well-rounded view of each candidate for informed hiring decisions.

TestGorilla’s platform is intuitive and easy to use. Check out our product tour or schedule a free 30-minute live demo.

If you prefer to explore the platform at your own pace, you can sign up for TestGorilla’s Free plan and start creating your own customized Docker assessments today.
