An expert’s guide to market research analyst interview questions

One of the best interview questions I’ve been asked centered on a pizza-parlor scenario: What would I need to know about the local area and clientele to make smart business decisions? It was playful but spot-on for that strategy consulting role, which was built on market research analysis.

Market research analysts need that same mix of technical, commercial, and communication skills – so generic interview questions like “Tell me about a challenging project” won’t cut it.

Combining my professional experience and insights from experts, I’m here to share strong market research analyst interview questions – and show you how assessments like TestGorilla’s Market Research test can make your interviews more powerful.

What does a market research analyst actually do?

I’ve noticed that lots of online content conflates market research analysis with marketing. But marketing is just one of many use cases for this role. Market research analysts can work with and across marketing, sales, product development, business intelligence, finance, commercial, and more.

The job has three key components: gathering, interpreting, and communicating data on a specific market. An analyst might, for example, survey consumer retail preferences in a new geographical market or conduct competitor pricing analysis for an upcoming product launch.

Market research analysts conduct and analyze the results of:

Primary research (e.g., from surveys, questionnaires, interviews, and focus groups).

Secondary research (e.g., from published reports, studies, and publicly or privately available data).

The aim of this work is to reveal objective insights that support top-level business goals.

Market research analyst interview questions: What the experts ask

Now, there’s no golden-egg basket of questions you must ask when hiring market research analysts. Rather, certain interview angles help reveal critical skills and traits.

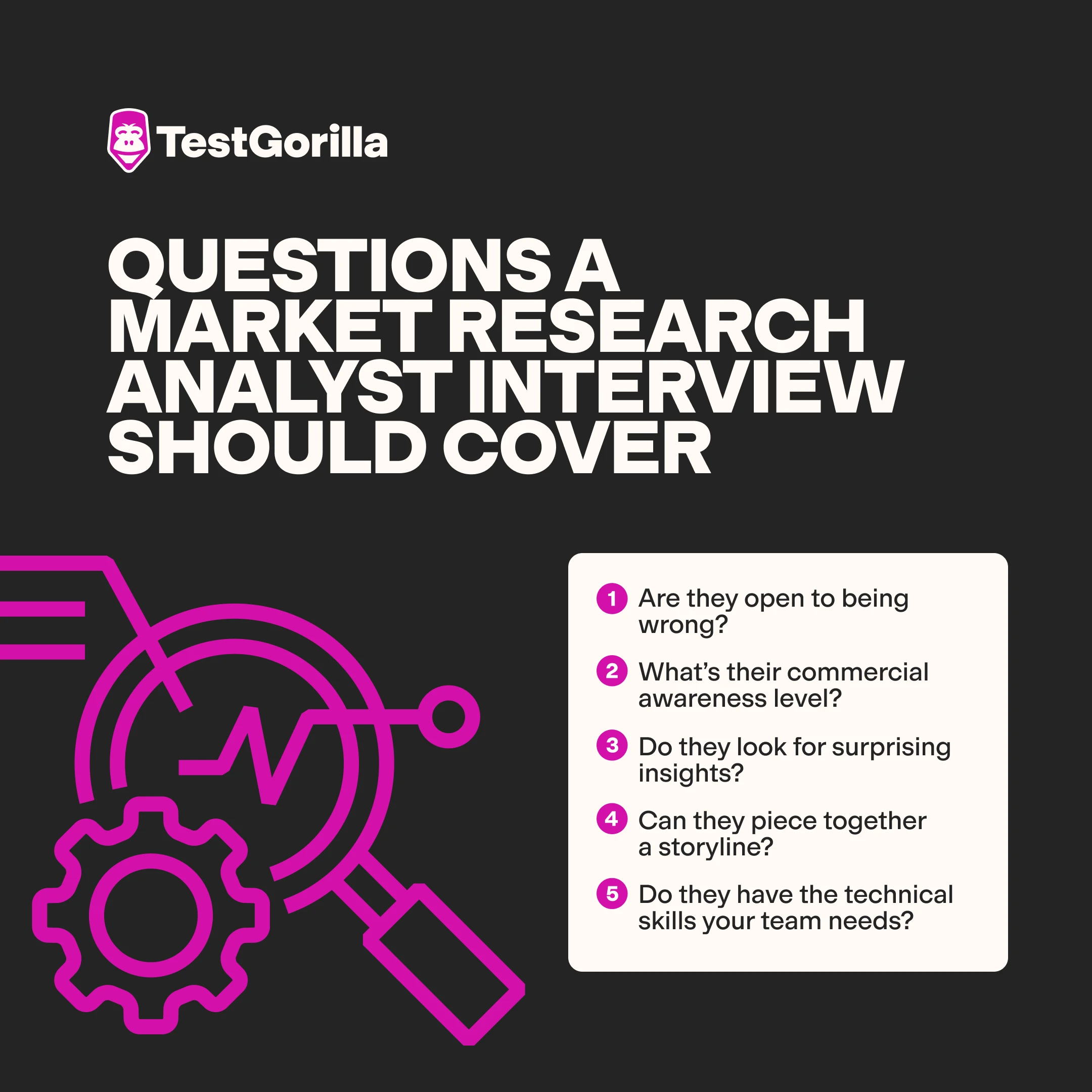

Broadly, your questions should aim to uncover:

Are they open to being wrong?

What’s their commercial awareness level?

Do they look for surprising insights?

Can they piece together a storyline?

Do they have the technical skills your team needs?

Are they open to being wrong?

Working in consultancy, I learned that you have to go into market research with hypotheses, not preconceptions. Here’s the difference:

A hypothesis is something you test – e.g., “students prefer Thursday lectures.” You collect and objectively assess data to see whether you’re wrong or right.

A preconception is something you assume – e.g., “students would rather have no lectures.” You might stick to that without ever testing it, or even after data proves it wrong.

Top research analysts must be open to being wrong – or at least uncertain – because that mindset keeps research findings accurate and actionable.

Analysts should also know how to handle confusing or unexpected results. If certain findings challenge their own or their team’s assumptions, skilled analysts double-check data collection methods for accuracy. They may also ask, “Why is that?” and dig into the data to identify root causes.

As Stephen Huber, President of Home Care Providers, tells TestGorilla, “Researchers who are unable or unwilling to entertain alternative explanations of challenging findings demonstrate an inflexibility that undermines confidence in the research.”

Huber tests candidates’ flexible thinking by presenting them with “conflicting data sets from [real] client demographics.” Strong candidates ask how the data was collected and “point out potential sampling biases or measurement errors that may account for differences between alternative data sources.” Underqualified candidates, meanwhile, tend to get defensive about their interpretations.

Huber isn’t alone. Jin Grey, CEO of Jin Grey SEO Ebooks, has a favorite interview question that gets to the same idea: “Can you think of an example from your previous work where data completely changed your first perception of either a campaign or audience insight?”

Great answers compare the original and revised insights and explain how candidates acted on that knowledge, such as by refining a product or campaign strategy.

Questions to ask

What do you think is the best way to analyze a data set that has gaps and inconsistencies?

Tell me about a time when your research findings challenged a pre-existing assumption about a market.

Imagine I give you two sets of survey responses from the same year, and the findings differ across several categories. How do you reconcile that?
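When listening to answers to that last question, it helps to know one check a strong candidate might reach for first: is the gap between the two surveys bigger than sampling noise alone would explain? The sketch below is a minimal illustration, assuming both surveys are simple random samples and using made-up response counts. The function name and figures are hypothetical, and it uses a standard two-proportion z-test rather than any method described by the experts quoted here.

```python
import math


def two_proportion_z_test(yes_a, n_a, yes_b, n_b):
    """Return (z, two-sided p-value) for the gap between two survey proportions."""
    p_a = yes_a / n_a
    p_b = yes_b / n_b
    # Pooled share of "yes" answers, assuming no real difference between surveys
    pooled = (yes_a + yes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value under the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: respondents who said they prefer buying online
z, p = two_proportion_z_test(yes_a=420, n_a=1000, yes_b=465, n_b=1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value only says the gap is unlikely to be chance. It doesn’t say why the surveys differ, which is where the questions about collection methods and sampling bias come in.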

What’s their commercial awareness level?

For Jason Vaught, SmashBrand’s Director of Content and Marketing, the biggest red flag in market research analysts is “speaking in theory, rather than providing practical business knowledge.”

As someone who’s analyzed purchasing patterns and factors impacting companies’ market share, I agree. Market researchers’ commercial acumen impacts how they collect, interpret, and present data. Or, as Vaught puts it, this skill takes market research from “academic” to insights that “make money.”

To test whether candidates bridge this gap, link a market research scenario to real-world outcomes using case studies or situational interview questions.

For example, Vaught puts interviewees in scenarios in which their research must support product development in packaging design. For him, great answers include commercial considerations such as “distribution, location of the stores, competition, and profit rates.”

Here’s another example from Steve Hulmes, analyst coach and owner of Sophic: A market researcher who stops at “pure data analysis” might show that “sales for Product X have dropped by 10% over the last three months.” But a commercially aware analyst can correlate this revenue dip with “external factors such as new competitors entering the market” and suggest “potential responses, such as adjusting marketing strategies.”

Questions to ask

Tell me about a time when you had to persuade a team member to take a more commercial approach to data gathering and analysis.

Say we wanted to investigate why sales for the 18–25-year-old segment of our customer base dipped. How would you design your research around that objective?

Imagine we own a pizzeria and want to open a second location. Here are the sales figures for the first restaurant. [Present the data.] What do we need to know and estimate before we open a second venue?

Do they look for surprising insights?

Trish Van Veen, founder of Leg Up Consulting, shares a go-to interview question with TestGorilla – and it’s one I love:

“What is an example of an insight or outcome from a recent study that was surprising to you? And how did you come to uncover this insight?”

This question helps you spot candidates who think creatively, question common assumptions, and strive to understand why a result happened and what it means. As Van Veen puts it, they “push beyond the obvious” toward “beliefs that were not already known.”

For example, a candidate may describe discovering that consumers are spending less on retail because sustainability has become a higher priority than cost. How they tell you they uncovered that insight can reveal soft and technical skills. In this example, a combination of survey- and interview-based methodologies would suggest a thorough analysis.

In addition to being surprising, analysts’ insights should also be actionable – a business should be able to make strategic changes based on them. Dan Roche, Chief Marketing Officer at Workbooks, describes effective research as a “trifecta”: It should provide input for product development, content for sales enablement, and data for marketing plans.

Questions to ask

How do you make sure you draw clear, actionable insights from customer interviews?

Tell me about a time when a critical insight from your research led to a real-world change in your company.

Talk me through a market analysis project where you had to spot and analyze patterns in raw data. What was your process, and what did you discover?

Can they piece together a storyline?

In my time as a strategy consultant, much of my research work was client-facing. My mentor used to tell me that clients don’t just pay for the data – they pay for your recommendations. And to sell your recommendations, you need to tell a story.

Van Veen agrees. She says the best storytelling is “strategic, action-oriented, [and] relevant.” This means analysts need to outline the problem, the audience’s needs, the potential impact, and the recommended action when presenting findings.

On the flip side, Van Veen notes that presenting research findings “in the same form from which they were collected” – e.g., saying, “1,000 customers were surveyed, and 15% said they wouldn’t recommend the product” – shows “a lack of strategic or commercial awareness.” An alternative might be to say, “Most customers love our product, but we can do more to impress a pickier minority.”
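Before a candidate turns a raw figure like that into a headline, it’s reasonable to expect them to know how much uncertainty sits behind it. Here is a rough sketch using the numbers from the example above, assuming a simple random sample and a normal approximation at 95% confidence. The function name is hypothetical.

```python
import math


def proportion_interval(share, sample_size, z=1.96):
    """Approximate confidence interval for a survey proportion (normal approximation)."""
    margin = z * math.sqrt(share * (1 - share) / sample_size)
    return share - margin, share + margin


# 15% of 1,000 surveyed customers said they wouldn't recommend the product
low, high = proportion_interval(share=0.15, sample_size=1000)
print(f"Would not recommend: between {low:.1%} and {high:.1%}")
```

With a sample of 1,000, the margin is about two percentage points either side of 15%, so the “pickier minority” framing holds across the whole plausible range.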

To assess these storytelling skills in interviews, use scenario-based or case study questions. You can give candidates a data set before or during the interview, allow some time to prepare, and ask them to “sell” their data-based recommendations.

The best answers will include comparisons or anecdotes, outcome-focused reasoning, and engaging delivery, including gestures, eye contact, and visuals like charts and diagrams.

Questions to ask

Talk me through a time when you influenced a senior decision-maker using market data.

Here’s a dataset containing customer demographics and sales data for a home electronics store. [Present the data.] Build a story and business recommendations based on this data.

Imagine our sales director doesn’t want to change their approach based on your market analysis. What would you do?

Do they have the technical skills your team needs?

As Vaught explains to TestGorilla, “good design and marketing decisions are made on sound data.” In other words, the strength of your business outcomes depends on your analysts’ technical ability to collect and interpret that data. So, dedicate part of your interview to those technical “nuts and bolts.”

I recommend asking technical questions that relate to your current research or commercial challenges. You want a research analyst who can swoop in with experience that fits those needs – for example, running focus groups or interviews for a business-to-business (B2B) customer insights project.

Once you’ve identified your priorities, use these key areas to guide your questions:

Research design: Defining objectives and steps, choosing appropriate methods, and managing ethics and collaboration.

Data collection: Writing survey or interview questions, gathering secondary data, and ensuring data quality and consistency.

Data analysis: Using quantitative and qualitative models, identifying trends and actionable insights, and cleaning and correcting data.

Data visualization: Presenting findings through tables, charts, graphs, diagrams, or infographics.

Software skills: Working confidently with tools like SPSS, Stata, and Microsoft Excel.

Data collection is a favorite of Workbooks Chief Marketing Officer Dan Roche, who tests candidates’ ability to write clear survey questions. “This ensures the answers are robust, as they are true to the intention of the responder,” he tells me. Roche also asks candidates to name the “first three things they would do if they joined” to check what “they value most and are likely to be the best at.”

Meanwhile, Huber takes a more hands-on approach. For his healthcare services company, he gives candidates “incomplete client satisfaction surveys” and asks them to “identify missing variables.” Huber notes that top candidates will “ask questions about whether seasonal issues, changes in personnel, or events outside of the survey period may have driven answers.”

Questions to ask

Walk me through your process for designing a reliable and unbiased survey question.

Tell me about a time when you used an analytical model to identify correlations or causations. What challenges did you have to overcome?

Describe a time when your quantitative findings contradicted qualitative ones. How did you reconcile them?

How would you explain the concepts of data reliability and validity to a non-technical colleague?

How talent assessments take your interviews further

Hiring great market research analysts is expensive, and so is interviewing them. That’s why it’s so important to make every conversation count.

The right interview questions will help you identify strong talent, but combining them with skills-based talent assessments makes your interviews even more effective.

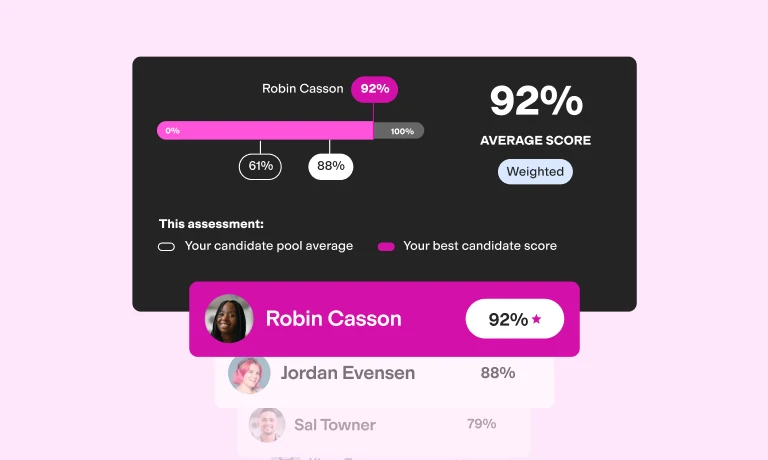

Include skills assessments early in your hiring process, before the interview stage. They help you identify candidates with the right abilities (like all the ones listed in your market research analyst job description), so your interviews focus only on those who’ve proven they can do the job.

TestGorilla makes this process straightforward. Its Market Research test measures essential skills, including research planning, data collection, and the use of quantitative and qualitative research methodologies. Each candidate is ranked by percentile, and you can review detailed results to see how they performed on individual answers.

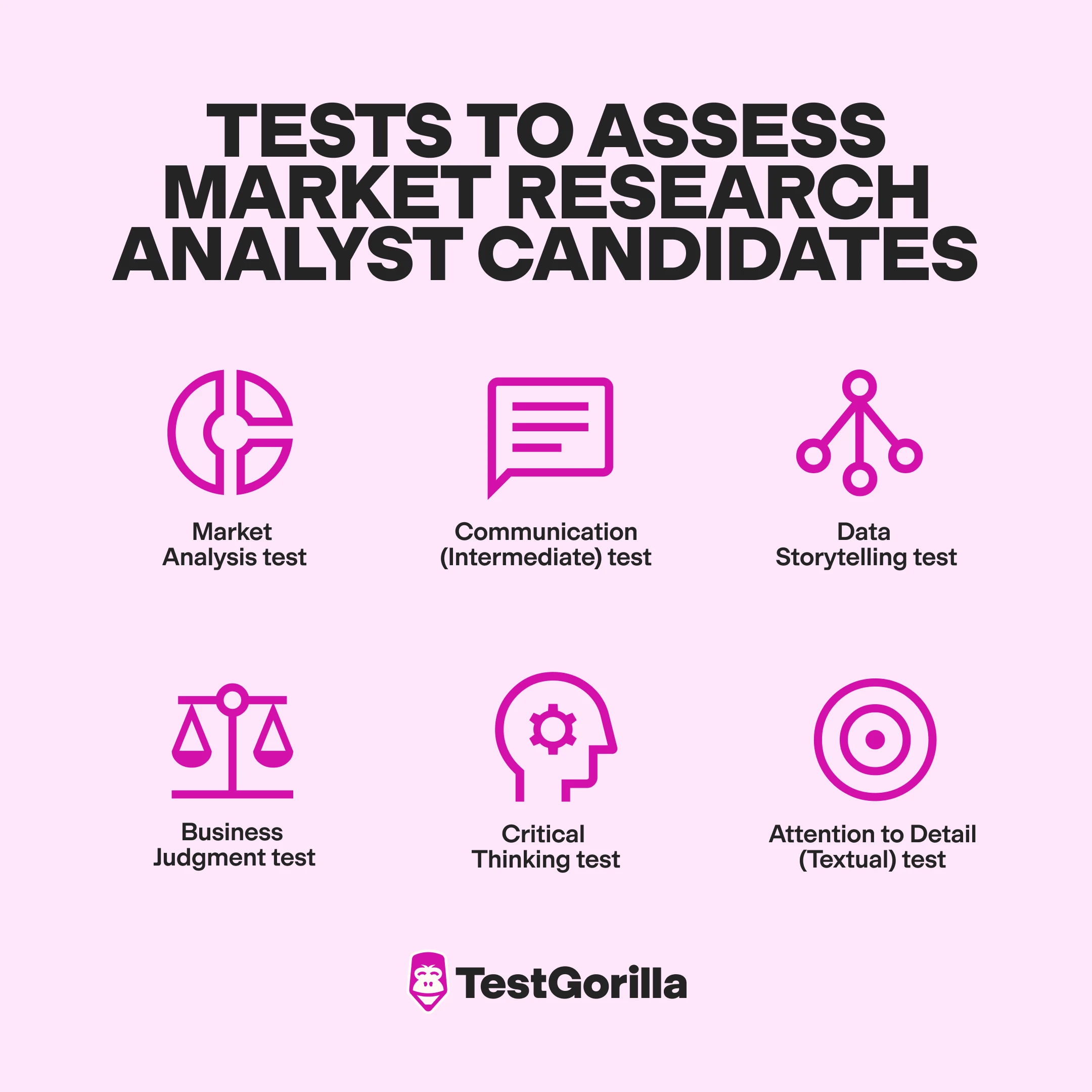

You can also pair the Market Research test with others that measure complementary skills for a multi-measure testing experience. Here are some options for your market research analyst candidates:

Communication (Intermediate Level) test

Data Storytelling test

Business Judgment test

Critical Thinking test

Want to learn more about how TestGorilla can help you find the best market research analysts for your team? Sign up for a free demo, or get started ASAP with your free plan.

Contributors

Jin Grey, Jin Grey SEO Ebooks, CEO

Stephen Huber, Home Care Providers, President

Steve Hulmes, Sophic, Owner

Dan Roche, Workbooks, Chief Marketing Officer

Trish Van Veen, Leg Up Consulting, Founder

Jason Vaught, SmashBrand, Director of Content & Marketing
