47 Databricks interview questions to ask coding experts

Databricks provides data engineering tools that help programmers and developers manage data processing and workflow scheduling.

These tools also support machine learning workflows, so software experts need experience working in a web-based interface. You can find these professionals by giving them programming tests and asking engaging interview questions.

You can use the Working with Data test to determine whether candidates have the right skills and knowledge to handle large amounts of data using data engineering tools. This data-driven method also ensures you only interview expert candidates who know how to use commands properly.

So, do you want to hire a professional for your team? We have you covered – discover more than 45 Databricks interview questions and sample answers to help you hire a coding expert with plenty of experience.

Table of contents

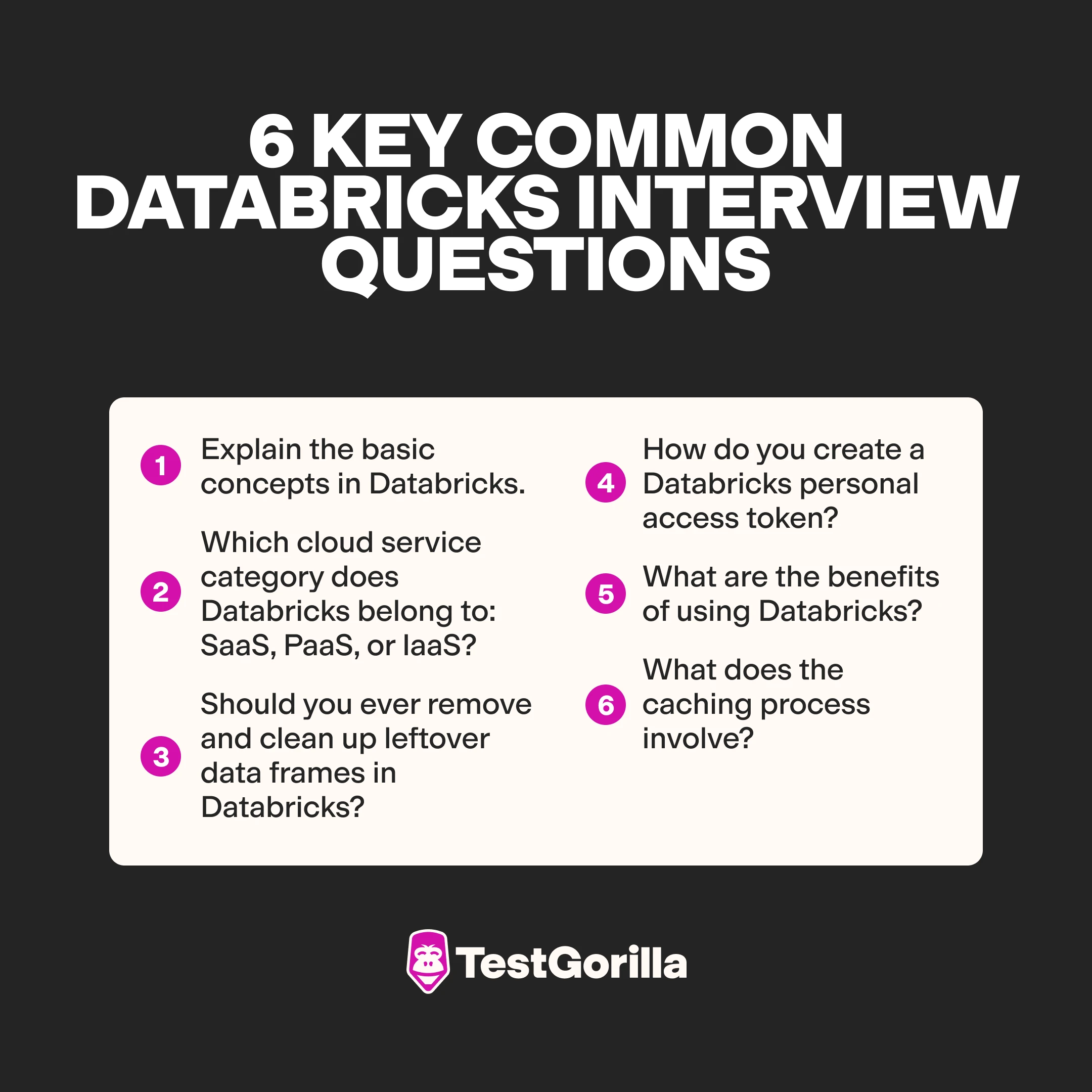

- 20 common Databricks interview questions to ask data engineering professionals

- 6 sample answers to key common Databricks interview questions

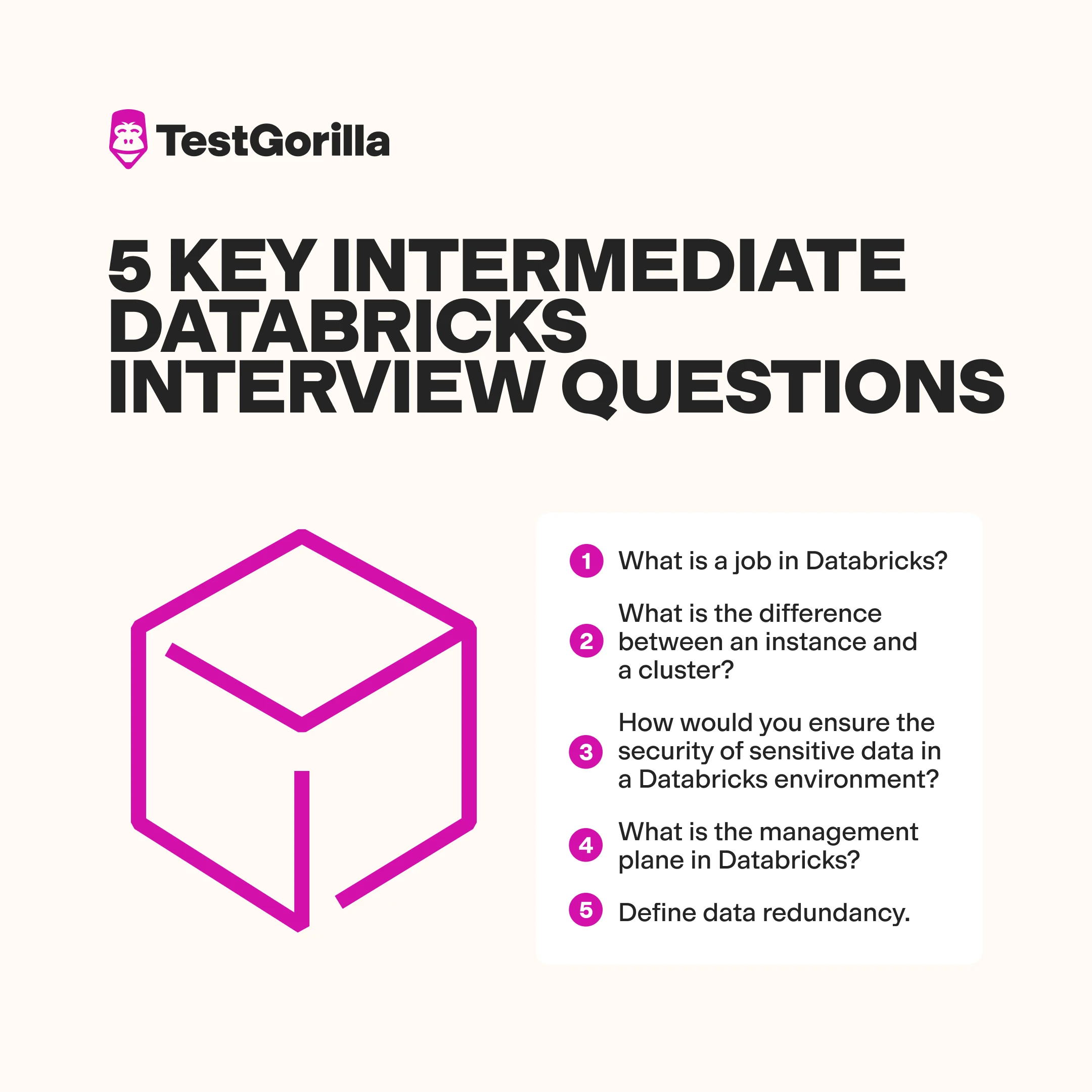

- 12 intermediate Databricks interview questions to ask your candidates

- 5 sample answers to key intermediate Databricks interview questions

- 15 challenging Databricks interview questions to ask experienced coders

- 5 sample answers to key challenging Databricks interview questions

- When should you use Databricks interview questions in your hiring process?

- Hire a coding expert using our skills tests and Databricks interview questions

20 common Databricks interview questions to ask data engineering professionals

Check out these 20 common Databricks interview questions to help you hire a data engineering professional for your company.

1. Explain the basic concepts in Databricks.

2. What does the caching process involve?

3. What are the different types of caching?

4. Should you ever remove and clean up leftover data frames in Databricks?

5. How do you create a Databricks personal access token?

6. What steps should you take to revoke a personal access token?

7. What are the benefits of using Databricks?

8. Can you use Databricks along with Azure Notebooks?

9. Do you need to store an action’s outcome in a different variable?

10. What is autoscaling?

11. Can you run Databricks on private cloud infrastructure?

12. What are some issues you can face in Databricks?

13. Why is it necessary for us to use the DBU framework?

14. Explain what workspaces are in Databricks.

15. Is it possible to manage Databricks using PowerShell?

16. What is Kafka for?

17. What is a Delta table?

18. Which cloud service category does Databricks belong to: SaaS, PaaS, or IaaS?

19. Explain the differences between a control plane and a data plane.

20. What are widgets used for in Databricks?

6 sample answers to key common Databricks interview questions

To quickly assess your candidates’ responses, review these sample answers to common Databricks interview questions.

1. Explain the basic concepts in Databricks.

Databricks is a set of cloud-based data engineering tools that help process and convert large amounts of information. Programmers and developers can use these tools to enhance machine learning or stream data analytics.

With spending on cloud services expected to grow by 23% in 2023, candidates must understand what Databricks is and how it works.

Below are some of the main concepts in Databricks:

- Accounts and workspaces

- Databricks units (DBUs)

- Data science and engineering

- Dashboards and visualizations

- Databricks interfaces

- Authentication and authorization

- Computation management

- Machine learning

- Data management

Send candidates a Data Science test to see what they know about machine learning, neural networks, and programming. Their test results will provide you with valuable insight into their knowledge of data engineering tools.

2. Which cloud service category does Databricks belong to: SaaS, PaaS, or IaaS?

Since a workspace in Databricks is software that users reach through a web browser, it can be classed as software-as-a-service (SaaS): users connect to and navigate cloud-based apps via the internet. Strong candidates may add that Databricks is also frequently described as platform-as-a-service (PaaS), because teams build and deploy their own data applications on top of it.

Coding professionals will have to manage their storage and deploy applications after adjusting their designs in Databricks. Therefore, it’s essential to hire a candidate who understands cloud computing.

3. Should you ever remove and clean up leftover data frames in Databricks?

The simple answer is no, unless the data frames are cached. Cached data frames take up memory and storage on the cluster, so it’s better to release cached datasets that no longer have any use in Databricks.

Your top candidates might also mention that deleting unused frames could reduce cloud storage costs and enhance the efficiency of data engineering tools.

4. How do you create a Databricks personal access token?

A personal access token is a string of characters that authenticates a user who tries to access a system. This type of authentication is scalable and efficient because services can verify users without slowing down.

Candidates should have some experience with creating access tokens. Look for skilled applicants with strong programming skills who can describe the following steps:

- Click the user profile icon in the Databricks workspace

- Choose “User Settings” and click the “Access Tokens” tab

- Click the button labeled “Generate New Token”

- Optionally add a comment and a lifetime, click “Generate,” then copy the new token and store it somewhere safe, since it is only displayed once
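Tokens can also be requested programmatically. The following is a minimal sketch, assuming the Databricks Token API endpoint `/api/2.0/token/create`; it only builds the HTTP request and never sends it. The workspace URL, the existing credential, and the helper name `build_create_token_request` are placeholders made up for illustration.

```python
import json
import urllib.request


def build_create_token_request(host, auth_token, comment, lifetime_seconds):
    """Build (but do not send) a request asking the workspace for a new token."""
    body = json.dumps(
        {"comment": comment, "lifetime_seconds": lifetime_seconds}
    ).encode("utf-8")
    return urllib.request.Request(
        url=f"{host}/api/2.0/token/create",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {auth_token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder values: swap in a real workspace URL and credential before use.
request = build_create_token_request(
    host="https://example.cloud.databricks.com",
    auth_token="<existing-token>",
    comment="interview demo",
    lifetime_seconds=3600,
)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would actually send it. A candidate who mentions setting a short lifetime on the token is showing good security instincts.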

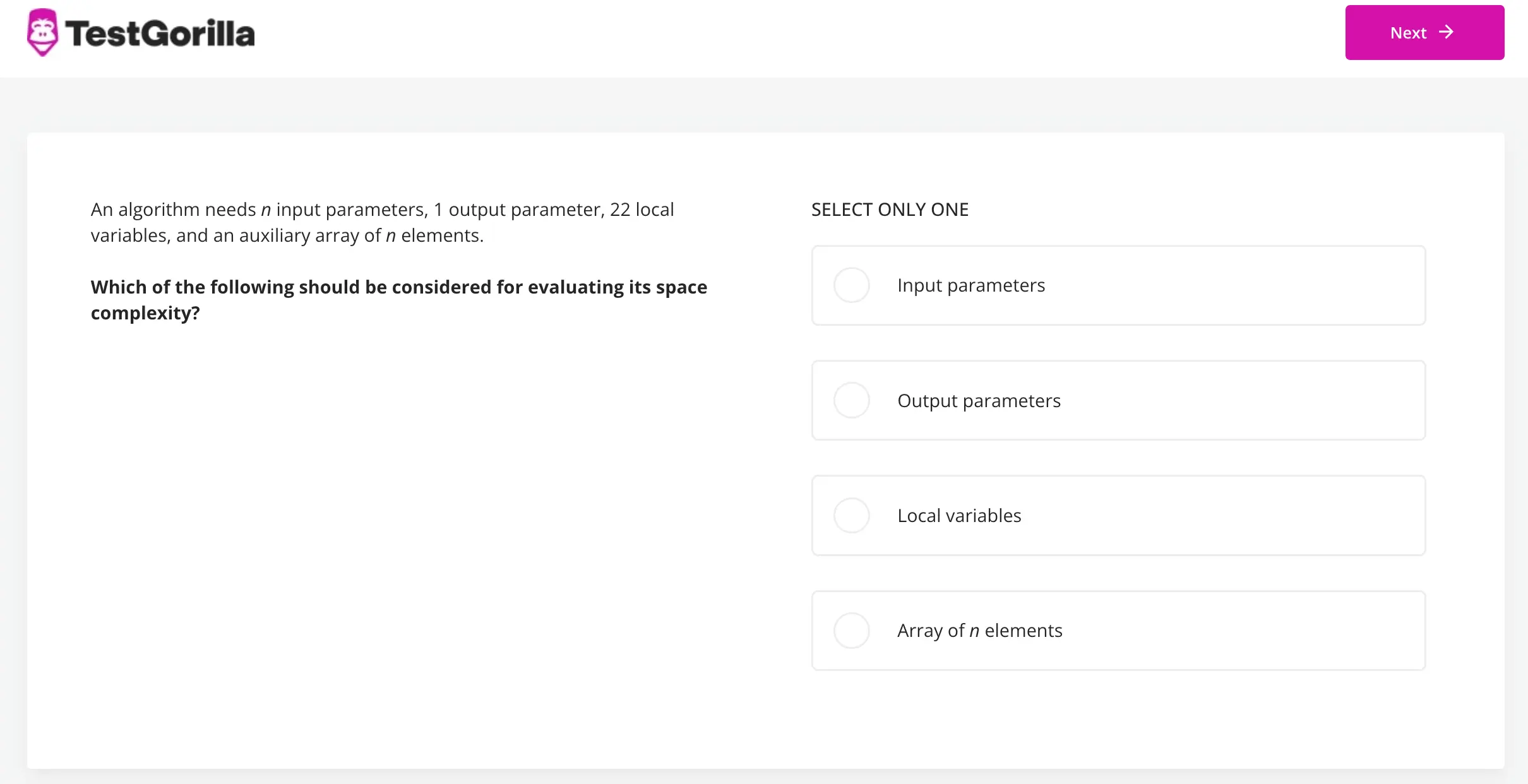

Use a Software Engineer test (preview below) to determine whether candidates can use a programming language and understand the fundamental concepts of computer science.

5. What are the benefits of using Databricks?

Candidates who have experience with Databricks should know about its many uses and benefits. Since it has flexible and powerful data engineering tools, it can help programmers and developers create the best processing frameworks.

Some top benefits include the following:

- Familiar languages and environment: Databricks integrates with programming languages such as Python, R, and SQL, making it versatile software for all programmers.

- Extensive documentation: This powerful software provides detailed instructions on how to reference information and connect to third-party applications. Its extensive support and documentation mean users won’t struggle to navigate the data engineering tools.

- Advanced modeling and machine learning: One reason for using Databricks is its ability to enhance machine learning models. This enables programmers and developers to focus on generating high-quality data and algorithms.

- Big data processing: The data engineering tools can handle large amounts of data, meaning users don’t have to worry about slow processing.

- Spark cluster creation process: Programmers can use Spark clusters to manage processes and complete tasks in Databricks. A Spark cluster usually comprises a driver program, worker nodes, and a cluster manager.

Send candidates a Microsoft SQL Server test to determine whether they can navigate a database management system when using Databricks.

6. What does the caching process involve?

Caching is a process that stores copies of important data in temporary storage. This lets users access this data quickly and efficiently on a website or platform. The high-speed data storage layer enables web browsers to cache HTML files, JavaScript, and images to load content faster.

Candidates should understand the functions of caching. This process is common in Databricks, so look out for applicants who can store data and copy files.
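The general idea of caching can be shown in a few lines. This is a plain Python analogy using the standard library's `functools.lru_cache`, not Databricks' own caching layer; the function `load_report` and its counter are hypothetical stand-ins for a slow lookup.

```python
from functools import lru_cache

# Counts how many times the "expensive" work really runs.
calls = {"count": 0}


@lru_cache(maxsize=128)
def load_report(report_id):
    """Stand-in for a slow lookup whose result is worth keeping in memory."""
    calls["count"] += 1
    return f"report-{report_id}"


first = load_report(7)   # computed, then stored in the cache
second = load_report(7)  # served from the cache without recomputation
info = load_report.cache_info()
print(first, second, info.hits, info.misses)

# Release the cached copies once they are no longer needed.
load_report.cache_clear()
```

In Spark code the equivalent pattern is calling `cache()` on a DataFrame and `unpersist()` when the data is no longer needed.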

12 intermediate Databricks interview questions to ask your candidates

Use these 12 intermediate Databricks interview questions to test your candidates’ knowledge of data engineering and processing.

1. What are the major features of Databricks?

2. What is the difference between an instance and a cluster?

3. Name some of the key use cases of Kafka in Databricks.

4. How would you use Databricks to process big data?

5. Give an example of a data analysis project you’ve worked on.

6. How would you ensure the security of sensitive data in a Databricks environment?

7. What is the management plane in Databricks?

8. How do you import third-party JARs or dependencies in Databricks?

9. Define data redundancy.

10. What is a job in Databricks?

11. How do you capture streaming data in Databricks?

12. How can you connect your Azure Databricks (ADB) cluster to your favorite IDE?

5 sample answers to key intermediate Databricks interview questions

Compare your candidates’ responses with these sample answers to gauge their level of expertise using Databricks.

1. What is a job in Databricks?

A job in Databricks is a way to manage your data processing and applications in a workspace. It can consist of one task or be a multi-task workflow that relies on complex dependencies.

Databricks does most of the work by monitoring clusters, reporting errors, and completing task orchestration. The easy-to-use scheduling system enables programmers to keep jobs running without having to move data to different locations.
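A multi-task job can be pictured as a small dependency graph. The sketch below is an illustration only: the job specification loosely follows the `tasks` and `depends_on` fields of the Databricks Jobs API, the task keys and notebook paths are invented, and the ordering function uses Python's standard `graphlib` rather than anything Databricks runs internally.

```python
from graphlib import TopologicalSorter

# Hypothetical three-task workflow; names and paths are made up.
job_spec = {
    "name": "nightly-sales-pipeline",
    "tasks": [
        {
            "task_key": "ingest",
            "notebook_task": {"notebook_path": "/Pipelines/ingest"},
        },
        {
            "task_key": "transform",
            "depends_on": [{"task_key": "ingest"}],
            "notebook_task": {"notebook_path": "/Pipelines/transform"},
        },
        {
            "task_key": "report",
            "depends_on": [{"task_key": "transform"}],
            "notebook_task": {"notebook_path": "/Pipelines/report"},
        },
    ],
}


def execution_order(spec):
    """Return task keys in an order that respects every dependency."""
    graph = {
        task["task_key"]: {dep["task_key"] for dep in task.get("depends_on", [])}
        for task in spec["tasks"]
    }
    return list(TopologicalSorter(graph).static_order())


order = execution_order(job_spec)
print(order)
```

Each task runs only after the tasks it depends on have finished, which is the behavior candidates should describe when they talk about task orchestration.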

2. What is the difference between an instance and a cluster?

An instance represents a single virtual machine used to run an application or service. A cluster refers to a set of instances that work together to provide a higher level of performance or scalability for an application or service.
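The relationship can be modeled in a few lines. This is a conceptual sketch with hypothetical class names and sizes, not a Databricks API: a cluster is simply a named group of instances whose resources add up.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    """A single virtual machine with its own resources."""
    name: str
    cores: int
    memory_gb: int


@dataclass
class Cluster:
    """A set of instances that work together on the same workload."""
    name: str
    instances: list = field(default_factory=list)

    def total_cores(self):
        return sum(instance.cores for instance in self.instances)

    def total_memory_gb(self):
        return sum(instance.memory_gb for instance in self.instances)


# Hypothetical cluster: one driver plus two workers.
cluster = Cluster(
    name="etl-cluster",
    instances=[
        Instance("driver", cores=4, memory_gb=16),
        Instance("worker-1", cores=4, memory_gb=16),
        Instance("worker-2", cores=4, memory_gb=16),
    ],
)
print(cluster.total_cores(), cluster.total_memory_gb())
```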

Checking if candidates have this knowledge isn’t complicated when you use the right assessment methods. Use a Machine Learning test to find out more about candidates’ experience using software applications and networking resources. This also gives your job applicants a chance to show how they would manage large amounts of data.

3. How would you ensure the security of sensitive data in a Databricks environment?

Databricks has network protections that help users secure information in a workspace environment. This process prevents sensitive data from getting lost or ending up in the wrong storage system.

To ensure proper security, an administrator can configure IP access lists so that only connections from approved network locations can reach the Databricks workspace. Then they should restrict outbound network access using a virtual private cloud.
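The logic behind an IP allow list can be sketched with Python's standard `ipaddress` module. This illustrates the concept only and is not how Databricks enforces the rule; the network ranges below are reserved documentation addresses chosen as placeholders.

```python
import ipaddress

# Hypothetical allow list: one office network range and one VPN gateway.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.7/32"),
]


def is_allowed(client_ip, networks=ALLOWED_NETWORKS):
    """Return True when the client address falls inside an approved range."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in networks)


print(is_allowed("203.0.113.42"))  # inside the office range
print(is_allowed("192.0.2.10"))    # not on the list
```

A request from an address outside every approved range would be rejected before it reaches the workspace.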

4. What is the management plane in Databricks?

The management plane is a set of tools and services used to manage and control the Databricks environment. It includes the Databricks workspace, which provides a web-based interface for managing data, notebooks, and clusters. It also offers security, compliance, and governance features.

Send candidates a Cloud System Administration test to assess their networking capabilities. You can also use this test to learn more about their knowledge of computer infrastructure.

5. Define data redundancy.

Data redundancy occurs when the same data is stored in multiple locations in the same database or dataset. Redundancy should be minimized since it is usually unnecessary and can lead to inconsistencies and inefficiencies. Therefore, it’s usually best to identify and remove redundancies to avoid using up storage space.
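Removing redundant records can be sketched in plain Python. The helper name, field names, and sample rows below are hypothetical; the function keeps the first occurrence of each record and drops later copies.

```python
def remove_redundant_rows(rows, key_fields):
    """Keep the first row for each combination of key fields."""
    seen = set()
    unique_rows = []
    for row in rows:
        key = tuple(row[field] for field in key_fields)
        if key not in seen:
            seen.add(key)
            unique_rows.append(row)
    return unique_rows


# Sample data with one duplicated customer record.
customers = [
    {"id": 1, "email": "ana@example.com"},
    {"id": 2, "email": "ben@example.com"},
    {"id": 1, "email": "ana@example.com"},
]

deduplicated = remove_redundant_rows(customers, key_fields=("id", "email"))
print(len(customers), len(deduplicated))
```

On a Spark DataFrame, candidates would more likely reach for `dropDuplicates()`, which applies the same idea at scale.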

15 challenging Databricks interview questions to ask experienced coders

Below is a list of 15 challenging Databricks interview questions to ask expert candidates. Choose questions that will help you learn more about their programming knowledge and experience using data analytics.

1. What is a Databricks cluster?

2. Describe a dataflow map.

3. List the stages of a CI/CD pipeline.

4. What are the different applications for Databricks table storage?

5. Define serverless data processing.

6. How will you handle Databricks code while you work with Git or TFS in a team?

7. Write the syntax to connect the Azure storage account and Databricks.

8. Explain the difference between data analytics workloads and data engineering workloads.

9. What do you know about SQL pools?

10. What is a Recovery Services Vault?

11. Can you cancel an ongoing job in Databricks?

12. Name some rules of a secret scope.

13. Write the syntax to delete the IP access list.

14. How do you set up a DEV environment in Databricks?

15. What can you accomplish using APIs?
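For questions 11 and 13, a strong answer might resemble the sketch below. It assumes the Databricks REST endpoints `/api/2.1/jobs/runs/cancel` and `/api/2.0/ip-access-lists/{list_id}`, and it only builds the requests without sending them. The host, token, run ID, list ID, and helper names are all placeholders.

```python
import json
import urllib.request

HOST = "https://example.cloud.databricks.com"  # placeholder workspace URL
TOKEN = "<personal-access-token>"              # placeholder credential


def build_request(method, path, payload=None):
    """Build (but do not send) an authenticated request to the workspace."""
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    return urllib.request.Request(
        url=f"{HOST}{path}",
        data=data,
        method=method,
        headers={"Authorization": f"Bearer {TOKEN}"},
    )


def cancel_run_request(run_id):
    """Question 11: cancel an ongoing job run."""
    return build_request("POST", "/api/2.1/jobs/runs/cancel", {"run_id": run_id})


def delete_ip_access_list_request(list_id):
    """Question 13: delete an IP access list by its ID."""
    return build_request("DELETE", f"/api/2.0/ip-access-lists/{list_id}")


cancel = cancel_run_request(42)                  # hypothetical run ID
delete = delete_ip_access_list_request("abc123")  # hypothetical list ID
print(cancel.get_method(), cancel.full_url)
print(delete.get_method(), delete.full_url)
```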

5 sample answers to key challenging Databricks interview questions

Revisit these sample answers to challenging Databricks interview questions when choosing a candidate to fill your open position.

1. Define serverless data processing.

Serverless data processing is a way to process data without needing to worry about the underlying infrastructure. You can save time and reduce costs by having a service like Databricks manage the infrastructure and allocate resources as needed.

Databricks can provide the necessary resources on demand and scale them as needed to simplify the management of data processing infrastructure.

2. How would you handle Databricks code while working with Git or TFS in a team?

Git and Team Foundation Server (TFS) are version control systems that help programmers manage code. TFS cannot be used in Databricks because the software doesn’t support it. Therefore, programmers can only use Git when working with a repository.

Candidates should also know that Git is an open-source, distributed version control system, whereas TFS is a centralized version control system offered by Microsoft.

Since Databricks integrates with Git, data engineers and programmers can easily manage code without constantly updating the software or reducing storage because of low capacity.

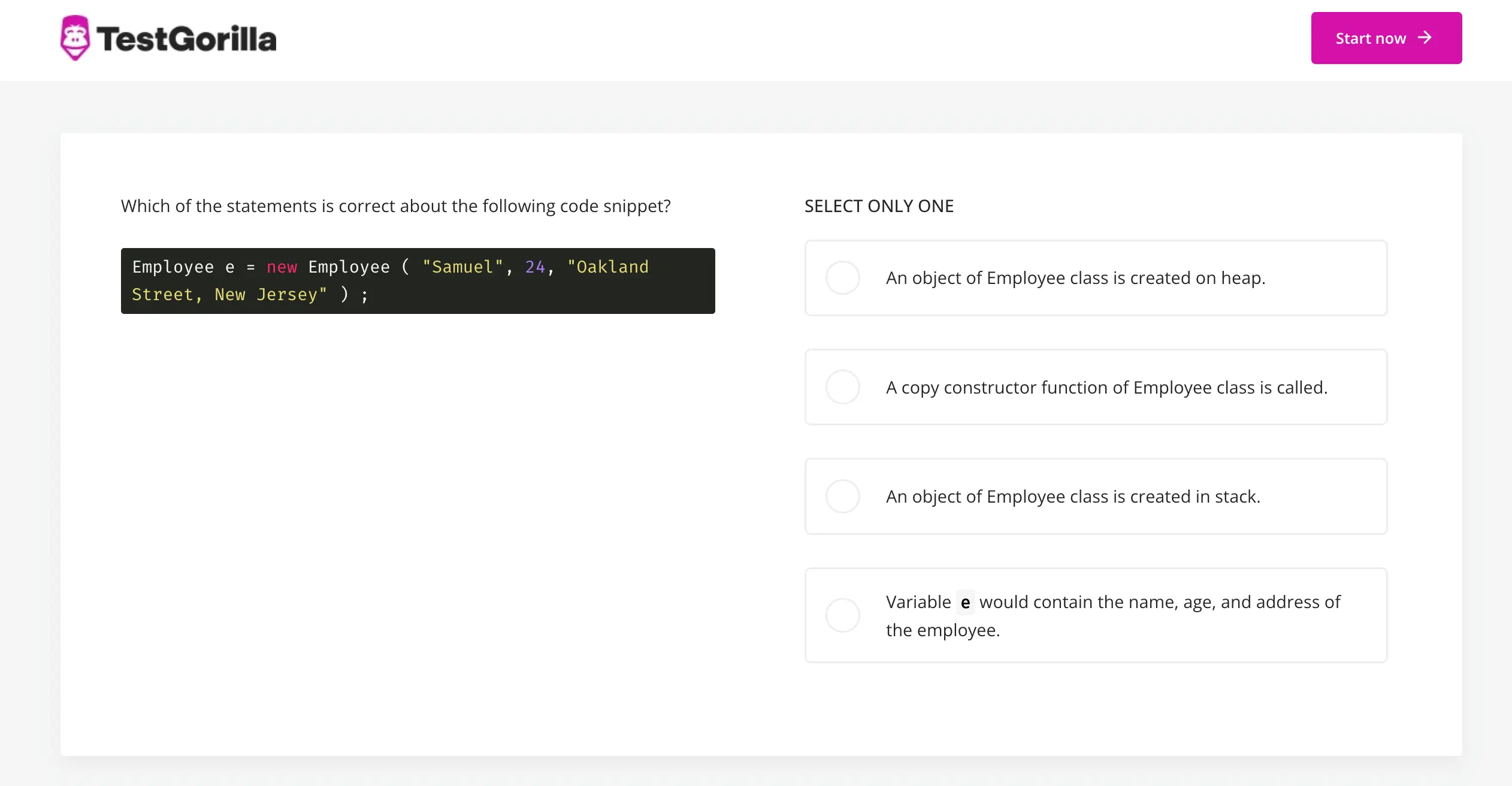

The Git skills test (preview below) can help you choose candidates who are well versed in this open-source tool. It also gives them an opportunity to prove their ability to manage data analytics projects and source code.

3. Explain the difference between data analytics workloads and data engineering workloads.

Data analytics workloads involve obtaining insights, trends, and patterns from data. Meanwhile, data engineering workloads involve building and maintaining the infrastructure needed to store, process, and manage data.

4. Name some rules of a secret scope in Databricks.

A secret scope is a collection of secrets identified by a name. Programmers and developers can use this feature to store and manage sensitive information, such as credentials or application programming interface (API) authentication information, while protecting it from unauthorized access.

One rule candidates could mention is that a Databricks workspace can only hold a maximum of 100 secret scopes.
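Naming rules are another point candidates may raise. The sketch below assumes the rule, as we understand it from the Databricks documentation, that scope names may contain only alphanumeric characters, dashes, underscores, `@`, and periods, and may not exceed 128 characters. Treat the pattern as an assumption to verify against the current documentation; the function name is hypothetical.

```python
import re

# Assumed rule: letters, digits, dash, underscore, @, period; 1 to 128 characters.
SCOPE_NAME_PATTERN = re.compile(r"[A-Za-z0-9_\-@.]{1,128}")


def is_valid_scope_name(name):
    """Return True when the name satisfies the assumed naming rule."""
    return SCOPE_NAME_PATTERN.fullmatch(name) is not None


print(is_valid_scope_name("prod-db.creds"))
print(is_valid_scope_name("bad name!"))
```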

You can send candidates a REST API test to see how they manage data and create scopes for an API. This test also determines whether candidates can deal with errors and security considerations.

5. What is a Recovery Services vault?

A Recovery Services vault is an Azure management function that performs backup-related operations. It enables users to restore important information and copy data to adhere to backup regulations. The service can also help users arrange data in a more organized and manageable way.

When should you use Databricks interview questions in your hiring process?

You should use Databricks interview questions after sending candidates skills tests. Pre-employment screening will help you quickly narrow down your list of candidates. A skills test determines whether the job applicant has the required skills and knowledge to complete specific tasks.

For example, you can send candidates a Clean Code test to ensure they have strong coding skills and can follow software design principles. To learn more about applicants’ personalities, consider using the 16 Types personality test to gain insight into their job preferences and decision-making process.

Always remember to use skills assessments that relate to your open position. For a role that relies on Databricks, it’s better to focus on programming skills, situational judgment, language skills, and cognitive abilities.

Hire a coding expert using our skills tests and Databricks interview questions

Now that you have some interview questions, where can you find relevant skills tests?

Search our test library to start building a skills assessment that suits your role. We have plenty of options that cover programming skills and language proficiency. Book a free 30-minute demo to learn more about our services, creating high-quality assessments, and enhancing your hiring process.

You can also take a product tour of our screening tools and custom tests. We believe that a positive candidate experience derives from a comprehensive recruitment strategy. So streamlining your hiring process using the best skills tests and interview questions is essential.

To hire a coding expert for your company, use our pre-employment assessments and Databricks interview questions.
