55 Snowflake interview questions (+ sample answers) to assess candidates’ data skills

If your organization’s teams are using Snowflake for their large-scale data analytics projects, you need to assess candidates’ proficiency with this tool quickly and efficiently.

What’s the best way to do this, though?

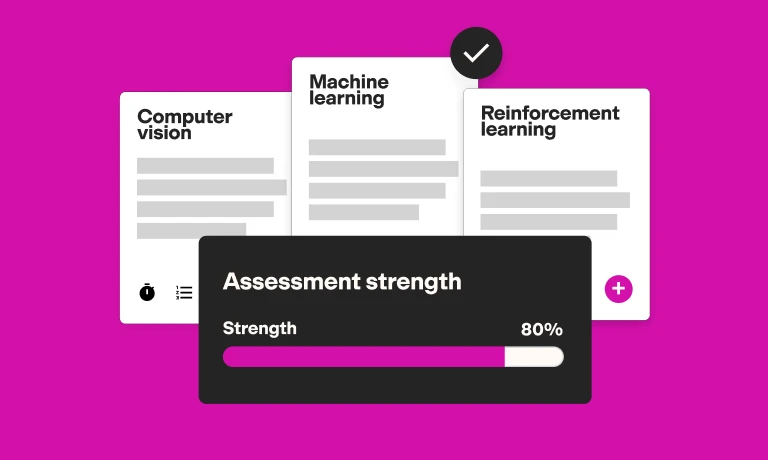

With the help of skills tests and interviews. Use our Snowflake test to make sure candidates have sufficient experience with data architecture and SQL. Combine it with other tests for an in-depth talent assessment and then invite those who perform best to an interview.

During interviews, ask candidates targeted Snowflake interview questions to further evaluate their skills and knowledge. Whether you’re hiring a database administrator, data engineer, or data analyst, this method will enable you to identify the best person for the role.

To help you prepare for the interviews, we’ve compiled a list of 55 Snowflake interview questions you can ask candidates, along with sample answers to 25 of them.

Top 25 Snowflake interview questions to evaluate applicants’ proficiency

In this section, you’ll find the best 25 interview questions to assess candidates’ Snowflake skills. We’ve also included sample answers, so that you know what to look for, even if you have no experience with Snowflake yourself.

1. What is Snowflake? How is it different from other data warehousing solutions?

Snowflake is a cloud-based data warehousing service for data storage, processing, and analysis.

Traditional data warehouses often require significant hardware management and tuning. In contrast, Snowflake separates compute from storage. This means you can scale computing power and storage independently, based on your needs, without one affecting the other and without requiring costly hardware upgrades.

Snowflake also runs on multiple cloud platforms (Amazon Web Services, Microsoft Azure, and Google Cloud), so businesses can choose the provider that suits them and work across more than one.

2. What are virtual warehouses in Snowflake? Why are they important?

Virtual warehouses in Snowflake are separate compute clusters that perform data processing tasks. Each virtual warehouse operates independently, which means they do not share compute resources with each other.

This architecture is crucial because it allows multiple users or teams to run queries simultaneously without affecting each other's performance. The ability to scale warehouses on demand enables users to manage and allocate resources based on their needs and reduce costs.

3. Explain the data sharing capabilities of Snowflake.

Snowflake's data sharing capabilities enable the secure and governed sharing of data in real time across different Snowflake accounts, without copying or transferring the data itself.

This is useful for organizations that need to collaborate with clients or external partners while maintaining strict governance and security protocols. Users can share entire databases and schemas, or specific tables and views.

4. What types of data can Snowflake store and process?

Snowflake is designed to handle a wide range of data types, from structured data to semi-structured data such as JSON, XML, and Avro.

Snowflake eliminates the need for a separate NoSQL database to handle semi-structured data, because it can natively ingest, store, and directly query semi-structured data using standard SQL. This simplifies the data architecture and enables analysts to work with different data types within the same platform.

5. What is Time Travel in Snowflake? How would you use it?

Time Travel in Snowflake allows users to access historical data at any point within a defined retention period. The default is one day, and the period can be extended up to 90 days on Enterprise Edition and higher.

Candidates should point out that this is useful for recovering data that was accidentally modified or deleted and also for conducting audits on historical data. This limits the impact of human error and helps protect the integrity of your data.

6. Explain how Snowflake processes queries using micro-partitions.

Snowflake automatically organizes table data into micro-partitions. Each micro-partition contains information about the data range, min/max values, and other metrics. Snowflake uses this metadata to eliminate unnecessary data scanning. This speeds up retrieval, reduces resource usage, and improves the performance of queries.
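To make the pruning idea concrete, here is a minimal Python sketch. It is only an illustration of the principle, not Snowflake's actual implementation: the `MicroPartition` class, the sample data, and the function names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MicroPartition:
    """Hypothetical stand-in for a micro-partition and its column metadata."""
    name: str
    min_value: int
    max_value: int
    rows: list


def prune(partitions, low, high):
    """Keep only partitions whose [min, max] range overlaps [low, high]."""
    return [p for p in partitions if p.max_value >= low and p.min_value <= high]


def query_between(partitions, low, high):
    """Scan only the surviving partitions and return (matching rows, scanned names)."""
    scanned = prune(partitions, low, high)
    matches = [v for p in scanned for v in p.rows if low <= v <= high]
    return matches, [p.name for p in scanned]


# Illustrative data: three partitions with non-overlapping value ranges.
PARTITIONS = [
    MicroPartition("p1", 1, 100, [1, 50, 100]),
    MicroPartition("p2", 101, 200, [101, 150, 200]),
    MicroPartition("p3", 201, 300, [201, 250, 300]),
]

rows, scanned = query_between(PARTITIONS, 120, 180)
print(rows, scanned)  # only p2 is read; p1 and p3 are skipped via metadata
```

The filter never touches the rows of `p1` or `p3`: the min/max metadata alone is enough to rule them out, which is the effect a strong answer should describe.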

7. Describe a data modeling project you executed in Snowflake.

Here, expect candidates to discuss a recent Snowflake project in detail, talk about the schemas they used, challenges they encountered, and results they obtained.

For example, skilled candidates might explain how they used Snowflake to support a major data-analytics project, using star and snowflake schemas to organize data. They could mention how they optimized models for query performance and flexibility, using Snowflake’s capabilities to handle structured and semi-structured data.

8. How do you decide when to use a star schema versus a snowflake schema in your data warehouse design?

The choice between a star schema and a snowflake schema depends on the specific requirements of the project:

A star schema is simpler and offers better query performance because it minimizes the number of joins needed between tables. It’s effective for simpler or smaller datasets where query speed is crucial.

A snowflake schema involves more normalization, which reduces data redundancy and can lead to storage efficiencies. This schema is ideal when the dataset is complex and requires more detailed analysis.

The decision depends on balancing the need for efficient data storage versus fast query performance.

9. Explain how to use Resource Monitors in Snowflake.

Resource Monitors track and control the consumption of compute resources, ensuring that usage stays within budgeted limits. They’re particularly useful in multi-team environments, and users can set them up for specific warehouses or for the entire account.

Candidates should explain that to use them, they would:

Define a resource monitor

Set a credit quota and the interval at which it resets (for example, monthly)

Specify actions for when resource usage reaches or exceeds those limits

Actions can include sending notifications, suspending the assigned warehouses once running queries finish, or suspending them immediately and canceling running queries.
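As a rough sketch of what those steps look like in practice, the Python helper below assembles the two SQL statements a candidate might describe: one that creates the monitor and one that assigns it to a warehouse. The helper itself, the monitor and warehouse names, the quota, and the thresholds are all illustrative assumptions. The statements are only built as strings here and would still need to be run in a Snowflake session by a suitably privileged role.

```python
# Actions a resource monitor trigger can take.
ALLOWED_ACTIONS = {"NOTIFY", "SUSPEND", "SUSPEND_IMMEDIATE"}


def resource_monitor_sql(name, credit_quota, warehouse, triggers):
    """Build CREATE RESOURCE MONITOR and ALTER WAREHOUSE statements as strings.

    `triggers` is a list of (percent_of_quota, action) pairs.
    """
    for _, action in triggers:
        if action not in ALLOWED_ACTIONS:
            raise ValueError(f"unsupported action: {action}")

    trigger_lines = "\n".join(
        f"    ON {percent} PERCENT DO {action}" for percent, action in triggers
    )
    create = (
        f"CREATE OR REPLACE RESOURCE MONITOR {name}\n"
        f"  WITH CREDIT_QUOTA = {credit_quota}\n"
        "  FREQUENCY = MONTHLY\n"
        "  START_TIMESTAMP = IMMEDIATELY\n"
        "  TRIGGERS\n"
        f"{trigger_lines};"
    )
    assign = f"ALTER WAREHOUSE {warehouse} SET RESOURCE_MONITOR = {name};"
    return create, assign


# Hypothetical names and limits, chosen only for the example.
create_stmt, assign_stmt = resource_monitor_sql(
    "team_monitor",
    100,
    "analytics_wh",
    [(80, "NOTIFY"), (100, "SUSPEND"), (110, "SUSPEND_IMMEDIATE")],
)
print(create_stmt)
print(assign_stmt)
```

Staggering the thresholds this way gives the team a warning before any warehouse is actually suspended.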

10. What are some methods to improve data loading performance in Snowflake?

There are several ways to improve data loading performance in Snowflake:

File sizing: Using larger files, in the 100 MB to 250 MB range (compressed), reduces the overhead of handling many small files

File formats: Efficient, compressed, columnar file formats like Parquet or ORC enhance loading speeds

Concurrency: Running multiple COPY commands in parallel can maximize throughput

Remove unused columns: Pre-processing files to remove unnecessary columns before loading can reduce the volume of transferred and processed data
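The file-sizing point can be illustrated with a small Python sketch that plans how to combine many small files into batches close to a target size before loading. The function name, the 150 MB target, and the sample sizes are assumptions made for this example. A real pipeline would also need to concatenate and compress the files themselves.

```python
def plan_batches(file_sizes_mb, target_mb=150):
    """Greedily group files so each combined batch stays at or under target_mb.

    A single file larger than target_mb ends up in a batch of its own.
    """
    batches, current, current_size = [], [], 0
    for size in file_sizes_mb:
        # Start a new batch when adding this file would exceed the target.
        if current and current_size + size > target_mb:
            batches.append(current)
            current, current_size = [], 0
        current.append(size)
        current_size += size
    if current:
        batches.append(current)
    return batches


small_files_mb = [40, 60, 50, 30, 120, 10]
print(plan_batches(small_files_mb))  # [[40, 60, 50], [30, 120], [10]]
```

Each resulting batch can then be loaded by its own COPY command, which fits the concurrency point in the list above.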

11. How would you scale warehouses dynamically based on load?

Candidates should mention Snowflake’s auto-scale feature, which automatically adjusts the number of clusters in a multi-cluster warehouse based on the current workload.

This feature allows Snowflake to add compute resources when demand increases, for example during heavy query loads, and to remove them when demand decreases.
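The behavior can be approximated with a deliberately simplified Python model: the number of running clusters follows the load but is clamped between a configured minimum and maximum, which correspond to the warehouse's `MIN_CLUSTER_COUNT` and `MAX_CLUSTER_COUNT` settings. The fixed "queries per cluster" capacity is an assumption made for illustration. Snowflake's real scaling policies react to queuing and load over time rather than to a simple ratio.

```python
import math


def clusters_needed(concurrent_queries, queries_per_cluster, min_clusters, max_clusters):
    """Simplified model: scale with load, clamped to the configured cluster range."""
    if queries_per_cluster <= 0:
        raise ValueError("queries_per_cluster must be positive")
    wanted = math.ceil(concurrent_queries / queries_per_cluster)
    return max(min_clusters, min(max_clusters, wanted))


# Hypothetical load levels for a warehouse allowed to run 1 to 4 clusters.
for load in (0, 10, 25, 100):
    print(load, clusters_needed(load, 8, 1, 4))
```

The clamp is the important part for cost control: however high the load goes, the warehouse never runs more clusters than the maximum you configured.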

12. Explain the role of Streams and Tasks in Snowflake.

Streams in Snowflake capture data manipulation language (DML) changes to tables, such as INSERTs, UPDATEs, and DELETEs. This enables users to see the history of changes to data.

Tasks in Snowflake enable users to schedule automated SQL statements, often using the changes tracked by Streams.

Together, Streams and Tasks can automate and orchestrate incremental data processing workflows, such as transforming and loading data into target tables.
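The interplay can be sketched with a toy Python model, in which a stream remembers an offset into a table's change log and a task processes only what has changed since the last run. All classes and names below are hypothetical. Real Snowflake streams expose net changes through SQL and advance their offset when consumed in a DML statement, which this sketch only imitates.

```python
class Table:
    """Toy source table that records every DML change it receives."""

    def __init__(self):
        self.rows = {}
        self.change_log = []  # entries are (action, key, value)

    def insert(self, key, value):
        self.rows[key] = value
        self.change_log.append(("INSERT", key, value))

    def delete(self, key):
        value = self.rows.pop(key)
        self.change_log.append(("DELETE", key, value))


class Stream:
    """Tracks an offset into the change log, so each change is consumed once."""

    def __init__(self, table):
        self.table = table
        self.offset = len(table.change_log)

    def has_data(self):
        return self.offset < len(self.table.change_log)

    def consume(self):
        changes = self.table.change_log[self.offset:]
        self.offset = len(self.table.change_log)
        return changes


def run_task(stream, target):
    """Stand-in for a scheduled task: apply new changes to the target table."""
    if not stream.has_data():
        return 0  # nothing to do, so the run is skipped
    changes = stream.consume()
    for action, key, value in changes:
        if action == "INSERT":
            target[key] = value.upper()  # example transformation
        elif action == "DELETE":
            target.pop(key, None)
    return len(changes)


source = Table()
stream = Stream(source)
target = {}
source.insert(1, "alpha")
source.insert(2, "beta")
print(run_task(stream, target), target)
```

A second call to `run_task` with no new changes processes nothing, which is the property that makes this pattern incremental.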

13. Can you integrate Snowflake with a data lake?

Yes, it’s possible to integrate Snowflake with a data lake. This typically involves using Snowflake’s external tables to query data stored in external storage solutions like Amazon S3, Azure Data Lake Storage, or Google Cloud Storage.

This setup enables users to leverage the scalability of data lakes for raw data storage while using the platform’s powerful computing capabilities for querying and analyzing the data without moving it into Snowflake.

14. How would you set up a data lake integration using Snowflake’s external tables?

Use this question to follow up on the previous one. Candidates should outline the following steps for the successful integration of a data lake with external tables:

Ensure data is stored in a supported cloud storage solution

Define an external stage in Snowflake that points to the location of the data-lake files

Create external tables that reference this stage

Specify the schema based on the data format (like JSON or Parquet)

This setup enables users to query the content of the data lake directly using SQL without importing data into Snowflake.
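The steps above might translate into SQL along the following lines, sketched here as a Python helper that only assembles the statements as strings. The stage, bucket URL, storage integration, table, and column names are hypothetical. The sketch also assumes Parquet files and an existing storage integration that grants Snowflake access to the bucket.

```python
def external_table_statements(stage, url, integration, table):
    """Return the SQL for a stage, an external table on it, and a sample query."""
    create_stage = (
        f"CREATE STAGE {stage}\n"
        f"  URL = '{url}'\n"
        f"  STORAGE_INTEGRATION = {integration};"
    )
    # Columns are defined as expressions over VALUE, the VARIANT that
    # holds each row of the external file.
    create_table = (
        f"CREATE EXTERNAL TABLE {table} (\n"
        "  event_id VARCHAR AS (VALUE:event_id::VARCHAR),\n"
        "  event_ts TIMESTAMP_NTZ AS (VALUE:event_ts::TIMESTAMP_NTZ)\n"
        ")\n"
        f"  LOCATION = @{stage}\n"
        "  FILE_FORMAT = (TYPE = PARQUET);"
    )
    query = f"SELECT event_id, event_ts FROM {table} LIMIT 10;"
    return [create_stage, create_table, query]


# Hypothetical object names, used only for the example.
for statement in external_table_statements(
    "lake_stage", "s3://example-bucket/events/", "lake_integration", "events_ext"
):
    print(statement)
    print()
```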

15. What are the encryption methods that Snowflake uses?

Snowflake uses multiple layers of encryption to secure data:

All data stored in Snowflake, including backups and metadata, is encrypted using AES-256 strong encryption

Snowflake manages encryption keys using a hierarchical key model, with each level of the hierarchy having a different rotation and scope policy

Snowflake supports customer-managed keys, where customers can control the key rotation and revocation policies independently

16. How would you audit data access in Snowflake?

Snowflake provides different tools for auditing data access:

The Access History feature enables you to track who accessed what data and when

Snowflake’s role-based access control enables you to review and manage who has permission to access what data, further enhancing your auditing capabilities

Third-party tools and services can help monitor, log, and analyze access patterns

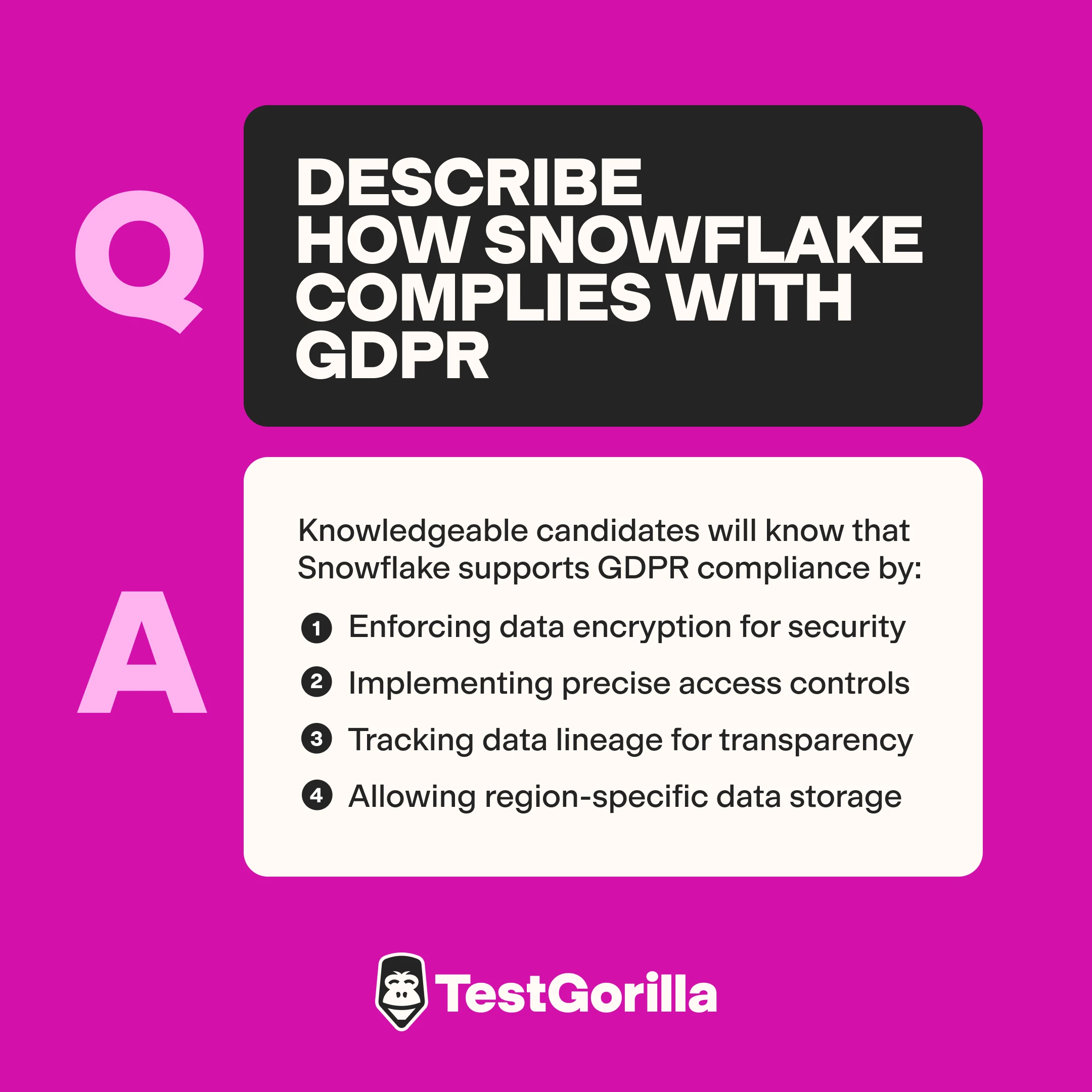

17. Describe how Snowflake complies with GDPR.

Knowledgeable candidates will know that Snowflake supports GDPR compliance by:

Ensuring data encryption at rest and in transit

Offering fine-grained access controls

Providing data-lineage features which are essential for tracking data processing

Enabling users to choose data storage in specific geographical regions to comply with data residency requirements

If you need to evaluate candidates’ GDPR knowledge, you can use our GDPR and Data Privacy test.

18. What was the most complex data workflow you automated using Snowflake?

Expect candidates to explain how they automated a complex workflow in the past; for example, they might have created an analytics platform for large-scale IoT data or a platform handling financial data.

Look for details on the challenges they faced and the specific ways they tackled them to process and transform the data. Which of Snowflake’s features did they use? What external integrations did they put in place? How did they share access with different users? How did they maintain data integrity?

19. How can you use Snowflake to support semi-structured data?

Snowflake is excellent at handling semi-structured data such as JSON, XML, Avro, and Parquet files. It can ingest semi-structured data directly without requiring a predefined schema, storing this data in a VARIANT type column.

Data engineers can then use Snowflake’s powerful SQL engine to query and transform this data just like structured data.

This is particularly useful for cases where the data schema can evolve over time. Snowflake dynamically parses and identifies the structure when queried, simplifying data management and integration.
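The schema-on-read idea can be shown with a short Python analogy. Records with different shapes sit side by side, and a path lookup returns a value where the field exists and nothing where it does not, much as a path expression on a VARIANT column returns NULL for a missing key. The `get_path` helper and the sample events are hypothetical.

```python
import json


def get_path(document, path, default=None):
    """Follow a dot-separated path through nested dicts, or return default."""
    current = document
    for key in path.split("."):
        if isinstance(current, dict) and key in current:
            current = current[key]
        else:
            return default
    return current


# Two events whose schemas differ: the second has gained a nested field.
raw_events = [
    '{"device": {"id": "a1"}, "temp": 21.5}',
    '{"device": {"id": "b2", "firmware": {"version": "2.0"}}, "temp": 19.0}',
]
events = [json.loads(line) for line in raw_events]

for event in events:
    print(get_path(event, "device.id"), get_path(event, "device.firmware.version"))
```

No schema change was needed to accommodate the new `firmware` field. Older records simply yield `None` for it.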

20. Explain how Snowflake manages data concurrency.

To manage data concurrency, Snowflake uses its multi-cluster architecture, where each virtual warehouse operates independently. This enables multiple queries to run simultaneously without a drop in the overall performance.

Additionally, Snowflake uses locking mechanisms and transaction isolation levels to ensure data integrity and consistency across all concurrent operations.

21. How do you configure and optimize Snowpipe for real-time data ingestion in Snowflake?

Snowpipe is Snowflake's service for continuously loading data as soon as it arrives in a cloud storage staging area.

To configure Snowpipe, you need to define a pipe object in Snowflake that specifies the source data files and the target table. To optimize it, you need to make sure that files are close to Snowflake’s recommended size (between 10 MB and 100 MB) and use auto-ingest, which triggers data loading automatically when new files are detected.

22. What are the implications of using large result sets in Snowflake?

Handling large result sets in Snowflake can lead to increased query execution times and higher compute costs.

To manage this effectively, data engineers can:

Reuse previously computed results through result set caching

Optimize query design to filter unnecessary data early in the process

Use approximate aggregation functions

Define clustering keys on very large tables so that queries scan fewer micro-partitions

23. What is the difference between using a COPY INTO command versus INSERT INTO for loading data into Snowflake?

Candidates should explain the following differences:

COPY INTO is best for the high-speed bulk loading of external data from files into a Snowflake table and for large volumes of data. It directly accesses files stored in cloud storage, like Amazon S3 or Google Cloud Storage.

INSERT INTO is used for loading data row-by-row or from one table to another within Snowflake. It’s suitable for smaller datasets or when the data is already in the database.

COPY INTO is generally much faster and more cost-effective for large data loads than INSERT INTO.

24. How do you automate Snowflake operations using its REST API?

Automating Snowflake operations using its REST API involves programmatically sending HTTP requests to perform a variety of tasks such as executing SQL commands, managing database objects, and monitoring performance.

Users can integrate these API calls into custom scripts or applications to automate data loading, user management, and resource monitoring, for example.

25. What are some common APIs used with Snowflake?

Snowflake supports several APIs that enable integration and automation. Examples include:

REST API, enabling users to automate administrative tasks, such as managing users, roles, and warehouses, as well as executing SQL queries

JDBC and ODBC drivers, enabling users to connect traditional client applications to Snowflake and query and manipulate data from them

Use our Creating REST API test to evaluate candidates’ ability to follow generally accepted REST API standards and guidelines.

30 extra Snowflake interview questions you can ask candidates

Need more ideas? Below, you’ll find 30 additional interview questions you can use to assess applicants’ Snowflake skills.

Explain the concept of Clustering Keys in Snowflake.

How can you optimize SQL queries in Snowflake for better performance?

How do you ensure that your SQL queries are cost-efficient in Snowflake?

What are the best practices for data loading into Snowflake?

How do you perform data transformations in Snowflake?

How do you monitor and manage virtual warehouse performance in Snowflake?

What strategies would you use to manage compute costs in Snowflake?

Can you explain the importance of Continuous Data Protection in Snowflake?

How would you implement data governance in Snowflake?

What tools and techniques do you use for error handling and debugging in Snowflake?

How would you handle large scale data migrations to Snowflake?

What are Materialized Views in Snowflake and how do they differ from standard views?

Describe how you integrate Snowflake with external data sources.

How does Snowflake integrate with ETL tools?

What BI tools have you integrated with Snowflake and how?

How do you implement role-based access control in Snowflake?

What steps would you take to secure sensitive data in Snowflake?

Share an experience where you optimized a Snowflake environment for better performance.

Describe a challenging problem you solved using Snowflake.

How have you used Snowflake's scalability features in a project?

Can you describe a project where Snowflake significantly impacted business outcomes?

What challenges do you foresee in scaling data operations in Snowflake in the coming years?

What are your considerations when setting up failover and disaster recovery in Snowflake?

How would you use Snowflake for real-time analytics?

Explain how to use caching in Snowflake to improve query performance.

How do you manage and troubleshoot warehouse skew in Snowflake?

Describe the process of configuring data retention policies in Snowflake.

Explain how Snowflake's query compilation works and its impact on performance.

Describe how to implement end-to-end encryption in a Snowflake environment.

Provide an example of using Snowflake for a data science project involving large-scale datasets.

You can also use our interview questions for DBAs, data engineers, or data analysts.


Use skills tests and structured interviews to hire the best data experts

To identify and hire the best data professionals, you need a robust recruitment funnel that lets you narrow down your list of candidates quickly and efficiently.

For this, you can use our Snowflake test, in combination with other skills tests. Then, you simply need to invite the best talent to an interview, where you ask them targeted Snowflake interview questions like the ones above to further assess their proficiency.

This skills-based approach to hiring yields better results than resume screening, according to the 85% of employers that are using it.

Sign up for a free live demo to chat with one of our team members and find out more about the benefits of skills-first hiring – or sign up for our Free forever plan to build your first assessment today.
