The expert’s guide to interview questions for data-driven decision-making

Your dashboard says traffic’s soaring. Your sales team says revenue’s tanking. Who do you trust?

Most interviews for analytical roles would never reveal whether a candidate can answer that question. That’s because too many interviewers focus on tool knowledge instead of true data judgment. But the best analysts don’t just crunch numbers. They challenge assumptions, filter out noise, and act with confidence when the numbers don’t line up.

We spoke with three leaders whose insights point to four principles your interview questions should test. Below, we break them down and show how starting with evaluations like our Data-Driven Decision-Making test and Working with Data test makes interviews more powerful.

What the experts focus on in interview questions for data-driven decision-making

Dwight Zähringer, Bill Berman, and Jonathan Nimmons have built teams that live and breathe data. They know what separates weak hires from strong ones, and how interviewers can tell them apart with the right questions.

Whether they’re hiring data engineers, data analysts, or other data-focused pros, they center their interviews on four principles:

Principle 1: Distinguishing signal from noise

Twenty years of running digital campaigns taught Perfect Afternoon founder Dwight Zähringer that most interview questions miss the mark. “They test theoretical knowledge instead of real analytical instincts,” he tells TestGorilla.

That’s why, instead of hypotheticals, Zähringer gives candidates messy real data and watches how they react. This reveals more than any rehearsed answer can.

In one interview, he shared a client’s Google Ads account showing $12,000 spent over three months at a 2.1% conversion rate. Weak candidates jump to surface-level fixes, such as A/B testing ad copy. Strong candidates ask sharper questions: “What’s the time lag between click and conversion? Are we tracking micro-conversions or just final sales?”

Zähringer applies the same test to organic data. When SEO traffic jumps 180% but leads fall 15%, weak candidates celebrate the traffic spike, while strong ones immediately want to segment by intent. “Ranking for ‘web design inspiration’ brings a very different visitor than ‘hire web designer Michigan.’ The candidates I keep always question the measurement framework first,” he says.

This mindset also shows up in lead quality. When Zähringer shows interviewees a HubSpot dashboard with 500 marketing-qualified leads but only 12 closed sales, weak candidates discuss ways to improve closing rates. Strong ones zoom out and ask whether the scoring model really predicts purchase intent or merely rewards engagement clicks.

Zähringer’s point is clear: Hire the wrong analyst, and you don’t just get bad answers from face-value analysis – you end up steering the business by illusion.

Questions to ask in interviews

You inherit a dataset showing record-breaking traffic but falling conversions. What’s your first move?

Your dashboard shows 500 marketing-qualified leads but only 12 sales. Where do you start?

A campaign’s click-through rate doubled, but revenue is flat. How do you audit the measurement framework?

Principle 2: Balancing data with human factors

Bill Berman, CEO and founder of Berman Leadership, has coached executives through billion-dollar pipeline decisions and watched seemingly perfect models fail spectacularly. The common thread? Leaders often forget that human factors can invalidate data.

“Most candidates focus on clean data stories,” Berman explains to TestGorilla. “But real leadership requires understanding when psychological and organizational dynamics invalidate even solid numbers.”

He recalls working with a biotech CEO who had compelling market research. On paper, the launch looked bulletproof. But in meetings, the sales team crossed their arms and spoke half-heartedly about the product. The numbers said one thing; the people said another. The CEO paused, rebuilt confidence inside the team, and only then went to market. That decision saved the launch.

This isn’t an isolated case, either. Berman points to pharmaceutical and financial services executives who see this tension daily: data models that appear perfect but collapse when human behavior fails to align with the assumptions.

“Leaders who can integrate psychological awareness with data analysis consistently outperform those who rely on numbers alone,” Berman highlights.

The takeaway: You can hire someone who knows every tool in the stack and still see them fail in practice if they don’t grasp the human side of data-driven decision-making.

Questions to ask in interviews

Tell me about a decision that looked right on paper but failed because of human factors. How did you adjust?

Imagine the data you have supports a decision your team resists. How do you proceed?

Say a past initiative failed despite strong supporting data. What would you do differently?

Principle 3: Adaptability when data surprises you

For Jonathan Nimmons, CEO of WriteSeen, the mark of a strong hire isn’t technical polish but a willingness to follow the truth in the data. That’s why his favorite interview question is so unconventional:

“Can you tell us a time when the data told a different story than your gut? Walk me through it backwards: Start with what you didn’t expect in the data, then explain the actions you took, and how it changed your thinking.”

Nimmons uses three key criteria to judge candidates’ answers:

Adaptability: How they adjusted when the data diverged from expectations

Learning transfer: How they took what they learned and applied it to future scenarios

Authenticity under pressure: Whether they can walk through the story backward without slipping into rehearsed answers

The best candidates, he says, “throw their hands up and admit they were wrong.” That humility signals growth. Instead of defending sunk costs, they show they can pivot with clarity.

Speaking to TestGorilla, Nimmons recalls one growth hire who got it exactly right.

The candidate said that they’d initially poured effort into the wrong strategy because it “felt right.” However, the data pointed elsewhere: user behavior revealed a completely different path. Walking through it backward, the candidate showed how they set their ego aside, adjusted course, and unlocked real traction.

Nimmons hired them, and that hire went on to design a decision-making system that WriteSeen still uses across content, product, and feature rollouts. “It works because it’s built on truth, not guessing,” he says.

That’s the philosophy at the heart of Nimmons’s interview approach. “Most decision-makers can’t let go of their bias,” he reflects. “They should be listening to the truth that’s right in front of them. Data is their ally, not their foe – and the best hires treat it that way.”

Questions to ask in interviews

Can you describe a time when data conflicted with your intuition? Walk me through it backward.

Describe a project where the data proved your strategy wrong. How did you adjust?

What’s a metric you stopped tracking, and why?

Tell me about a time you had to admit you were wrong. What did the data teach you?

Principle 4: Turning analysis into action

Even the smartest analysis falls flat when it’s buried in a spreadsheet and never acted upon. That’s where communication and alignment turn insights into outcomes.

On r/dataanalysis, one user put it plainly: “Data analysis is a lot about communication and solving puzzles – the technical skills are your utility belt, not the end goal.”

They’re not alone. Another comment from the same community points to a bigger risk:

“In my limited experience, data analysts are just there to give people data that confirms their biases and helps them get their bonuses. Data that goes against that is likely to be ignored.”

These voices point to the same conclusion: Great hires aren’t mere number-crunchers. They translate technical findings into decision-ready insights, using data visualization and plain language rather than jargon. They craft stories leaders can act on, not reports that end up ignored.

When analysis becomes action, the gap between insights and impact disappears.

Questions to ask in interviews

Before any analysis, how do you define your decision question and success criteria?

A stakeholder believes engagement dropped because of a competitor. How do you test that?

Walk me through a complex analysis as you would explain it to a senior leader with no technical background.

Skills-based evaluations: The key to even stronger interviews

The leaders we spoke to don’t hire people who parrot metrics or polish dashboards. They hire people who challenge assumptions, admit when the data proves them wrong, and bring others along when the decision becomes difficult. That’s the difference between a candidate who looks good on paper and one who drives results in the real world.

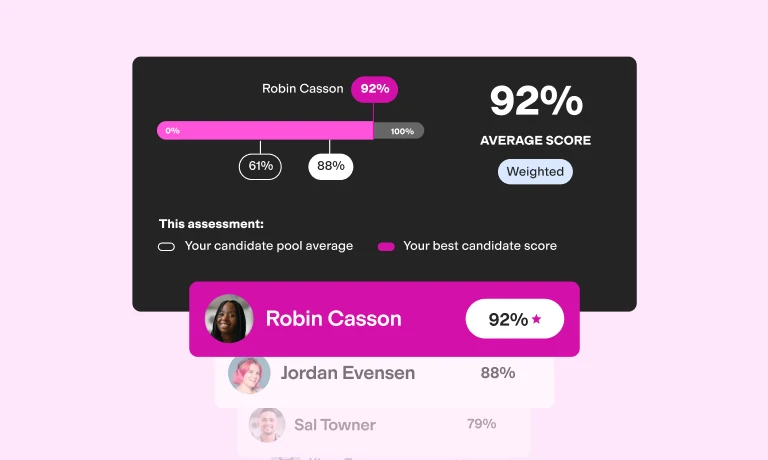

And you don’t need to leave that to chance. The most effective hiring teams test data skills first and conduct interviews second.

Skills-based evaluations surface candidates who already show analytical rigor, adaptability, and communication under pressure. That means your interview can focus on judgment – how they think when the numbers don’t behave – instead of basic tool knowledge.

TestGorilla makes this process easy. You can start with our Data-Driven Decision-Making test and the Working with Data test to identify candidates who can:

Gather and organize data

Perform data analysis

Critically evaluate data decisions

Communicate findings in a clear, compelling manner

Contribute to a company culture that values data and data analysis

But you’ll want to go beyond simply assessing skills listed in your data analyst or data engineer job description. Pair these two tests with others, such as personality and cognitive ability tests, for a comprehensive assessment of traits relevant to your open role.

From there, your interview questions can probe the reasoning behind candidates’ test results.

Together, testing and structured interviews cut bias, save time, and give you the confidence that your next hire won’t just crunch numbers but will actually drive outcomes.

Ready to find data-driven thinkers who can turn analysis into action? Start your free plan with TestGorilla or book a demo today.
