Not so long ago, recruiters were manually reviewing hundreds of job applications and reading resumes one by one.

It’s no wonder they were at their wits’ end. Recruiters have been quoted saying they’ve experienced burnout more than once in their careers, and describing the hiring process as a repetitive doom loop.

So when AI entered the picture, it felt like a saving grace. AI resume scanning tools promised to cut hours of manual work – all while surfacing the best talent. That’s what made them so irresistible to experts like Fatima Malik, Head of Recruitment at Career Pro Recruitment Agency.

Fatima jumped on the bandwagon. “Early on, we saw a clear benefit,” she says. “Time-to-shortlist dropped by 40% for roles like software developers and customer support leads, where we’d receive 300+ applications.”

Unfortunately, it didn’t take long for the cracks to show. Fatima recalls how the “AI kept deprioritising qualified female candidates in engineering roles, likely because it was trained on historical hiring data that skewed male.”

Yes, the seemingly “objective” AI resume scanner, built into most recruitment platforms and applicant tracking systems, was prone to bias – among other issues.

So how did Fatima and other industry experts tackle these problems? In this tell-all, they open up about what went wrong, what changed, and how AI resume screening is finally helping them with faster and fairer hiring.

The rude awakening: Algorithms aren't perfect

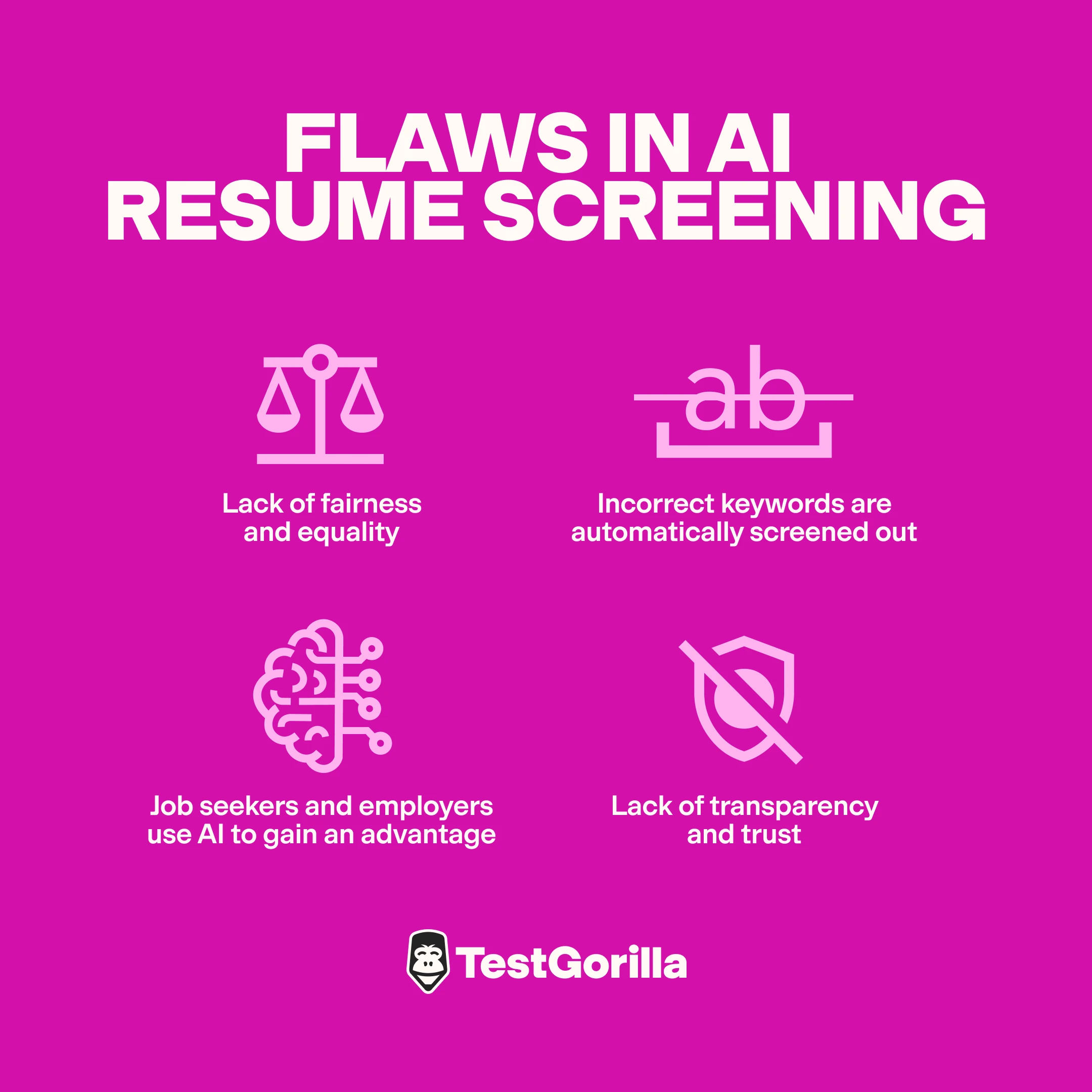

There's no denying that AI resume screening has turned hours of manual work into minutes. But it's not without flaws.

Here are some key issues that have cropped up over the years.

The Amazon wakeup call

In 2016, the White House Office of Science and Technology Policy (OSTP) released a landmark report exploring how data-driven technologies affect fairness and equality across multiple areas, including employment.

The report warned that “because [algorithmic systems and automations] are built by humans and rely on imperfect data, these algorithmic systems may also be based on flawed judgments and assumptions that perpetuate bias as well.”

Lo and behold, two years later, those fears materialized. Reports discovered Amazon’s AI resume screening systematically penalized resumes that included the word “women’s” – e.g., “women’s chess club captain” – and downgraded graduates of all-women’s colleges.

Having been trained on 10 years of resumes submitted to Amazon, most of which came from men, the AI tool favored male candidates for tech roles.

Unsurprisingly, Amazon decommissioned it. But the story shook the confidence of employers and AI specialists alike, prompting further discussion of how bias can creep into AI hiring tools.

AI policy expert Miranda Bogen writes in Harvard Business Review: “At first glance, it might seem natural for screening tools to model past hiring decisions. But those decisions often reflect the very patterns many employers are actively trying to change through diversity and inclusion initiatives.”

The keyword trap

Most AI resume screening tools work like search engines. You upload a job description, and the tools scan resumes to find keywords that match.

For example, if your role description asks for accounting skills, AI tools use a technique called natural language processing (NLP) to detect resumes that include the word “accounting.” Resumes with the “right” terms are ranked higher, and resumes that don’t explicitly contain the required keywords are often automatically screened out.

Sasha Berson, Co-Founder and Chief Growth Executive at Grow Law, warns that this is a problem because “when the screening criteria are too rigid or opaque [...] you risk missing great candidates who don’t perfectly match keyword patterns.”

Research supports this concern. One study found that these systems struggled to “precisely detect and extract relevant skills” in resumes and job posts.

For instance, the keyword-matching tool might scan a job description for a clinical data manager, only pick up the phrase “data manager,” and shortlist profiles with no clinical experience. Here, it’s looking for the wrong thing altogether.

Conversely, it might be looking for the right thing, but there’s a gap in domain knowledge. For example, when looking for the keyword “data visualization,” the tool might reject a highly skilled candidate who lists Tableau and Power BI on their resume but doesn’t explicitly write “data visualization.”
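Both failure modes are easy to reproduce. The snippet below is a minimal sketch of verbatim keyword matching, not the logic of any particular product; the function name `keyword_screen` and both sample resumes are made up for illustration.

```python
import re

def keyword_screen(resume_text: str, required_keywords: list[str]) -> bool:
    """Pass a resume only if every required keyword appears verbatim."""
    text = resume_text.lower()
    return all(
        re.search(r"\b" + re.escape(kw.lower()) + r"\b", text)
        for kw in required_keywords
    )

# Hypothetical resumes, invented for this example.
analyst = "Built executive dashboards in Tableau and Power BI for a retail chain."
retail_dm = "Data manager for a retail loyalty program; no healthcare background."

# A skilled candidate is rejected because the exact phrase is missing.
print(keyword_screen(analyst, ["data visualization"]))  # False

# A candidate with no clinical experience passes on "data manager" alone.
print(keyword_screen(retail_dm, ["data manager"]))  # True
```

The matcher does exactly what it was told, which is the problem: it has no way of knowing that Tableau implies data visualization, or that “clinical” was the part of the job title that mattered.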

The same goes for LinkedIn’s Boolean search. It relies on keyword matching logic to screen candidates’ profiles (which, for practical purposes, are essentially public resumes). Lauren Salton, TestGorilla’s talent acquisition expert, explains, “It gives you keywords… it doesn’t tell you if that person has that experience now or if they did it 20 years ago. Somewhere on their LinkedIn profile, they mention that they’ve got Python skills – how does that help me?”

Essentially, keyword-based models – embedded in most resume scanners and applicant tracking systems – don’t always understand context. They’re good at finding words, but even the slightest nuance could cost you some really solid candidates.

The resume arms race

As AI resume scanners were gaining clout, generative AI tools like ChatGPT crashed the party. Candidates can now upload job descriptions to genAI, which can either tell them which keywords to focus on or write full-length keyword-optimized resumes for them.

Firstly, this makes it much harder for both recruiters and their AI screening assistants to determine who’s capable and who’s simply gaming the system with an optimal-looking resume.

Secondly, it completely removes humans from the equation. In our discussion, Andrew Conlon, Founder of fitthejob.com, rightly points out the irony:

“While many businesses are increasingly using AI to screen resumes, millions of candidates are also using AI to write their resumes. This has introduced a massive machine-to-machine feedback loop, where employers and job seekers are no longer communicating with each other and are leaving the entire conversation to the bots.”

Another issue is that some genAI tools have been found to fabricate or exaggerate details on candidates’ resumes just to match role descriptions. Without human intuition, it’s harder to detect these lies.

For instance, a human reviewer might spot a mismatch between “managed a team of ten” in one section and “individual contributor” in another, but AI algorithms might just see the words “managed” and “team” and wave the resume through without questioning.

The transparency and trust problem

A big concern with AI resume scanners is that they often operate as “black boxes” – systems whose internal workings are hidden or hard to understand. Recruiters can’t explain how or why AI tools make the decisions they do. This opacity has serious legal and ethical implications, warns Michael Weiss, Partner at Law Offices of Lerner & Weiss:

“When an AI makes hiring decisions, employers often can't explain the specific reasoning behind rejections, which is legally dangerous. I always tell clients that if you can't articulate exactly why someone wasn't hired in terms that would satisfy an employment attorney, you're setting yourself up for expensive litigation.”

This lack of transparency has also led to a trust issue with candidates. Pew surveyed 11,000 American adults and found that seven in ten were against AI making hiring decisions, while 66% claimed they wouldn’t even apply for jobs if employers were using AI in their hiring process.

Are employers cutting back on AI resume screening?

TestGorilla’s 2025 State of Skills-Based Hiring report found that only 67% of employers integrated AI into their hiring process in 2024 – a 17% drop from the previous year. Today, only about 59% use it to screen resumes.

With everything we’ve uncovered, it’s easy to see why employers are losing confidence in AI.

But reducing AI use isn’t the best idea, and Matt Collingwood, Managing Director of VIQU IT recruitment, gives one example of why.

“We can get sometimes 1000s of applications for a non-specialist role. It would be impossible for a human recruiter to review all of these applications in the short time frame they have to return back to the client.”

Pankaj Khurana, VP Technology & Consulting at Rocket, echoes this sentiment: “[AI] gives our team time back in their day to stop doing repetitive filtering, and spend more time engaging candidates and building relationships. Giving AI a little transparency and guardrails to work with, allows teams to work faster, but be fair in how they treat candidates.”

The next evolution of AI resume screening

The smartest employers aren’t ditching AI altogether. Some are thinking more carefully about the technology itself, while others are tweaking the way it fits into their hiring process. Here’s how the second act of AI resume screening is taking shape.

Blinding the bias

One study found that blind recruitment – “the act of eradicating certain characteristics from a resume […], for example, name, age, sex, instruction, and even long periods of experience” – is a “proper solution to minimize bias in the recruitment and selection process.”

And industry experts like Guillermo Triana, Principal Consultant and Founder at PEO-Marketplace.com, seem to agree:

“Companies should also consider applying simple preconditions to their data hygiene. Deleting less relevant information such as age or graduation date from candidate profiles, for example, can reduce algorithmic bias ‘leakage’ by up to 40% before a resume parser has even analyzed the info,” Guillermo says.

While this is a great solution, I don’t think it’s perfect.

Recent research looked at what happens when you remove all gender-related terms from a resume – not just the words “male” and “female,” but also anything predictive of gender (words like “salesman,” “waitress,” or even gender-typical hobbies). Findings revealed that while this blinding technique reduced bias, it also stripped the AI resume scanner of other information, weakening the algorithm’s performance.

Does blinding have some merit? Yes, 100% – but you can’t always remove every single identifying detail. What we really need is AI resume screening tools that can strengthen decisions using more than just blinding.
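To make the idea concrete, here is a rough sketch of what a blinding step could look like before a resume reaches a scorer. It is an illustration under simplifying assumptions, not how any specific tool works: the word lists are deliberately tiny, and `blind_resume`, `GENDERED_TERMS`, and the sample text are all hypothetical.

```python
import re

# Hypothetical, deliberately small lookup table; a real list would be far longer.
GENDERED_TERMS = {
    "salesman": "salesperson",
    "saleswoman": "salesperson",
    "waitress": "server",
    "waiter": "server",
}
PRONOUNS = r"\b(?:he|she|him|her|his|hers)\b"
EMAIL = r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"

def blind_resume(text: str) -> str:
    """Mask emails and pronouns, and swap gendered job titles for neutral ones."""
    text = re.sub(EMAIL, "[email]", text)
    text = re.sub(PRONOUNS, "[pronoun]", text, flags=re.IGNORECASE)
    for term, neutral in GENDERED_TERMS.items():
        text = re.sub(rf"\b{term}\b", neutral, text, flags=re.IGNORECASE)
    return text

sample = "Contact: jane.doe@example.com. She worked as a waitress, then as a saleswoman."
print(blind_resume(sample))
# Contact: [email]. [pronoun] worked as a server, then as a salesperson.
```

Even this toy version shows the trade-off: every replacement removes a potential bias signal, but it also removes text the scorer might otherwise have used, and a fixed word list will never catch every detail that hints at gender.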

Swapping keywords for fully fleshed-out job criteria

New AI resume screening software screens applicants against fully fleshed-out skills-based job criteria rather than keywords alone. Importantly, the best tools will let you add, delete, and edit these job criteria before any resume screening even begins.

How’s this better than previous AI resume scanners?

Where traditional tools might scan resumes for the keyword “data manager” for a clinical data manager role, and suggest any candidates with “data manager” in their resume – regardless of their sector – a modern AI resume scanner recognizes the difference or at least lets you manually emphasize the clinical context.

On top of this, scoring resumes on job criteria, rather than keywords, leaves room for nuance. If a candidate has significant data management experience but hasn’t used the exact keywords you’re looking for, they still get a fair chance.

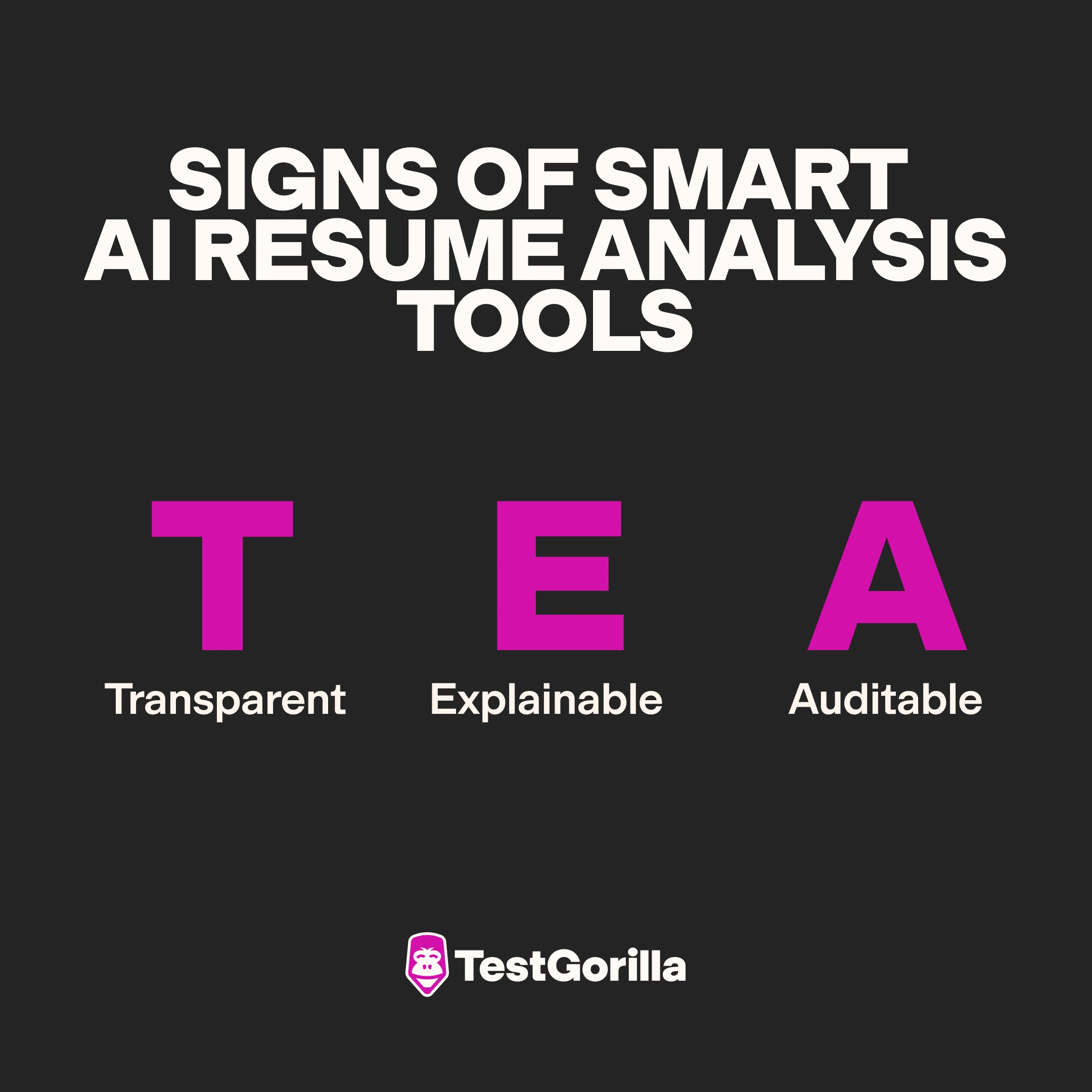

AI resume scoring that spills the TEA

Traditional AI resume analysis tools scan a resume and spit out a yes or no, with no information about how they reach their decision.

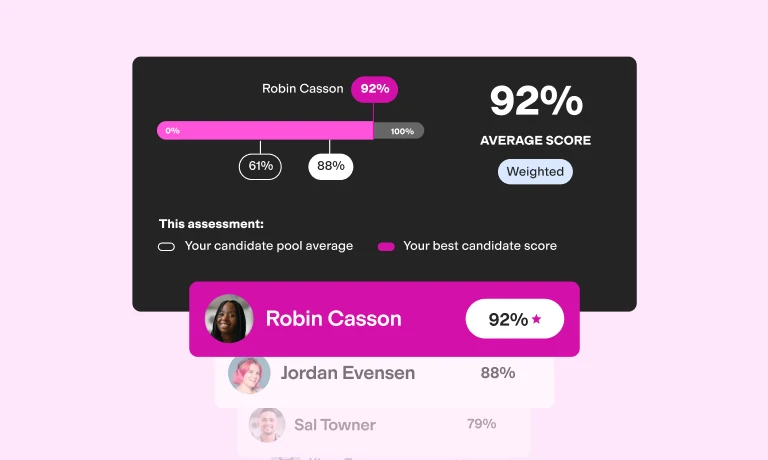

The smarter generation of AI tools doesn’t stop at screening. They score each resume based on how closely it aligns with the job criteria you set.

This marks the end of dodgy and opaque AI resume scoring – it spills the TEA, meaning it’s transparent, explainable, and auditable.

It’s transparent

In our conversation, Guillermo tells TestGorilla, “Companies that publicly disclose what data their sourcing tools take into consideration (especially when it comes to AI model weights), and how that decision making is balanced, will set the standard for others… Fairness will likely be defined not by getting all bias out of AI, but by owning what remains in full view.”

And that’s exactly what AI resume scorers do. Take TestGorilla’s AI resume scoring tool as an example. It evaluates each resume against clear, role-specific criteria that you can set, and gives it a score of 0-5 for each area. The process is completely transparent, making it more likely that candidates will trust it.

It’s explainable

Best-in-class AI resume scoring tools also share why a score was given, and let you adjust scores if you don’t agree with them, or if you feel they were missing some context the AI tool didn’t catch.

This means AI resume scoring tools aren’t black boxes in the same way as earlier models – you can see the logic behind every decision in plain English, which you can use when candidates ask for feedback or in a court of law, even if you don’t understand the technical coding behind the tool.
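As a rough illustration, a score that carries its own explanation and accepts a human override might be modeled like this. It is a sketch only: the counting rule is an arbitrary stand-in for whatever model a real tool uses, and `CriterionScore`, `score_criterion`, and the evidence terms are invented names, not TestGorilla’s implementation.

```python
from dataclasses import dataclass, field

@dataclass
class CriterionScore:
    criterion: str
    score: int   # 0-5 scale
    reason: str  # plain-English explanation of the score
    history: list[str] = field(default_factory=list)  # record of manual changes

    def override(self, new_score: int, reviewer: str, note: str) -> None:
        """Let a human adjust the score and log who changed it and why."""
        if not 0 <= new_score <= 5:
            raise ValueError("score must be between 0 and 5")
        self.history.append(f"{reviewer}: {self.score} -> {new_score} ({note})")
        self.score = new_score

def score_criterion(resume_text: str, criterion: str,
                    evidence_terms: list[str]) -> CriterionScore:
    """Toy rule (an assumption): two points per piece of evidence, capped at 5."""
    text = resume_text.lower()
    found = [term for term in evidence_terms if term.lower() in text]
    score = min(5, 2 * len(found))
    reason = f"Found evidence: {', '.join(found)}" if found else "No listed evidence found"
    return CriterionScore(criterion, score, reason)

resume = "Built dashboards in Tableau and Power BI."
result = score_criterion(resume, "Data visualization",
                         ["data visualization", "Tableau", "Power BI"])
print(result.score, "-", result.reason)  # 4 - Found evidence: Tableau, Power BI

result.override(5, reviewer="recruiter", note="portfolio shows advanced work")
print(result.history)  # ['recruiter: 4 -> 5 (portfolio shows advanced work)']
```

The useful part is the shape of the record rather than the scoring rule: every score travels with a reason a recruiter can read back to a candidate, and every manual change leaves a trace.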

It’s auditable

The latest AI resume scoring software automatically strips personal details like names, emails, pronouns, photos, and locations before scoring resumes – a technique that we’ve already seen works to mitigate the risk of bias.

But it doesn’t stop there; good platforms conduct regular fairness checks of their own algorithms to prevent bias proactively. Finally, they also let you test-drive the scoring system and make adjustments using sample resumes before reviewing actual resumes.
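What a fairness check involves varies by platform, and none of the tools above are confirmed to use the method shown here. One widely used starting point is to compare shortlisting rates across groups: under the “four-fifths” rule of thumb from US employment guidelines, a ratio below 0.8 between the lowest and highest rate is treated as a signal worth investigating. The sketch below assumes group labels are collected separately from the blinded resumes, and the numbers are made up.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to shortlisted / applied."""
    return {
        group: shortlisted / applied
        for group, (shortlisted, applied) in outcomes.items()
    }

def impact_ratio(outcomes: dict[str, tuple[int, int]]) -> float:
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(outcomes)
    return min(rates.values()) / max(rates.values())

# Made-up audit data: (shortlisted, applied) per group.
outcomes = {"group_a": (30, 100), "group_b": (18, 100)}

ratio = impact_ratio(outcomes)
print(round(ratio, 2))  # 0.6
print("investigate" if ratio < 0.8 else "within rule of thumb")  # investigate
```

A low ratio doesn’t prove the tool is biased, and a high one doesn’t prove it’s fair, but running a check like this on a schedule gives you a number to track and a reason to look closer.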

Balancing the AI and human equation

Even with smarter AI scoring systems and bias-blinding techniques, most of the experts we heard from agree that humans still need to stay in the loop. Allan Hou, Sales Director at TSL Australia, explains:

“Human judgement catches the intangibles that make someone brilliant at their job,” Allan says. He cites an example of how the AI tools he used continued “to reject candidates with non-linear career trajectories.”

It turns out that 40% of the company’s “absolute best performers” had non-linear career backgrounds, so missing these folks would be a huge loss for Allan’s business.

Guillermo adds that HR professionals’ “qualitative insight into a candidate’s context” is where resume bots can’t replace humans, and this is what justifies these pros’ salaries or billable hour rates in today’s climate.

The point is, even the most advanced AI resume screening tools today don’t necessarily capture context and nuance. That’s why, at TestGorilla, we stress that humans must sense-check and ultimately have a final say in candidate selections.

Proceeding with caution

Resume screeners – both AI and human – can only take you so far in making the right candidate selections because the resume itself is an inherently flawed tool.

According to one survey, 70% of candidates admit to lying on their resumes. Even when they don’t, it’s almost impossible for humans or AI to gauge someone’s real-life capabilities (and especially their soft skills) with 100% certainty from a resume alone. This explains why 86% of US employers told us they struggle with using resumes to screen candidates.

So, how can you strengthen your hiring process knowing this?

The answer is to go beyond resumes. Talent assessments let you objectively measure candidates’ hard and soft skills, cognitive abilities, personality traits, and even their values – closing gaps that both AI and humans can miss.

Resumes have their place, and there are better ways of screening them now that we’ve evolved past keyword filters. But it’s crucial to use resumes as just one part of a holistic, skills-based assessment when making screening decisions.

The future of AI is context, not keywords

The next phase of AI resume screening is about leaving buzzwords behind and understanding context.

Smarter AI resume scoring tools, transparent logic, skills testing, and a touch of human insight can bridge the gaps that once led to bias, poor screening decisions, and lost candidate trust.

Contributors

Allan Hou, Sales Director, TSL Australia

Andrew Conlon, Founder/Product Engineer/Software Developer, fitthejob.com

Fatima Malik, Head of Recruitment, Career Pro Recruitment Agency (UAE)

Guillermo Triana, Principal Consultant, Founder & CEO, PEO-Marketplace.com

Lauren Salton, Talent Acquisition Specialist, TestGorilla

Matt Collingwood, Managing Director, VIQU IT recruitment

Michael Weiss, Partner, Law Offices of Lerner & Weiss

Pankaj Khurana, VP Technology & Consulting, Rocket

Sasha Berson, Co-Founder and Chief Growth Executive, Grow Law

Ivan Vislavskiy, CEO and Co-founder, Comrade Digital Marketing Agency
