Years ago, Dr. Larry Stybel, a retained search consultant specializing in CEOs and board directors, was tasked with finding a CEO for a non-profit company. Since the client couldn’t afford his fee, Larry decided to interview candidates over the phone instead of traveling to meet them in person.

"I sent four candidates I thought the selection committee would want to see," Larry recalls. "But Candidate A was my top choice. Sure enough, A was offered the CEO job."

Later, Larry flew to meet the newly appointed CEO, who wanted help with finding a new CFO for the company. Little did Larry know what he’d see and how it would change his approach to sourcing forever.

"I walked into A's office and he was wearing dark glasses, had a seeing eye dog, and a cane with a red tip! He was legally blind! I realized that had I physically seen him in person or online, I never would have recommended him as a candidate," Larry admits regretfully.

"Because of this experience, it is my policy to conduct initial interviews over the phone and not in person or online. The phone makes me blind to physical imperfections, skin color, and weight," he adds.

When we talk about bias in hiring, many conversations circle around ceilings – glass ceilings that hold women and minorities back from leadership positions, or paper ceilings that block non-degreed candidates from advancing even if they’re talented.

But the truth is, bias begins far earlier. "It sits like a bouncer outside your front door, making arbitrary decisions about who's let in, who gets the chance to prove themselves," explains Yashna Wahal, an HR and hiring expert with 10+ years’ experience.

And if someone as experienced as Dr. Stybel can fall prey to unconscious biases, anyone can. So the question is, how do we fix it?

The first step is recognizing how bias presents itself in sourcing, even where you least expect it. The next is knowing how to reduce it. Let’s unpack!

The usual suspects: Biases you know

Our latest State of Skills-Based Hiring report found that 42% of job seekers experienced bias in hiring in the last year, double what we saw two years ago. To uncover what's causing this, I asked industry experts and business leaders:

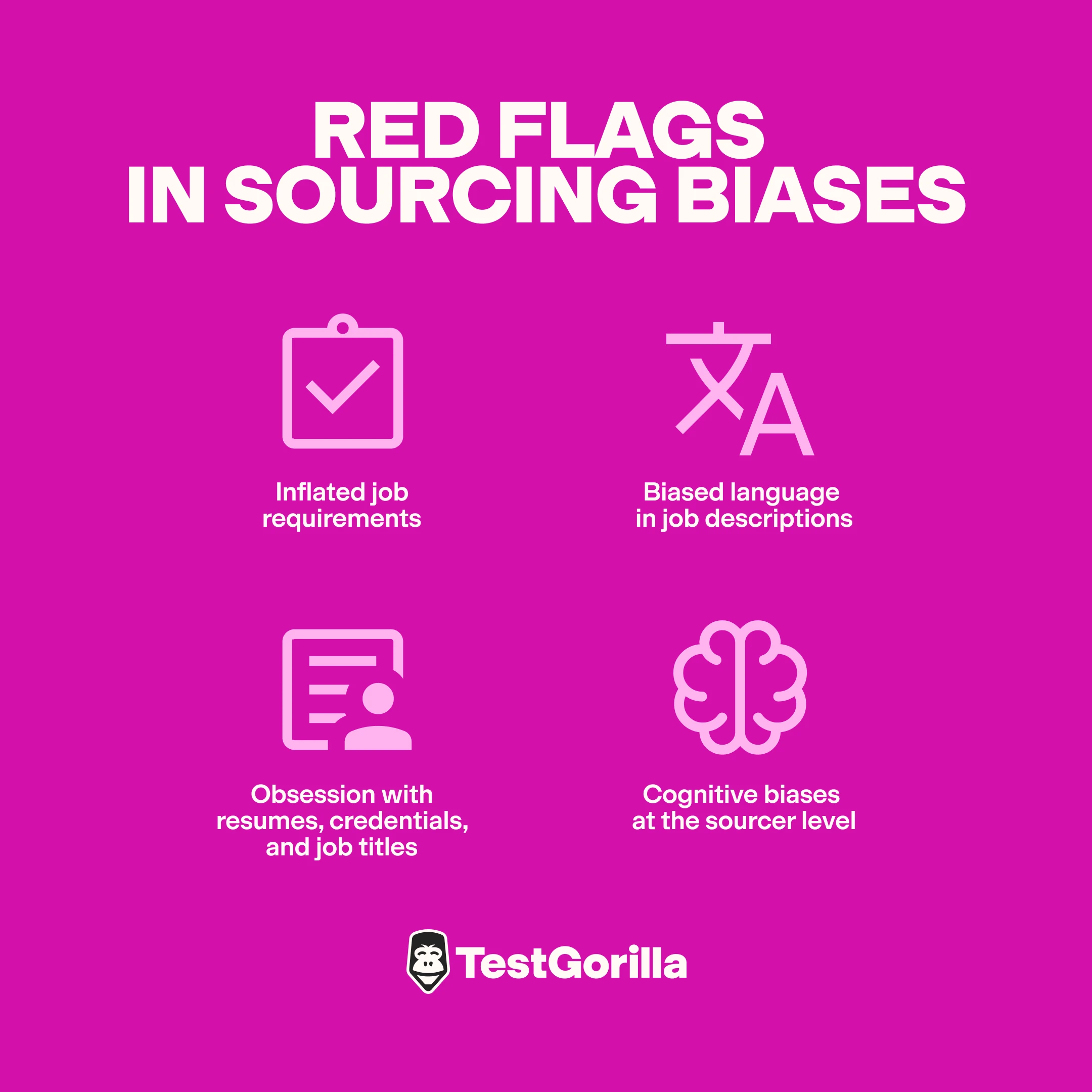

What are the biggest red flags when it comes to sourcing biases?

Inflated job requirements

Lindy Hoyt is a Professional in Human Resources (PHR), and Founder and Principal HR Advisor at People Advisor. She shared that "job descriptions that include unnecessary qualifications [or] overly specific industry experience can unintentionally discourage qualified candidates from underrepresented backgrounds from applying.”

It’s true – things like degree inflation can lead to some of the most common hiring biases we know. Our own TA expert Lauren Salton explains:

"When you list loads of must-haves, female candidates are more likely to look at them and rule themselves out because they don’t meet them all. Men are more likely to go, ‘Oh, I meet eight out of ten of those.’ So, actually, by putting, say, 15 must-haves, you're not helping the candidate pool that are going to rule themselves out."

Biased language in job descriptions

Whether you’re crafting a job description for an open position, writing about your company on your careers page, or putting out a LinkedIn post, the words you use can signal (intentionally or not) who "belongs" and who doesn’t.

I spoke to Shaun Bettman, CEO and Chief Mortgage Broker at Eden Emerald Mortgages, who explained how this plays out in practice.

"In recruitment, the use of job descriptions that require traits such as being assertive or aggressive may discriminate against other genders or different cultures, as they hire different people who may exhibit leadership in other ways. Such practices propagate discrimination and a lack of opportunities."

Obsession with resumes, credentials, and job titles

According to Yashna, "More often than not, sourcers rely on people’s resumes or LinkedIn profiles to make selection decisions. … What they’re really looking for, though, is what you majored in, which school you went to, your job title, and what impressive companies you’ve worked for. They’re rarely scrolling down your profile to see what skills you’ve listed."

Shaun explains why this is a problem.

"Excessive reliance on a narrow range of criteria, e.g., excessive emphasis on education during hiring, is one of the [biggest] red flags. This may rule out the entry of skilled people with varied backgrounds who may have acquired experience in unconventional ways but do not have a degree from a renowned university."

Cognitive biases at the sourcer level

In a recent article for Business Insider, Sophie O’Brien, the Founder of Pollen Careers, gives a real-life example of an in-group bias – the tendency for people to favor members of one's own group or community. "In my old job… I'd be looking at CVs, and then somebody would look over my shoulder and be like, 'Oh, they're an Arsenal supporter, get them in.'"

Picking someone based on their favorite football team seems like an absurd reason to add them to your talent pool, but it happens all the time. And often, sourcers don’t even know they’re making biased choices, because it’s easy to justify them in other ways, just as it would have been easy to justify rejecting a blind candidate on the grounds of imagined limitations.

The silent assassins: Biases you don't even think about

All these red flags remind us how easy it is to build walls around talent without meaning to. But there are even subtler barriers that can go entirely unnoticed and diminish any strides you think you're making in fair talent sourcing.

To understand which subtle sourcing biases often slip under the radar, we asked the experts:

What should sourcing teams watch out for when they’re trying to reduce bias?

Narrow selection of sourcing methods

The experts say that the very platforms you source from can become a barrier to diversity.

Lindy, for example, told us "bias can also be reinforced when organizations rely too heavily on a narrow set of sourcing channels, such as the same job boards, the same professional networks, or the same universities. This approach often results in candidate pools that reflect existing demographics rather than expanding them."

Yashna gives an example of what that looks like in action: "Imagine a startup that only sources from AngelList because that’s where they think all the cool startup talent hangs out. Sure, they might find some young tech talent already plugged into the ecosystem, but they’re unlikely to find older candidates, generalists, or people from outside the US.”

This can happen offline, too – for example, when top employers source talent for entry-level jobs only from Ivy League schools.

"You go to these schools, and sure, you'll meet a bunch of highly intelligent and motivated candidates. But a lot of them come from similar backgrounds – big cities, elite families, etc. So by doing this, you're effectively shutting out a massive number of skilled and smart entry-level talent from other backgrounds."

Lack of unconscious bias training

Some companies skimp on training their staff in sourcing biases. "You can't expect team members to actively avoid bias if they don't know what it means or how it manifests in their decisions," Yashna says.

Lindy agrees: "If hiring managers and HR teams are not conscious of the biases they may hold, whether related to background, education, or personality, these can unintentionally influence sourcing decisions from the very beginning."


The double agents: Biases masquerading as best practices

In my opinion, the worst of all biases are the ones you push for and invest in without even realizing they're reinforcing bias in your sourcing process.

To learn more about these, we asked the experts: What sourcing "best practices" can actually backfire on employers?

Leaning blindly on employee referral programs

According to Lindy, employee referral programs that don't conduct structured and objective checks are "at a risk of favoritism or unconscious bias.” She explains: “Over time, unchecked referral practices can unintentionally limit diversity by perpetuating existing demographic patterns within the organization."

Yashna explains that with unchecked referral practices, you're not just hampering diversity – you could also be missing out on skills. “When you're in a rush to fill a role when it opens up, you might blindly pick a referred candidate over a more skilled one without having checked if they're as capable," she warns.

Measuring the wrong kind of "culture fit"

“Culture fit” is one of those recruiting buzzwords that started off with a noble intention but ended up being misunderstood and misused.

Employers should be looking for candidates who'll add to their company's culture, bring fresh perspectives, and align with its core values as a business. Instead, many are just looking for more of the same.

According to Shaun, sourcers "tend to be biased toward those people who share common origins or character traits with the existing leaders." Yashna has seen this too: "I've seen people getting rejected for vague reasons like they weren't 'outgoing enough for this team,' or 'we just didn't click.'"

Relying solely on personality traits when considering a culture-match is a slippery slope. It can be unfair and shut talented individuals out just because they're different.

(Mis)incentivizing sourcing teams

Although it might be less common, misincentivizing sourcing teams is another way of perpetuating bias. Sarah Doughty highlights it as one of the most powerful but hard-to-see ways in which biases affect sourcing:

"In some ways, the downfall of sourcing began when larger companies started managing it like a sales pipeline. When managed like this, sourcers and recruiters are judged on superficial metrics that don't really relate to great hiring."

When sourcing is run like a sales pipeline, recruiters are likely to focus on volume over depth. If they're assessed on KPIs like how many leads (candidates) they add to the pool rather than the quality and diversity of their pool, they're bound to rely on easy-to-reach and familiar job boards, college names, and so on.

Viewing talent acquisition as an administrative department

A recent report from the Josh Bersin Company found that only about a third of TA leaders feel like strategic partners in their firms. One leader even said they're considered a "low-cost fulfillment center."

When sourcers aren't encouraged to consider strategy, develop market knowledge, and think about company goals, they default to the path of least resistance, like sourcing candidates who look like past hires or relying on employee referrals.

Relying blindly on AI for bias-free sourcing

While AI excels at quick social media sweeps, keyword matching, and more, it's not entirely free from bias. In fact, some AI tools have been shown to perpetuate biases that already exist in the source data they use for decision-making.

Pooja Kothari, Esq., Founder and CEO of Boundless Awareness, believes that AI "takes on and reiterates these [human] biases" if left unchecked. She explains: “if the technology is fed on oppression, it is oppressive.”

How to disarm biases before they creep into your processes

There’s no Ctrl+Z when it comes to biases in sourcing. Once you’ve made unfair selections, they stick with you, so it’s best to prevent them right from the outset.

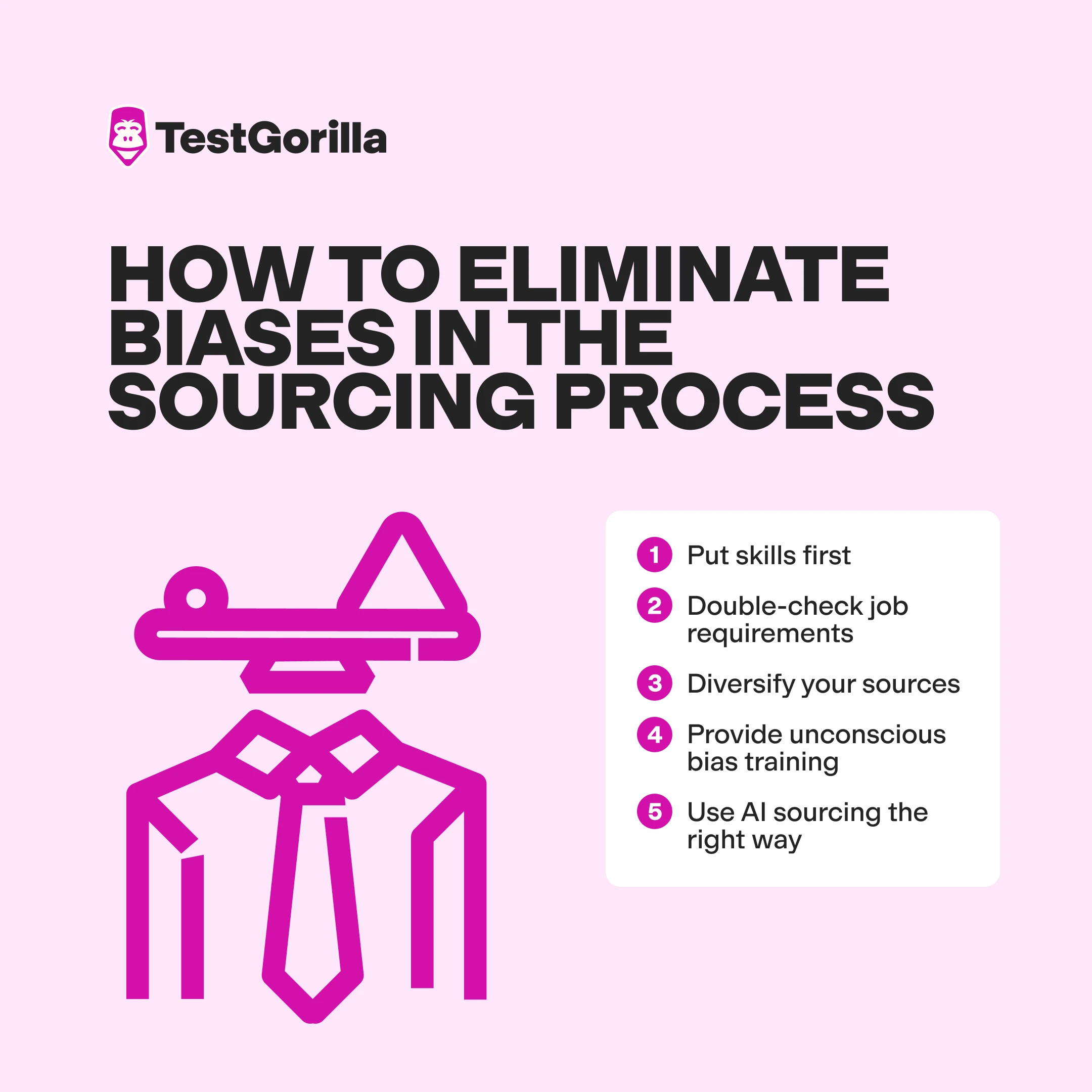

Finally, we asked experts: How can we eliminate biases in the sourcing process?

Here’s what they had to say.

1. Put skills first

Testing candidates’ skills before reading resumes, scanning social media profiles, or acting on referrals can ensure you’re making fair, data-based decisions about who you’re letting into your talent pool.

According to Tracy Thornton, Chief People Officer at the DDC Group and CIPD fellow, "Utilizing tools such as skills assessments or job/task simulations provides a more objective approach to enable good hiring decisions, moving away from a reliance on a gut feeling or the unconscious bias we know can still happen on resume review alone."

Ben Rose, Founder and CEO of CashbackHQ, explains how he’s already seeing the benefits of this.

"We started to focus on genuine activities, testing, and problem-solving. This helped us identify hidden gems that we might have missed otherwise. It's not just about hiring better people when you use skills-based sourcing; it's also about getting rid of bias and making teams more diverse."

While online skills testing and task simulations are great for a handful of active candidates, they're not practical when you’re sourcing a large number of passive candidates. In these cases, pre-vetted pools like TestGorilla sourcing, which hold millions of skills-tested candidates, offer a way to source quickly and objectively.

2. Double-check job requirements

Yashna advises removing gendered or biased language from your role requirements to ensure you’re staying inclusive. "Pop your JDs into ChatGPT or similar tools and ask it to check for biased language.”

Pooja suggests employers move the focus away from narrow criteria like credentials. She asks her clients to write two sets of job requirements – “one that mandates degrees and one that focuses on what the person can bring to the table in lieu of a degree.”

This simple shift does two things. It helps sourcing teams look beyond degrees, and it gets clients to open their minds to candidates who gained their skills through alternative routes.

3. Diversify your sources

Chris Kirksey, founder of Direction.com, talks about using multiple sources for acquiring talent.

"Going beyond the usual and expanding your talent pool helps in sourcing high-quality candidates who are often overlooked," he says. According to Chris, tapping into specialized communities and networks, and partnering with coding boot camps and local talent pipelines, boosted his success rates by 30%.

Meanwhile, Wynter Johnson, Founder and CEO at Caily, has started sourcing on an unlikely platform. "One area where we've started looking for talent with surprising amounts of success is TikTok. … The platform is a surprisingly good source for experts in many fields, including at-home care for people with special needs.”

4. Provide unconscious bias training

"Creating awareness and equipping teams with practical tools to address these biases is a critical first step toward equitable hiring," explains Lindy. "Regular bias-awareness training, consistent interview process and diverse sourcing channels help prevent unintentional barriers to equitable hiring."

Awareness is the first step to preventing unconscious bias. But sourcing leaders and managers need to actively step in to support their teams. Sarah, for instance, says she’s found success this way: "I give my team a lot of support to make the push for the candidate with the non-traditional title."

5. Use AI sourcing the right way

Yashna explains that while AI can bring its own biases into sourcing, it's still one of the most promising routes to sourcing fairly and at scale, if you incorporate three things: the right tools, a variety of data, and human checks.

Shaun explains that, to prevent bias in AI-driven sourcing, “it’s essential to make sure that the data which is used to run the tools is bias-free."

He shared an example from the mortgage industry, where “if AI is judging people’s financial suitability based on credit scores alone, it could disfavor some groups undesirably." The solution, he says, was to "employ a wide range of data sources with the consideration of various aspects, such as employment history and financial behavior, to eliminate the use of biased historical data."

The same applies to sourcing – asking AI to source based on a range of factors, such as skills, behaviors, etc., rather than overly specific keywords or education requirements, can reduce biased results.

"It’s also imperative to have human oversight," Shaun warns. "Although technology can come in handy in analyzing big data, it should not be the sole basis of making final decisions."
