Once upon a time, a young recruiter wished to be bestowed with an all-powerful tool. One that would solve all their recruiting woes and help them find the perfect candidate without ever reading a resume. Their wish was granted by a stack of AI tools – shiny, expensive, and stitched together in haste. Each promised brilliance in a different way. But, once the switch was flipped, the monster lurched forward… loud, erratic, and without direction.

Sound familiar? However much we want to believe that the magic of AI equals better hiring, disconnected tools speaking different languages just fuel inconsistency. Sourcing says one thing. Screening says another. Interviews tell a third story. But, without a common language holding it together, what you get isn’t intelligence – it’s noise. Faster, but still noise.

So, how do you turn the chaos in your favor?

We’ve spoken to four hiring and recruitment experts to learn how high-performing teams are using a skills-first approach to data to link AI tools and turn fragmented information into coherent, actionable insights.

Why AI alone can’t save broken hiring

Without the right foundations, AI simply amplifies legacy problems with your recruitment process. To understand why, our experts told us, you need to look at how those systems actually interact day to day.

No common language

The most common and significant breaking point of AI stacks is the disconnect between multiple applications. Bob Gourley, Chief Technology Officer of OODA and author of The Cyber Threat, describes the resulting confusion:

“One tool might focus on a candidate’s potential, another one looks at their past work, and a third one might highlight something unrelated. They all have different opinions. So, who do you believe? The recruiter ends up spending more time figuring out these mixed messages instead of talking to candidates. The technology makes the job harder instead of easier, which slows everything down a lot.”

This insight hits the nail on the head – when there’s no common language, each system produces its own version of the truth. The burden of reconciliation falls back on recruiters, turning “automation” into yet another manual task.

Operational paralysis

The extra effort required to align these disparate tools, along with the associated costs, should not be underestimated. Emma Williams, Organizational Psychologist and Research Officer at HIGH5, estimates that “recruiters burn 40% of their time reconciling these disconnected systems.”

Similarly, Volen Vulkov, Cofounder of Enhancv, told us that a company he was called in to audit had doubled its time to offer “from 22 days to 42.” The cause of this, he says, was the extra troubleshooting required because siloed AI systems – each handling a different step of the hiring process – didn’t “play well with each other.”

This breakdown is called operational paralysis, and it’s where technology adds friction to a process, rather than removing it. Those 20 additional days may seem little more than an inconvenience on a spreadsheet, but they translate materially into lost productivity and stretched workloads for existing teams.

The lesson is clear – without a common foundation, AI stacks can actually slow hiring down, or make it even noisier.

Blind spots, or hiding-in-plain-sight spots?

Accepting that AI recruitment tools aren’t a cure-all is a good start. But to use AI in a way that actually improves hiring, you also need to understand which parts of your process lack visibility. In reality, these blind spots are hiding in plain sight and can be found at any handoff or workaround where recruiters or managers need to manually intervene.

If you don’t know where these hidden gaps are, implementing AI “solutions” will cement them into your workflow, making it even more difficult to understand what’s actually slowing you down. Not only that, but it also exposes you to poor hiring decisions and compliance failures.

Let’s dive deeper into some of these AI blind spots to find out what teams are getting wrong and what the best hiring managers are doing differently.

Keywords are the new buzzwords

AI is an echo chamber, brilliant at bouncing your ideas back at you with added conviction. As a result, candidates who are confident writers or skilled at mirroring job descriptions tend to rise to the top, regardless of actual capability.

Volen Vulkov has seen this firsthand:

“I coach thousands of applicants every year who face AI screening and learn how to build resumes that pass them. The entire practice of building resumes to pass AI screening has pushed applicants further away from authenticity and more towards deriving a proven psychological math formula.”

This almost scientific focus on keyword manipulation means the best candidates don’t always succeed. Instead, those who attended elite schools or universities where employment skills are taught as part of the curriculum – or those with the means to hire resume writers – will be favored by these language-led algorithms.

Not only does this reinforce inequalities, limiting social mobility, but it can also lead to poor hiring decisions by rewarding how well someone presents themselves, rather than their actual skills.

Bias, but automated

Many of our experts expressed their concern that AI-driven hiring is becoming less fair, not more. As Emma Williams says, “Fairness can never be there because one tool screens for keywords, another evaluates video responses, and a third scores assessments. Quality suffers because of measuring only fragments.”

Bob Gourley agrees that using disparate AI tools without a guiding framework erodes fairness. “When there is no common way of doing things, there are also no shared rules,” he says.

From our perspective, however, this isn’t really an AI problem: it’s a human one. AI can be consistent when it’s given clear, shared rules. The real issue is how humans design and combine these tools. Left unchecked, the AI echo chamber scales human judgment – for example, what “good” or “bad” looks like – at machine speed. This includes our blind spots, like our unconscious biases, which then turn into automated biases. If you’re recruiting at scale, this can snowball quickly, impacting employer reputation and workforce diversity.

Further, the concept of fairness goes hand in hand with explainability. Hiring managers need to be able to clearly justify their selection decisions. When they can’t, organizations are exposed to real liability.

Alice Duffy of Matheson Law Firm explains that this matters from a discrimination perspective:

“An employer must be able to show the reason it took a particular action, such as a decision not to recruit a particular applicant. The difficulty here is that the internal workings of the AI system are often invisible to the user, and it can be particularly challenging to unpick why a decision was reached by AI and the importance assigned to various factors.”

Put simply, if an employer cannot explain a hiring decision, there’s no evidence that it wasn’t biased or discriminatory. The burden of proof falls back on you, the employer, leaving your business open to discrimination claims, compliance failures, and irreparable damage to your brand.

This is where a skills-first AI stack shifts the conversation. It replaces invisible decision logic with defensible standards and evidence of candidate capability. This makes any AI-supported hiring choice easier to justify and far less exposed to reputational or legal fallout.

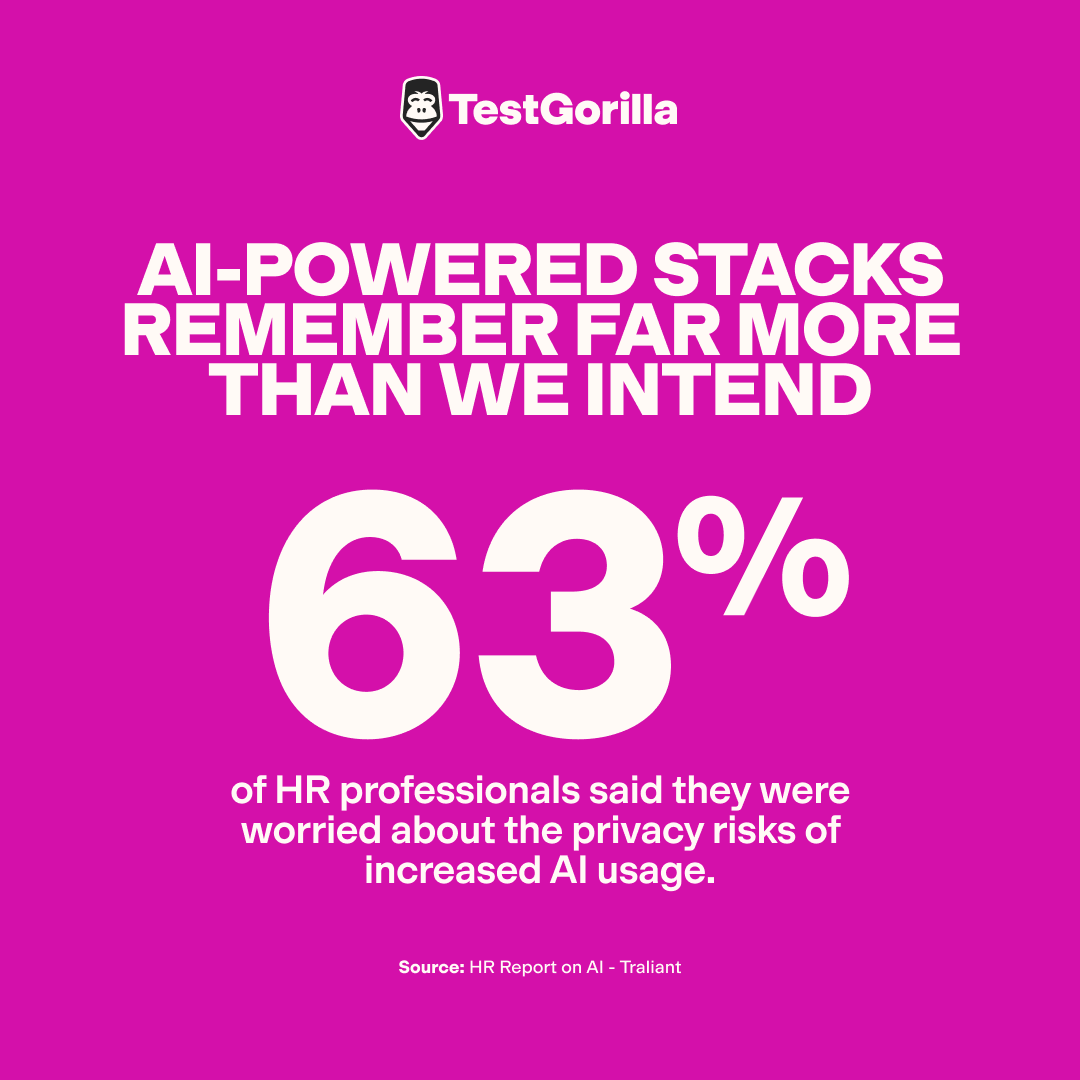

AI doesn’t forget

Another issue with AI-powered stacks is that they remember far more than we intend. The nature of large language models (LLMs) is that they learn by absorbing patterns and applying them repeatedly. That means they will repeat historical behavior, even if it no longer reflects your hiring practices today.

AI can also remember sensitive information about your candidates. Anything you feed into an AI-powered program may be processed, and in some cases retained, as part of its context, raising real questions about data retention, reuse, and long-term exposure. In a 2024 survey, 63% of HR professionals said they were worried about the privacy risks of increased AI usage.

Unfortunately, if you’re going to use AI, it’s nearly impossible to get around this. Although some AI tools, like Microsoft Copilot, market themselves as operating in a “ring-fenced environment,” they will still occasionally connect to the internet if they determine that something in your question needs external context to provide a meaningful response. This challenges the notion of complete data separation, and shows you can never really rely on default settings to protect sensitive information.

Retaining this historical data means sensitive candidate information can stay in circulation, resurface unexpectedly, and be reused in ways employers never intended or approved. It also means outdated or biased practices can leak into your hiring patterns, detracting from what really matters: candidate capability.

The answer is to flip the focus. In a world of infinite AI “shortcuts,” skills should become the common thread holding the hiring stack together. Without this, you might find yourself with a faster process but a weaker foundation. Read on to learn more about implementing a skills-first AI stack.

What is a skills-first AI stack?

A skills-first AI stack is a collection of AI recruitment tools, such as resume screeners, tests, or AI-powered interview technologies, designed to work together around a shared framework of role-specific skills, rather than inconsistent definitions of “good.”

At its core, a skills-first AI stack evaluates candidates on what they can actually do, rather than what they claim on paper. It prioritizes demonstrable qualities like abilities, capabilities, knowledge, and practical skills through skills-based assessments and structured interviews, rather than relying on resume buzzwords or traditional measures like college degrees or job titles.

What’s more, by aligning the stack to a job-relevant framework through a common skills-based “language,” every tool pulls in the same direction, ensuring consistency and supporting defensible hiring decisions.
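
To make the idea of a common skills “language” concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the skill names, the weights, the tool labels, and the `combine` helper are hypothetical and do not describe how any particular product works. It simply shows each tool reporting against the same role framework, so scores can be combined instead of reconciled by hand.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional

# Hypothetical framework for one role: skill name -> weight (weights sum to 1.0).
ROLE_FRAMEWORK = {"sql": 0.5, "data_analysis": 0.3, "communication": 0.2}


@dataclass(frozen=True)
class SkillScore:
    tool: str     # illustrative tool label, e.g. "assessment" or "interview"
    skill: str    # must be a skill named in the role framework
    score: float  # each tool's output, already rescaled to the range 0..1


def combine(scores: list[SkillScore], framework: dict[str, float]) -> dict:
    """Average the evidence for each skill across tools, then apply the weights."""
    unknown = {s.skill for s in scores} - set(framework)
    if unknown:
        # A tool reporting on something outside the framework is a red flag:
        # it is speaking a different "language" from the rest of the stack.
        raise ValueError(f"skills outside the framework: {sorted(unknown)}")

    per_skill: dict[str, Optional[float]] = {}
    for skill in framework:
        values = [s.score for s in scores if s.skill == skill]
        per_skill[skill] = mean(values) if values else None

    missing = [skill for skill, value in per_skill.items() if value is None]
    # Only produce an overall score when every skill has some evidence.
    overall = None if missing else sum(framework[k] * per_skill[k] for k in framework)
    return {"per_skill": per_skill, "missing": missing, "overall": overall}
```

The design choice worth noting is that the sketch refuses to produce an overall score while any skill lacks evidence, and it rejects scores for skills the framework doesn’t name. Both are one possible way to surface gaps rather than hide them.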

So, how do you implement a skills-first AI stack?

From speaking to our experts, we’ve determined that there are three core steps to creating a coherent, skills-first AI stack.

1. Don’t forget to KISS

That’s “Keep It Simple, Stupid!” As Bob Gourley told us, there’s no point in having (and paying for) multiple AI tools that all do the same thing, but in slightly different ways. His solution was “to combine three tools into one. This not only made things easier but also helped them work together better, leading to less confusion and clearer information.”

Volen Vulkov agrees:

“When it comes to predicting hiring processes, few well-connected tools, and those that fit and are shaped around skills-first assessments win every time against elaborate AI technology-hyped skill testing workflows. It makes hiring faster, fairer, and ultimately better employer brand-wise where it fundamentally matters.”

For example, you don’t need one tool that screens resumes, another that validates keywords in a resume, and a third that tries to pull the first two together. Look for tools that work together out of the box, or better yet, an all-in-one platform, like TestGorilla’s, that lets you conduct multi-measure testing, validate skills, screen resumes, carry out interviews, and rank candidates.

So, the advice here is to condense your stack. Pick fewer tools that work well together, instead of many that work inconsistently.

2. Double down on skills-first

At TestGorilla, we believe that skills-based hiring is the future. It’s a method of evaluating candidates based on what they can actually do, rather than where they’ve worked, or whether they know which keywords to include. It levels the playing field, preventing candidates who can talk the talk from outshining those who can actually walk the walk.

You can use skills testing at any stage of the hiring process, but we find it’s most productive before the resume screen. You can then move on to shortlisting before conducting face-to-face interviews. Testing at this stage narrows the recruitment pool with objective evidence, saving the hiring manager’s time by ensuring they only interview people with the genuine skills to do the job.

What’s more, with TestGorilla, you can create your own questions to get down into the nitty-gritty of the day-to-day tasks, ensuring the successful candidate can hit the ground running. You can also edit existing questions, add role-specific scenarios, and combine up to five tests to create your own custom assessments.

If you’re unsure which tests to use, TestGorilla’s AI-powered test suggestor can propose assessments aligned to the skills that actually matter for the role. This is great if you’re hiring for a role you’re unfamiliar with, or just want a little inspiration to guide your thought process.

3. Combine with intention

Don’t get us wrong – technology is great. It can take the heavy lifting off your hands, freeing you up for the work that really matters. But the next phase of AI-powered hiring isn’t about adding more tools, or even just about connecting them better; it’s about finding the right way to blend skills testing data with the human element.

Combining with intention means thinking carefully about the impact each tool, integration, or stage has on decision-making. Consider where you can afford to automate and where human judgment must stay firmly in the loop. While some parts of hiring benefit from speed, others benefit from pause – and building in a little accountability where necessary will always save time in the long run.

In practice, you’d start by using AI to assess core skills, helping remove noise and bias from the initial screen. Then, move on to a human pressure-test, using business knowledge and structured interview questions to add context, nuance, and accountability before making an offer. Automation can speed up shortlisting, but humans should own the decisions where risk and impact are highest.
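
The flow described above could be sketched roughly as follows, in Python. This is an illustration under stated assumptions rather than a recommended implementation: the `Candidate` fields, the `0.6` threshold, and the `shortlist` and `make_offer` helpers are all hypothetical. The point is only that automation narrows the pool, while an offer cannot go out without structured interview notes and a named human approver.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    name: str
    skill_score: float                 # 0..1, from a skills assessment
    interview_notes: str = ""          # written by a person after a structured interview
    approved_by: Optional[str] = None  # the human who owns the final decision


def shortlist(candidates: list[Candidate], threshold: float = 0.6) -> list[Candidate]:
    """Automated step: narrow the pool on assessed skills only."""
    return [c for c in candidates if c.skill_score >= threshold]


def make_offer(candidate: Candidate) -> dict:
    """High-impact step: refuse to proceed without a named human reviewer."""
    if not candidate.interview_notes or not candidate.approved_by:
        raise PermissionError(
            f"{candidate.name}: offer needs interview notes and a human approver"
        )
    # Return a record that explains the decision if it is ever questioned.
    return {
        "candidate": candidate.name,
        "skill_score": candidate.skill_score,
        "interview_notes": candidate.interview_notes,
        "approved_by": candidate.approved_by,
    }
```

Returning a plain record from `make_offer` is a deliberate choice in this sketch: it keeps the skills evidence, the interview notes, and the approver together, which is the kind of trail that makes a decision easier to explain and defend later.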

So, take time building your stack, combine with intention, and design a process you can explain, defend, and trust.

AI stacks: modern fairytale, or cautionary tale?

AI stacks aren’t broken, but your approach to using them might be. When you bolt disconnected tools onto legacy workflows without a shared brain, you get a Frankenstein’s monster of automation. It’s unpredictable, moves fast, and makes noise. And just like in the original story, the monster acts on what it was taught. Left unchecked, it could really hurt someone – by amplifying bias or unfairness – and you’ll be the one with the wrench in your hand when the regulators come knocking.

So, instead of chasing the next shiny AI feature, choose a skills-first approach that turns chaos into consistency. Make sure every tool speaks the same language, tied to a common, role-specific framework that evaluates abilities rather than buzzwords. Keep your stacks small, combine with intention, and don’t be afraid of a little human intervention. Heed the moral and lead with skills, or don’t be surprised if your monster turns on you.

Contributors

Bob Gourley - OODA, Chief Technology Officer

Emma Williams - HIGH5, Research Officer

Volen Vulkov - Enhancv, Cofounder

Alice Duffy - Matheson Law Firm, Partner
