Hiring’s dirty little secret: AI runs on human intelligence (and all the bias that comes with it)

AI first hit the hiring scene with a bang. It felt like the answer to every recruiter’s problems. It promised to speed up agonizing manual tasks like resume screening, remove human error from the process, and, above all, neutralize bias that had plagued hiring for far too long.

Tijana Tadic, TA partner lead at Gentoo Media, recalls the anticipation, with many teams believing AI was “‘neutral’ or comprehensive enough to handle hiring independently.” Milos Eric, general manager at OysterLink, also frequently encountered “the belief that AI is […] fair the moment it is turned on.”

But it didn’t take long for the shine to wear off. Hiring teams quickly realized that these tools weren’t as objective as they were believed to be. Edward Hones, owner of Hones Law, shares how he started seeing “bias emerge in subtle ways”:

“Resume screeners that downgrade candidates who attended certain schools, matching tools that inadvertently favor traditionally male career trajectories, or interview analyzers that misinterpret communication styles tied to neurodiversity or cultural background.”

Honestly, we’re lucky these problems surfaced before humans handed over the hiring reins entirely to AI. According to Hones, “These issues only came to light because people questioned unexpected patterns rather than assuming the algorithm was correct.”

The good news: Since uncovering these flaws, experts have asked even more questions, connected the dots, and now have a clearer understanding of why AI discriminates in the first place. These insights have opened the door to promising AI hiring solutions that can beat the bias out of automation once and for all.

Table of contents

- Cutting the BS: AI isn’t magic; it’s a sleight of hand with humans still doing the invisible work

- Hiring bias: It’s not AI, it’s you

- Zooming in: How this human-born, AI-replicated bias shows up in hiring

- But it’s not all doom and gloom: Humans can beat the bias out of automation

- Successful automation takes both human and machine

- Contributors

Cutting the BS: AI isn’t magic; it’s a sleight of hand with humans still doing the invisible work

First, to understand why AI sometimes acts unfairly, you need to know how it actually works. And this is where people get things really wrong.

Many think of AI as a self-running machine or a robotic force that automates everything on its own, without human intervention. But this couldn’t be further from the truth. AI doesn’t work out of thin air. In reality, it runs on quiet, unseen human labor happening behind the scenes.

AI models like resume screeners or video analyzers learn from past hiring data, which reflects human decisions about who to progress or reject.

Additionally, there are actually people who actively train AI models. For example, they tag and sort information so the AI knows what “good” versus “bad” resumes or interview answers look like.

They also read and rate candidate answers or sample text so AI tools can copy those patterns. Moreover, some people moderate content to teach AI algorithms how to flag language that seems harmful, misleading, or inappropriate.

But who does all this work? According to the International Labour Organization (ILO), remote, low-cost crowd workers earning less than $2/hour take on these microtasks on unregulated platforms where anyone can sign up, there’s no employment relationship, and pay rates are unmonitored.

Why does this matter? One study found that low pay for AI and machine learning (ML) annotation tasks lowered the quality of the output: workers rushed through tasks to maximize their earnings and produced careless responses as a result.

Further, I doubt these workers are trained in concepts like bias, fairness, or cultural nuance, in which case, the training they provide AI models with could be flawed right off the bat. This makes me wonder: are we the source of bias in AI?

Hiring bias: It’s not AI, it’s you

When you understand how AI really works, everything starts to make sense. Human intelligence powers the technology but also warps it.

Hones explains this well when he says AI isn’t neutral because “it reflects the assumptions, data, and design of the humans who built it.”

Jim Hickey, president and managing partner at Perpetual Talent Solutions, agrees and adds that because of this, “[AI] remains just as preferential as humans. Worse, it can very quickly replicate these biases at scale.”

If you ask me, that’s where the real danger lies. While a single biased decision by one human affects a handful of candidates, a biased algorithm can affect thousands.

Zooming in: How this human-born, AI-replicated bias shows up in hiring

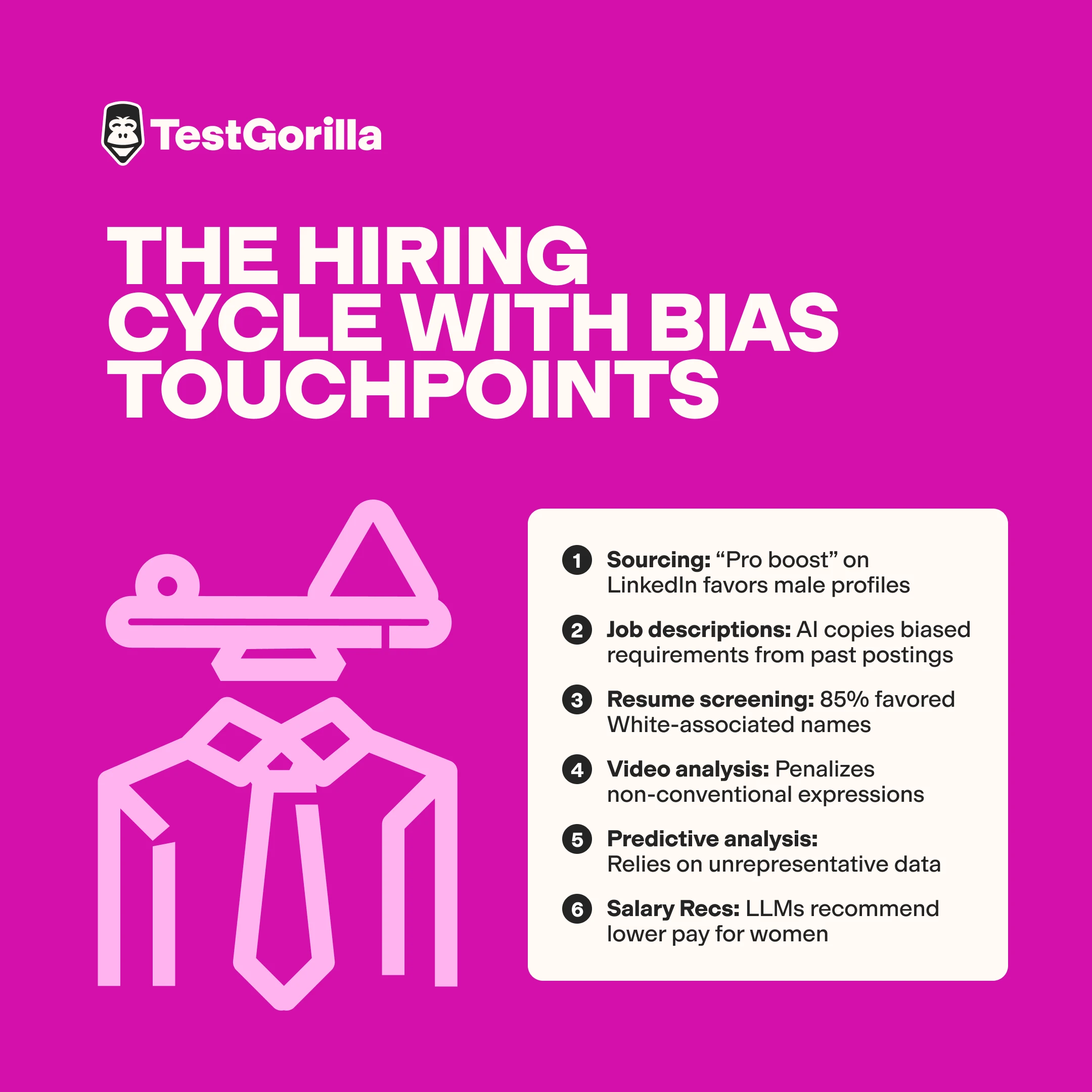

Understanding what causes AI bias in hiring gets you one step closer to fixing it. But before that, it’s also critical to learn where exactly this bias shows up in the recruitment cycle (hint: contrary to popular belief, it's not just in the screening stage).

Sourcing and talent discovery

Algorithmic bias can show up as early as the sourcing stage – long before a job seeker even applies for a role. Today, most sourcing happens online through AI-assisted tools built into professional networking sites and job boards. While these platforms feel convenient and provide access to millions of skilled candidates, they’re far from perfect.

Let’s take LinkedIn Recruiter, for example. Many recruiters rely on the Boolean search functionality to source candidates whose profiles mention specific keywords, such as job titles, degrees, skills, or locations. But some of the experts we spoke to say LinkedIn doesn’t just rank based on keywords.

Kira Byrd, chief accountant and compliance strategist at Curl Centric, tells TestGorilla, “Engagement and connections have a greater impact on search results.” And Nick Derham, owner of Adria Solutions, echoes this: “The algorithm seems to favour users who are more active or already have high visibility.”

But here’s where the bias angle kicks in: LinkedIn has recently been criticized for what many are calling the “bro boost.” Multiple women claim their LinkedIn traffic increased when they changed their gender pronouns to he/him, switched the gender on their profile settings, or asked ChatGPT to make the language on their profiles more “male-like.”

LinkedIn denies these allegations, and not everyone has had the same experience. But the anecdotal evidence continues to stack up.

So let’s do the math: If men’s accounts get more visibility, and the algorithm rewards more engaging profiles, you can guess who shows up first in recruiters’ searches.

Meanwhile, in a parallel universe, even job boards like ZipRecruiter are being scrutinized for sourcing bias. A recent Harvard Business Review article claims that the platform automatically learns recruiters’ preferences and uses this data to source similar profiles for searchers:

“If the system notices that recruiters happen to interact more frequently with white men, it may well find proxies for those characteristics (like being named Jared or playing high school lacrosse) and replicate that pattern. This sort of adverse impact can happen without explicit instruction, and worse, without anyone realizing.”

Job descriptions

Our latest State of Skills-Based Hiring report reveals that 3 in 5 employers use AI to write their job descriptions.

My hypothesis? If you’re not careful, bias can slip through here as well. I put my theory to the test by asking ChatGPT to write a Chief Technology Officer role description.

While I was pleased to see it avoided gendered terms like “ninja” or “rockstar,” it did ask for “10–15 years of experience in technology leadership roles” – a pattern it likely picked up from traditional CTO postings.

Unfortunately, this kind of requirement can discourage applications from younger candidates, women who’ve taken time out for childcare, and others with non-linear career paths, even if they’re perfectly skilled for the job.

Resume screening

Today, AI and machine learning tools are used to parse resumes, find candidates who match recruiters’ desired keywords or criteria, and even decide who makes it to the next stage.

And according to Eric, this is where AI’s inherited biases tend to show up the most. “The algorithm constantly favors similar career paths, or favors the traditional model and excludes the non traditional background,” he explains.

Here are some examples of biases that surface during AI resume screening.

Gender bias

Amazon previously scrapped its ML resume screener after discovering that the system penalized resumes containing the word “women’s,” as in “women’s chess club captain.” Having been trained on 10 years of the company’s historical hiring patterns, it simply copied how decisions used to be made.

Socio-economic bias

A Harvard Business School report found that AI screening tools built into applicant tracking systems relied heavily on proxies like college degrees to make judgments about a candidate’s skills and abilities.

Lacey Kaelani, CEO & co-founder of Metaintro, also touched on this: “[If] an organization traditionally hired from certain colleges or individuals with certain job titles on their resumes, the AI will train and learn on examples of what your ‘good candidate’ looks like.”

This means these tools are more likely to prioritize candidates with degrees and exclude those without access to higher education, creating unnecessary social mobility barriers.

Racial bias

A recent study examining more than 500 resumes across nine occupations found that some AI resume screening tools consistently favored White-associated names in 85% of cases. And it gets worse – Black male candidates were disadvantaged in nearly 100% of scenarios.

Behavioral video analysis

Some employers use AI behavioral analysis tools to assess candidates’ soft skills and personality over video interviews.

But again, they’re not free from bias, and HireVue is a prime example.

HireVue’s software was designed to judge an interviewee’s traits by analyzing their sentiments, voice, tone, and even facial expressions. But the company had to decommission the tool because of flawed and biased outcomes.

In one article about this case, Merve Hickok, a lecturer and speaker on AI ethics, bias, and governance, points out that “Facial expressions are not universal – they can change due to culture, context, and disabilities – and they can also be gamed.”

A tool like this could easily reject a disabled or neurodivergent candidate for not portraying the conventional expressions it considers “desirable.”

Predictive analytics on final hiring decisions

Every expert we spoke to agreed on one thing: AI shouldn’t make the final hiring call.

For example, Tadic says, “We use AI as a supportive tool and a facilitator rather than a decision-maker,” while Eric adds, “In my experience, the best way to keep AI hiring tools fair is to think of them as assistants rather than decision makers.”

That said, the decision stage isn’t entirely AI-free. Recruiters often use predictive hiring tools that analyze all the data they’ve collected about a candidate so far, compare it with profiles of previous high performers, and predict which candidates are most likely to succeed in a role.

The problem? Research has shown that these predictive analytics tools rely on “unrepresentative data, historical prejudices, and opaque algorithmic processes,” which leads to unfair outcomes and exacerbates social inequalities.

Salary recommendations

Finally, recruiters are using AI tools to get salary recommendations for their roles. These tools draw on historical salary data and market benchmarks to estimate what someone should earn, so any pay gaps in that data can carry over into the recommendations. Research confirms the risk.

A recent study found that five commonly used large language models (LLMs), including ChatGPT, consistently recommended lower salaries for women than for equally qualified men. And the discrimination didn’t stop at gender.

The models also recommended lower pay for Hispanic candidates and other people of color than for White candidates, and lower pay for refugees than for expats.

But it’s not all doom and gloom: Humans can beat the bias out of automation

At this point, the evidence is crystal clear. AI isn’t some kind of bias-free shortcut to good hiring. The real magic happens when humans work with AI, making the most of its efficiency and speed while staying alert to potential drawbacks.

Here are some practical ways experts are eliminating AI and automation bias from the hiring process.

Tapping into diverse and vetted sourcing pools

You don’t need to stop using LinkedIn and job boards to source talent. But given the potential bias baked into their algorithms, it’s best to diversify your sourcing strategy to include more objective methods.

For example, TestGorilla Sourcing gives you access to more than 2 million diverse candidate profiles. And the best part is they’ve already been tested for skills. When you’re working with hard data about candidates’ abilities, there's less chance for biased algorithms to influence who makes it into your pipeline.

Fighting fire with fire: Using AI models to fix biased job descriptions

Ironically, while AI tools can replicate bias, you can also use AI to spot and remove it, especially in role descriptions.

In one study, researchers developed ML models to identify five major types of biased or discriminatory language in job postings: masculine-coded, feminine-coded, exclusive, LGBTQ-coded, and demographic or racial language. The model successfully identified discriminatory patterns with high accuracy.

And there are now other models out there that help in the same way. For instance, I popped the CTO role description ChatGPT wrote for me into a tool called “Gender Decoder,” and it pinpointed the exact problematic language (check out my results here).

Alternatively, even well-structured prompts can help you spot issues using ChatGPT, Gemini, or other LLMs.
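To make the idea of a wording check concrete, here is a minimal Python sketch of how a tool might scan a job description for gender-coded terms. It is not how Gender Decoder or the researchers' models work internally. The two stem lists are a tiny hand-picked sample for illustration, and the function name `find_coded_words` is hypothetical.

```python
import re

# Illustrative stems only: a small hand-picked sample, NOT the word list
# used by Gender Decoder or by the study mentioned above.
MASCULINE_STEMS = ("compet", "domina", "assert", "aggress", "fearless")
FEMININE_STEMS = ("support", "collab", "nurtur", "empath", "interperson")


def find_coded_words(text):
    """Return the masculine- and feminine-coded words found in text, in order."""
    words = re.findall(r"[a-z]+", text.lower())
    found = {"masculine": [], "feminine": []}
    for word in words:
        # str.startswith accepts a tuple and matches any of its prefixes
        if word.startswith(MASCULINE_STEMS):
            found["masculine"].append(word)
        elif word.startswith(FEMININE_STEMS):
            found["feminine"].append(word)
    return found


sample = "We want a competitive, assertive leader who can support a collaborative team."
result = find_coded_words(sample)
print(result)
```

A match is only a prompt for a human to reread the sentence. Stem matching is crude, and a flagged word isn't automatically a problem in context.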

Choosing AI tools that bring the HEAT (Human-led, Explainable, Auditable, and Transparent)

Not all hiring AI tools are created equal. Some systems are better than others, according to Hones:

“I’ve learned that the most reliable safeguard against AI bias is designing workflows that require humans to review, question, and override automated outputs. AI tools can screen for qualifications at scale, but they can't understand context, career gaps, or systemic inequities the way a trained HR professional can.”

And this is where TestGorilla’s AI resume scoring and AI video interviewing tools really shine.

In both these models, you (the human) set the job criteria that the AI will use to assess a candidate. The AI then scores the resumes (after removing any personal identifiers for extra fairness) or video interview responses against those criteria.

Importantly, it transparently explains why it gave a certain score, and lets you override scores if you know something the AI doesn’t – for instance, if a candidate has a career gap because of caregiving.

Hones emphasizes that these specific aspects – “Structured interviews, calibrated scoring rubrics, and explicit human review of any AI-generated recommendation” – are key to eliminating bias.

And the importance of human review extends to all your AI systems, including predictive analytics or salary recommendation tools. You can, for instance, take a test profile, change only the candidate’s gender or race, and check whether the outcome changes. If something feels off, it probably is.
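If your team has someone comfortable with a little scripting, that swap-and-compare check can be automated. The Python sketch below is one way to do it, under stated assumptions: `toy_score` is a hypothetical stand-in for whatever AI tool you use (deliberately biased here so the check has something to catch), and the profile fields are invented for illustration.

```python
def counterfactual_scores(score_fn, profile, field, alternatives):
    """Score copies of a profile that differ only in one field."""
    scores = {}
    for value in alternatives:
        variant = dict(profile)  # copy, so the original profile is untouched
        variant[field] = value
        scores[value] = score_fn(variant)
    return scores


def flag_gap(scores, tolerance=0.0):
    """True if swapping the field moved the score by more than tolerance."""
    return max(scores.values()) - min(scores.values()) > tolerance


# Hypothetical stand-in for an AI scoring tool. In practice you would
# replace this with a call to the tool you are checking.
def toy_score(profile):
    score = 10 * profile["years_experience"]
    if profile["gender"] == "female":
        score -= 5  # the unfair penalty this check is meant to surface
    return score


profile = {"name": "Candidate A", "years_experience": 6, "gender": "female"}
scores = counterfactual_scores(toy_score, profile, "gender", ["female", "male"])
print(scores)
print(flag_gap(scores))
```

Because the two versions of the profile are identical apart from one field, any gap in the scores can only come from that field. Real tools may pick up on proxies such as names or schools instead, so it's worth repeating the check with those fields too.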

Finally, you should ensure the AI tools you use undergo regular auditing instead of a one-time check. Why? Kaelani explains this with a bitter truth: “AI bias is not a one-time fix. Bias will continue to creep back in as the model learns from your new data and any pattern shifts. There's truly no such thing as ‘objective AI.’”

That's why TestGorilla’s AI tools, for instance, are routinely checked to ensure fair outcomes across demographic groups.
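What might a recurring check across demographic groups look like? One common rule of thumb, which comes from the EEOC's Uniform Guidelines rather than from any vendor quoted here, is the "four-fifths rule": compare each group's selection rate with the highest group's rate and look closer at any group that falls below 80%. The Python sketch below assumes that approach. The numbers are made up, the function names are hypothetical, and it assumes every group has at least one applicant and at least one group has someone advancing.

```python
def selection_rates(outcomes):
    """outcomes maps group -> (number advanced, number of applicants)."""
    return {group: advanced / total for group, (advanced, total) in outcomes.items()}


def impact_ratios(outcomes):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    return {group: rate / best for group, rate in rates.items()}


def flagged_groups(outcomes, threshold=0.8):
    """Groups whose impact ratio falls below the threshold (four-fifths by default)."""
    return [group for group, ratio in impact_ratios(outcomes).items() if ratio < threshold]


# Made-up numbers for illustration only.
outcomes = {
    "group_a": (50, 100),  # 50 of 100 applicants advanced
    "group_b": (30, 100),  # 30 of 100 applicants advanced
    "group_c": (45, 100),  # 45 of 100 applicants advanced
}
print(impact_ratios(outcomes))
print(flagged_groups(outcomes))
```

A ratio below the threshold is a signal to investigate, not proof of discrimination, and small applicant pools can swing the numbers a lot. Running the same check on every new batch of decisions is what turns it into the regular audit Kaelani describes.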

Complementing AI outputs with skills assessments

Skills tests give you measurable data on what a candidate can actually do in real life. When you look at this objective data first, or even alongside potentially warped AI outputs, there’s less room for bias.

And multi-measure testing – where you assess candidates’ hard skills, soft skills, personality traits, cognitive abilities, and cultural alignment – is even more solid. It gives you holistic, unbiased data to work with, so you don’t need to rely on behavioral video analysis tools that aren’t quite ready for fair hiring yet.

In fact, 91% of employers who use multi-measure testing say they’re making better-quality hires.

Training hiring teams to recognize bias

Keeping people in hiring decisions is only useful if they know how to detect flawed outputs, biased patterns, or suspicious scoring. That’s why it’s critical to train everyone from recruiters to hiring managers on common bias types, how they surface in AI, and how to fix them.

As a starting point, you can follow the US Equal Employment Opportunity Commission (EEOC)’s formal guidance on using AI in hiring. This way, your team will always be on the lookout, making it less likely for bias to creep in. As Eric rightly says, “Teams that apply curiosity and skepticism see bias show up early.”

Successful automation takes both human and machine

AI has been a game-changer in speeding up hiring. Unfortunately, it hasn’t always been fair because it runs on an engine of human intelligence, which both fuels and distorts it at every stage of the hiring process.

That said, there’s no need to panic or abandon automation entirely. The key is to use it wisely, combining the right tools with human input.

As Hones aptly says, “Ethical AI-assisted hiring doesn’t happen by accident; it happens when organizations pair smart automation with equally smart, accountable humans.”

Contributors

Edward Hones, Hones Law, Owner

Jim Hickey, Perpetual Talent Solutions, President and Managing Partner

Kira Byrd, Curl Centric, Chief Accountant and Compliance Strategist

Lacey Kaelani, Metaintro, CEO & Co-Founder

Milos Eric, OysterLink, General Manager

Nick Derham, Adria Solutions, Owner

Tijana Tadic, Gentoo Media, Talent Acquisition Partner Lead